Central processing unit

Encyclopedia

The central processing unit (CPU) is the portion of a computer

system that carries out the instructions of a computer program

, to perform the basic arithmetical, logical, and input/output

operations of the system. The CPU plays a role somewhat analogous to the brain

in the computer. The term has been in use in the computer industry at least since the early 1960s. The form, design and implementation of CPUs have changed dramatically since the earliest examples, but their fundamental operation remains much the same.

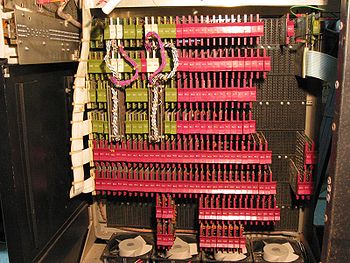

On large machines, CPUs require one or more printed circuit board

s. On personal computers and small workstations, the CPU is housed in a single silicon chip

called a microprocessor

. Since the 1970s the microprocessor class of CPUs has almost completely overtaken all other CPU implementations. Modern CPUs are large scale integrated circuit

s in packages typically less than four centimeters square, with hundreds of connecting pins.

Two typical components of a CPU are the arithmetic logic unit

(ALU), which performs arithmetic and logical operations, and the control unit

(CU), which extracts instructions from memory and decodes and executes them, calling on the ALU when necessary.

Not all computational systems rely on a central processing unit. An array processor or vector processor

has multiple parallel computing elements, with no one unit considered the "center". In the distributed computing

model, problems are solved by a distributed interconnected set of processors.

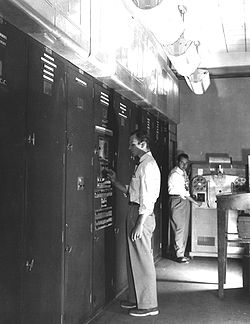

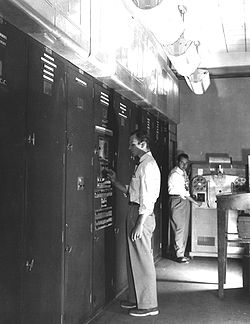

Computers such as the ENIAC

Computers such as the ENIAC

had to be physically rewired in order to perform different tasks, which caused these machines to be called "fixed-program computers." Since the term "CPU" is generally defined as a software (computer program) execution device, the earliest devices that could rightly be called CPUs came with the advent of the stored-program computer.

The idea of a stored-program computer was already present in the design of J. Presper Eckert

and John William Mauchly's ENIAC, but was initially omitted so that it could be finished sooner. On June 30, 1945, before ENIAC was made, mathematician John von Neumann

distributed the paper entitled First Draft of a Report on the EDVAC

. It was the outline of a stored-program computer that would eventually be completed in August 1949. EDVAC was designed to perform a certain number of instructions (or operations) of various types. These instructions could be combined to create useful programs for the EDVAC to run. Significantly, the programs written for EDVAC were stored in high-speed computer memory rather than specified by the physical wiring of the computer. This overcame a severe limitation of ENIAC, which was the considerable time and effort required to reconfigure the computer to perform a new task. With von Neumann's design, the program, or software, that EDVAC ran could be changed simply by changing the contents of the memory.

Early CPUs were custom-designed as a part of a larger, sometimes one-of-a-kind, computer. However, this method of designing custom CPUs for a particular application has largely given way to the development of mass-produced processors that are made for many purposes. This standardization began in the era of discrete transistor

mainframes

and minicomputer

s and has rapidly accelerated with the popularization of the integrated circuit

(IC). The IC has allowed increasingly complex CPUs to be designed and manufactured to tolerances on the order of nanometers. Both the miniaturization and standardization of CPUs have increased the presence of digital devices in modern life far beyond the limited application of dedicated computing machines. Modern microprocessors appear in everything from automobile

s to cell phones and children's toys.

While von Neumann is most often credited with the design of the stored-program computer because of his design of EDVAC, others before him, such as Konrad Zuse

, had suggested and implemented similar ideas. The so-called Harvard architecture

of the Harvard Mark I

, which was completed before EDVAC, also utilized a stored-program design using punched paper tape

rather than electronic memory. The key difference between the von Neumann and Harvard architectures is that the latter separates the storage and treatment of CPU instructions and data, while the former uses the same memory space for both. Most modern CPUs are primarily von Neumann in design, but elements of the Harvard architecture are commonly seen as well.

Relay

s and vacuum tube

s (thermionic valves) were commonly used as switching elements; a useful computer requires thousands or tens of thousands of switching devices. The overall speed of a system is dependent on the speed of the switches. Tube computers like EDVAC

tended to average eight hours between failures, whereas relay computers like the (slower, but earlier) Harvard Mark I

failed very rarely. In the end, tube based CPUs became dominant because the significant speed advantages afforded generally outweighed the reliability problems. Most of these early synchronous CPUs ran at low clock rate

s compared to modern microelectronic designs (see below for a discussion of clock rate). Clock signal frequencies ranging from 100 kHz

to 4 MHz were very common at this time, limited largely by the speed of the switching devices they were built with.

The design complexity of CPUs increased as various technologies facilitated building smaller and more reliable electronic devices. The first such improvement came with the advent of the transistor

The design complexity of CPUs increased as various technologies facilitated building smaller and more reliable electronic devices. The first such improvement came with the advent of the transistor

. Transistorized CPUs during the 1950s and 1960s no longer had to be built out of bulky, unreliable, and fragile switching elements like vacuum tube

s and electrical relays

. With this improvement more complex and reliable CPUs were built onto one or several printed circuit board

s containing discrete (individual) components.

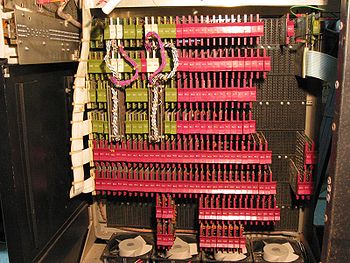

During this period, a method of manufacturing many transistors in a compact space gained popularity. The integrated circuit

(IC) allowed a large number of transistors to be manufactured on a single semiconductor

-based die

, or "chip." At first only very basic non-specialized digital circuits such as NOR gate

s were miniaturized into ICs. CPUs based upon these "building block" ICs are generally referred to as "small-scale integration" (SSI) devices. SSI ICs, such as the ones used in the Apollo guidance computer

, usually contained up to a few score transistors. To build an entire CPU out of SSI ICs required thousands of individual chips, but still consumed much less space and power than earlier discrete transistor designs. As microelectronic technology advanced, an increasing number of transistors were placed on ICs, thus decreasing the quantity of individual ICs needed for a complete CPU. MSI and LSI (medium- and large-scale integration) ICs increased transistor counts to hundreds, and then thousands.

In 1964 IBM

introduced its System/360

computer architecture which was used in a series of computers that could run the same programs with different speed and performance. This was significant at a time when most electronic computers were incompatible with one another, even those made by the same manufacturer. To facilitate this improvement, IBM utilized the concept of a microprogram (often called "microcode"), which still sees widespread usage in modern CPUs. The System/360 architecture was so popular that it dominated the mainframe computer

market for decades and left a legacy that is still continued by similar modern computers like the IBM zSeries

. In the same year (1964), Digital Equipment Corporation

(DEC) introduced another influential computer aimed at the scientific and research markets, the PDP-8

. DEC would later introduce the extremely popular PDP-11

line that originally was built with SSI ICs but was eventually implemented with LSI components once these became practical. In stark contrast with its SSI and MSI predecessors, the first LSI implementation of the PDP-11 contained a CPU composed of only four LSI integrated circuits.

Transistor-based computers had several distinct advantages over their predecessors. Aside from facilitating increased reliability and lower power consumption, transistors also allowed CPUs to operate at much higher speeds because of the short switching time of a transistor in comparison to a tube or relay. Thanks to both the increased reliability as well as the dramatically increased speed of the switching elements (which were almost exclusively transistors by this time), CPU clock rates in the tens of megahertz were obtained during this period. Additionally while discrete transistor and IC CPUs were in heavy usage, new high-performance designs like SIMD

(Single Instruction Multiple Data) vector processor

s began to appear. These early experimental designs later gave rise to the era of specialized supercomputer

s like those made by Cray Inc.

(Silicon Gate MOS ICs with self aligned gates along with his new random logic design methodology) changed the design and implementation of CPUs forever. Since the introduction of the first commercially available microprocessor (the Intel 4004

), in 1970 and the first widely used microprocessor

(the Intel 8080

) in 1974, this class of CPUs has almost completely overtaken all other central processing unit implementation methods. Mainframe and minicomputer manufacturers of the time launched proprietary IC development programs to upgrade their older computer architecture

s, and eventually produced instruction set

compatible microprocessors that were backward-compatible with their older hardware and software. Combined with the advent and eventual vast success of the now ubiquitous personal computer

, the term CPU is now applied almost exclusively to microprocessors. Several CPUs can be combined in a single processing chip.

Previous generations of CPUs were implemented as discrete components and numerous small integrated circuit

s (ICs) on one or more circuit boards. Microprocessors, on the other hand, are CPUs manufactured on a very small number of ICs; usually just one. The overall smaller CPU size as a result of being implemented on a single die means faster switching time because of physical factors like decreased gate parasitic capacitance

. This has allowed synchronous microprocessors to have clock rates ranging from tens of megahertz to several gigahertz. Additionally, as the ability to construct exceedingly small transistors on an IC has increased, the complexity and number of transistors in a single CPU has increased dramatically. This widely observed trend is described by Moore's law

, which has proven to be a fairly accurate predictor of the growth of CPU (and other IC) complexity to date.

While the complexity, size, construction, and general form of CPUs have changed drastically over the past sixty years, it is notable that the basic design and function has not changed much at all. Almost all common CPUs today can be very accurately described as von Neumann stored-program machines. As the aforementioned Moore's law continues to hold true, concerns have arisen about the limits of integrated circuit transistor technology. Extreme miniaturization of electronic gates is causing the effects of phenomena like electromigration

and subthreshold leakage

to become much more significant. These newer concerns are among the many factors causing researchers to investigate new methods of computing such as the quantum computer

, as well as to expand the usage of parallelism

and other methods that extend the usefulness of the classical von Neumann model.

The first step, fetch, involves retrieving an instruction (which is represented by a number or sequence of numbers) from program memory. The location in program memory is determined by a program counter

(PC), which stores a number that identifies the current position in the program. After an instruction is fetched, the PC is incremented by the length of the instruction word in terms of memory units. Often, the instruction to be fetched must be retrieved from relatively slow memory, causing the CPU to stall while waiting for the instruction to be returned. This issue is largely addressed in modern processors by caches and pipeline architectures (see below).

The instruction that the CPU fetches from memory is used to determine what the CPU is to do. In the decode step, the instruction is broken up into parts that have significance to other portions of the CPU. The way in which the numerical instruction value is interpreted is defined by the CPU's instruction set architecture (ISA). Often, one group of numbers in the instruction, called the opcode, indicates which operation to perform. The remaining parts of the number usually provide information required for that instruction, such as operands for an addition operation. Such operands may be given as a constant value (called an immediate value), or as a place to locate a value: a register

or a memory address, as determined by some addressing mode

. In older designs the portions of the CPU responsible for instruction decoding were unchangeable hardware devices. However, in more abstract and complicated CPUs and ISAs, a microprogram is often used to assist in translating instructions into various configuration signals for the CPU. This microprogram is sometimes rewritable so that it can be modified to change the way the CPU decodes instructions even after it has been manufactured.

After the fetch and decode steps, the execute step is performed. During this step, various portions of the CPU are connected so they can perform the desired operation. If, for instance, an addition operation was requested, the arithmetic logic unit

(ALU) will be connected to a set of inputs and a set of outputs. The inputs provide the numbers to be added, and the outputs will contain the final sum. The ALU contains the circuitry to perform simple arithmetic and logical operations on the inputs (like addition and bitwise operations). If the addition operation produces a result too large for the CPU to handle, an arithmetic overflow flag in a flags register may also be set.

The final step, writeback, simply "writes back" the results of the execute step to some form of memory. Very often the results are written to some internal CPU register for quick access by subsequent instructions. In other cases results may be written to slower, but cheaper and larger, main memory. Some types of instructions manipulate the program counter rather than directly produce result data. These are generally called "jumps" and facilitate behavior like loops, conditional program execution (through the use of a conditional jump), and functions

in programs. Many instructions will also change the state of digits in a "flags" register. These flags can be used to influence how a program behaves, since they often indicate the outcome of various operations. For example, one type of "compare" instruction considers two values and sets a number in the flags register according to which one is greater. This flag could then be used by a later jump instruction to determine program flow.

After the execution of the instruction and writeback of the resulting data, the entire process repeats, with the next instruction cycle

normally fetching the next-in-sequence instruction because of the incremented value in the program counter. If the completed instruction was a jump, the program counter will be modified to contain the address of the instruction that was jumped to, and program execution continues normally. In more complex CPUs than the one described here, multiple instructions can be fetched, decoded, and executed simultaneously. This section describes what is generally referred to as the "classic RISC pipeline

", which in fact is quite common among the simple CPUs used in many electronic devices (often called microcontroller). It largely ignores the important role of CPU cache

, and therefore the access stage of the pipeline.

Hardwired into a CPU's design is a list of basic operations it can perform, called an instruction set

. Such operations may include adding or subtracting two numbers, comparing numbers, or jumping to a different part of a program. Each of these basic operations is represented by a particular sequence of bits

; this sequence is called the opcode

for that particular operation. Sending a particular opcode to a CPU will cause it to perform the operation represented by that opcode. To execute an instruction in a computer program, the CPU uses the opcode for that instruction as well as its arguments (for instance the two numbers to be added, in the case of an addition operation). A computer program

is therefore a sequence of instructions, with each instruction including an opcode and that operation's arguments.

The actual mathematical operation for each instruction is performed by a subunit of the CPU known as the arithmetic logic unit

or ALU. In addition to using its ALU to perform operations, a CPU is also responsible for reading the next instruction from memory, reading data specified in arguments from memory, and writing results to memory.

In many CPU designs, an instruction set will clearly differentiate between operations that load data from memory, and those that perform math. In this case the data loaded from memory is stored in registers

, and a mathematical operation takes no arguments but simply performs the math on the data in the registers and writes it to a new register, whose value a separate operation may then write to memory.

(base ten) numeral system

to represent numbers internally. A few other computers have used more exotic numeral systems like ternary

(base three). Nearly all modern CPUs represent numbers in binary

form, with each digit being represented by some two-valued physical quantity such as a "high" or "low" volt

age.

Related to number representation is the size and precision of numbers that a CPU can represent. In the case of a binary CPU, a bit refers to one significant place in the numbers a CPU deals with. The number of bits (or numeral places) a CPU uses to represent numbers is often called "word size", "bit width", "data path width", or "integer precision" when dealing with strictly integer numbers (as opposed to Floating point

Related to number representation is the size and precision of numbers that a CPU can represent. In the case of a binary CPU, a bit refers to one significant place in the numbers a CPU deals with. The number of bits (or numeral places) a CPU uses to represent numbers is often called "word size", "bit width", "data path width", or "integer precision" when dealing with strictly integer numbers (as opposed to Floating point

). This number differs between architectures, and often within different parts of the very same CPU. For example, an 8-bit

CPU deals with a range of numbers that can be represented by eight binary digits (each digit having two possible values), that is, 28 or 256 discrete numbers. In effect, integer size sets a hardware limit on the range of integers the software run by the CPU can utilize.

Integer range can also affect the number of locations in memory the CPU can address (locate). For example, if a binary CPU uses 32 bits to represent a memory address, and each memory address represents one octet

(8 bits), the maximum quantity of memory that CPU can address is 232 octets, or 4 GiB

. This is a very simple view of CPU address space

, and many designs use more complex addressing methods like paging

in order to locate more memory than their integer range would allow with a flat address space.

Higher levels of integer range require more structures to deal with the additional digits, and therefore more complexity, size, power usage, and general expense. It is not at all uncommon, therefore, to see 4- or 8-bit microcontroller

s used in modern applications, even though CPUs with much higher range (such as 16, 32, 64, even 128-bit) are available. The simpler microcontrollers are usually cheaper, use less power, and therefore generate less heat, all of which can be major design considerations for electronic devices. However, in higher-end applications, the benefits afforded by the extra range (most often the additional address space) are more significant and often affect design choices. To gain some of the advantages afforded by both lower and higher bit lengths, many CPUs are designed with different bit widths for different portions of the device. For example, the IBM System/370

used a CPU that was primarily 32 bit, but it used 128-bit precision inside its floating point

units to facilitate greater accuracy and range in floating point numbers. Many later CPU designs use similar mixed bit width, especially when the processor is meant for general-purpose usage where a reasonable balance of integer and floating point capability is required.

Most CPUs, and indeed most sequential logic

devices, are synchronous

in nature. That is, they are designed and operate on assumptions about a synchronization signal. This signal, known as a clock signal

, usually takes the form of a periodic square wave

. By calculating the maximum time that electrical signals can move in various branches of a CPU's many circuits, the designers can select an appropriate period

for the clock signal.

This period must be longer than the amount of time it takes for a signal to move, or propagate, in the worst-case scenario. In setting the clock period to a value well above the worst-case propagation delay

, it is possible to design the entire CPU and the way it moves data around the "edges" of the rising and falling clock signal. This has the advantage of simplifying the CPU significantly, both from a design perspective and a component-count perspective. However, it also carries the disadvantage that the entire CPU must wait on its slowest elements, even though some portions of it are much faster. This limitation has largely been compensated for by various methods of increasing CPU parallelism. (see below)

However, architectural improvements alone do not solve all of the drawbacks of globally synchronous CPUs. For example, a clock signal is subject to the delays of any other electrical signal. Higher clock rates in increasingly complex CPUs make it more difficult to keep the clock signal in phase (synchronized) throughout the entire unit. This has led many modern CPUs to require multiple identical clock signals to be provided in order to avoid delaying a single signal significantly enough to cause the CPU to malfunction. Another major issue as clock rates increase dramatically is the amount of heat that is dissipated by the CPU. The constantly changing clock causes many components to switch regardless of whether they are being used at that time. In general, a component that is switching uses more energy than an element in a static state. Therefore, as clock rate increases, so does heat dissipation, causing the CPU to require more effective cooling solutions.

One method of dealing with the switching of unneeded components is called clock gating

, which involves turning off the clock signal to unneeded components (effectively disabling them). However, this is often regarded as difficult to implement and therefore does not see common usage outside of very low-power designs. One notable late CPU design that uses clock gating is that of the IBM PowerPC

-based Xbox 360

. It utilizes extensive clock gating in order to reduce the power requirements of the aforementioned videogame console in which it is used. Another method of addressing some of the problems with a global clock signal is the removal of the clock signal altogether. While removing the global clock signal makes the design process considerably more complex in many ways, asynchronous (or clockless) designs carry marked advantages in power consumption and heat dissipation in comparison with similar synchronous designs. While somewhat uncommon, entire asynchronous CPUs have been built without utilizing a global clock signal. Two notable examples of this are the ARM

compliant AMULET

and the MIPS

R3000 compatible MiniMIPS. Rather than totally removing the clock signal, some CPU designs allow certain portions of the device to be asynchronous, such as using asynchronous ALUs

in conjunction with superscalar pipelining to achieve some arithmetic performance gains. While it is not altogether clear whether totally asynchronous designs can perform at a comparable or better level than their synchronous counterparts, it is evident that they do at least excel in simpler math operations. This, combined with their excellent power consumption and heat dissipation properties, makes them very suitable for embedded computers.

The description of the basic operation of a CPU offered in the previous section describes the simplest form that a CPU can take. This type of CPU, usually referred to as subscalar, operates on and executes one instruction on one or two pieces of data at a time.

The description of the basic operation of a CPU offered in the previous section describes the simplest form that a CPU can take. This type of CPU, usually referred to as subscalar, operates on and executes one instruction on one or two pieces of data at a time.

This process gives rise to an inherent inefficiency in subscalar CPUs. Since only one instruction is executed at a time, the entire CPU must wait for that instruction to complete before proceeding to the next instruction. As a result, the subscalar CPU gets "hung up" on instructions which take more than one clock cycle to complete execution. Even adding a second execution unit (see below) does not improve performance much; rather than one pathway being hung up, now two pathways are hung up and the number of unused transistors is increased. This design, wherein the CPU's execution resources can operate on only one instruction at a time, can only possibly reach scalar performance (one instruction per clock). However, the performance is nearly always subscalar (less than one instruction per cycle).

Attempts to achieve scalar and better performance have resulted in a variety of design methodologies that cause the CPU to behave less linearly and more in parallel. When referring to parallelism in CPUs, two terms are generally used to classify these design techniques. Instruction level parallelism

(ILP) seeks to increase the rate at which instructions are executed within a CPU (that is, to increase the utilization of on-die execution resources), and thread level parallelism (TLP) purposes to increase the number of threads

(effectively individual programs) that a CPU can execute simultaneously. Each methodology differs both in the ways in which they are implemented, as well as the relative effectiveness they afford in increasing the CPU's performance for an application.

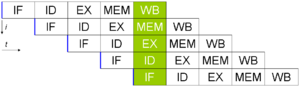

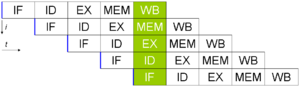

One of the simplest methods used to accomplish increased parallelism is to begin the first steps of instruction fetching and decoding before the prior instruction finishes executing. This is the simplest form of a technique known as instruction pipelining, and is utilized in almost all modern general-purpose CPUs. Pipelining allows more than one instruction to be executed at any given time by breaking down the execution pathway into discrete stages. This separation can be compared to an assembly line, in which an instruction is made more complete at each stage until it exits the execution pipeline and is retired.

One of the simplest methods used to accomplish increased parallelism is to begin the first steps of instruction fetching and decoding before the prior instruction finishes executing. This is the simplest form of a technique known as instruction pipelining, and is utilized in almost all modern general-purpose CPUs. Pipelining allows more than one instruction to be executed at any given time by breaking down the execution pathway into discrete stages. This separation can be compared to an assembly line, in which an instruction is made more complete at each stage until it exits the execution pipeline and is retired.

Pipelining does, however, introduce the possibility for a situation where the result of the previous operation is needed to complete the next operation; a condition often termed data dependency conflict. To cope with this, additional care must be taken to check for these sorts of conditions and delay a portion of the instruction pipeline if this occurs. Naturally, accomplishing this requires additional circuitry, so pipelined processors are more complex than subscalar ones (though not very significantly so). A pipelined processor can become very nearly scalar, inhibited only by pipeline stalls (an instruction spending more than one clock cycle in a stage).

Further improvement upon the idea of instruction pipelining led to the development of a method that decreases the idle time of CPU components even further. Designs that are said to be superscalar include a long instruction pipeline and multiple identical execution units. In a superscalar pipeline, multiple instructions are read and passed to a dispatcher, which decides whether or not the instructions can be executed in parallel (simultaneously). If so they are dispatched to available execution units, resulting in the ability for several instructions to be executed simultaneously. In general, the more instructions a superscalar CPU is able to dispatch simultaneously to waiting execution units, the more instructions will be completed in a given cycle.

Most of the difficulty in the design of a superscalar CPU architecture lies in creating an effective dispatcher. The dispatcher needs to be able to quickly and correctly determine whether instructions can be executed in parallel, as well as dispatch them in such a way as to keep as many execution units busy as possible. This requires that the instruction pipeline is filled as often as possible and gives rise to the need in superscalar architectures for significant amounts of CPU cache

. It also makes hazard

-avoiding techniques like branch prediction, speculative execution

, and out-of-order execution

crucial to maintaining high levels of performance. By attempting to predict which branch (or path) a conditional instruction will take, the CPU can minimize the number of times that the entire pipeline must wait until a conditional instruction is completed. Speculative execution often provides modest performance increases by executing portions of code that may not be needed after a conditional operation completes. Out-of-order execution somewhat rearranges the order in which instructions are executed to reduce delays due to data dependencies. Also in case of Single Instructions Multiple Data — a case when a lot of data from the same type has to be processed, modern processors can disable parts of the pipeline so that when a single instruction is executed many times, the CPU skips the fetch and decode phases and thus greatly increases performance on certain occasions, especially in highly monotonous program engines such as video creation software and photo processing.

In the case where a portion of the CPU is superscalar and part is not, the part which is not suffers a performance penalty due to scheduling stalls. The Intel P5

Pentium had two superscalar ALUs which could accept one instruction per clock each, but its FPU could not accept one instruction per clock. Thus the P5 was integer superscalar but not floating point superscalar. Intel's successor to the P5 architecture, P6

, added superscalar capabilities to its floating point features, and therefore afforded a significant increase in floating point instruction performance.

Both simple pipelining and superscalar design increase a CPU's ILP by allowing a single processor to complete execution of instructions at rates surpassing one instruction per cycle (IPC). Most modern CPU designs are at least somewhat superscalar, and nearly all general purpose CPUs designed in the last decade are superscalar. In later years some of the emphasis in designing high-ILP computers has been moved out of the CPU's hardware and into its software interface, or ISA

. The strategy of the very long instruction word

(VLIW) causes some ILP to become implied directly by the software, reducing the amount of work the CPU must perform to boost ILP and thereby reducing the design's complexity.

in parallel.

This area of research is known as parallel computing

. In Flynn's taxonomy

, this strategy is known as Multiple Instructions-Multiple Data or MIMD.

One technology used for this purpose was multiprocessing

(MP). The initial flavor of this technology is known as symmetric multiprocessing

(SMP), where a small number of CPUs share a coherent view of their memory system. In this scheme, each CPU has additional hardware to maintain a constantly up-to-date view of memory. By avoiding stale views of memory, the CPUs can cooperate on the same program and programs can migrate from one CPU to another. To increase the number of cooperating CPUs beyond a handful, schemes such as non-uniform memory access

(NUMA) and directory-based coherence protocols were introduced in the 1990s. SMP systems are limited to a small number of CPUs while NUMA systems have been built with thousands of processors. Initially, multiprocessing was built using multiple discrete CPUs and boards to implement the interconnect between the processors. When the processors and their interconnect are all implemented on a single silicon chip, the technology is known as a multi-core

microprocessor.

It was later recognized that finer-grain parallelism existed with a single program. A single program might have several threads (or functions) that could be executed separately or in parallel. Some of the earliest examples of this technology implemented input/output

processing such as direct memory access

as a separate thread from the computation thread. A more general approach to this technology was introduced in the 1970s when systems were designed to run multiple computation threads in parallel. This technology is known as multi-threading (MT). This approach is considered more cost-effective than multiprocessing, as only a small number of components within a CPU is replicated in order to support MT as opposed to the entire CPU in the case of MP. In MT, the execution units and the memory system including the caches are shared among multiple threads. The downside of MT is that the hardware support for multithreading is more visible to software than that of MP and thus supervisor software like operating systems have to undergo larger changes to support MT. One type of MT that was implemented is known as block multithreading, where one thread is executed until it is stalled waiting for data to return from external memory. In this scheme, the CPU would then quickly switch to another thread which is ready to run, the switch often done in one CPU clock cycle, such as the UltraSPARC

Technology. Another type of MT is known as simultaneous multithreading

, where instructions of multiple threads are executed in parallel within one CPU clock cycle.

For several decades from the 1970s to early 2000s, the focus in designing high performance general purpose CPUs was largely on achieving high ILP through technologies such as pipelining, caches, superscalar execution, out-of-order execution, etc. This trend culminated in large, power-hungry CPUs such as the Intel Pentium 4

. By the early 2000s, CPU designers were thwarted from achieving higher performance from ILP techniques due to the growing disparity between CPU operating frequencies and main memory operating frequencies as well as escalating CPU power dissipation owing to more esoteric ILP techniques.

CPU designers then borrowed ideas from commercial computing markets such as transaction processing

, where the aggregate performance of multiple programs, also known as throughput

computing, was more important than the performance of a single thread or program.

This reversal of emphasis is evidenced by the proliferation of dual and multiple core CMP (chip-level multiprocessing) designs and notably, Intel's newer designs resembling its less superscalar P6

architecture. Late designs in several processor families exhibit CMP, including the x86-64

Opteron

and Athlon 64 X2

, the SPARC

UltraSPARC T1

, IBM POWER4

and POWER5

, as well as several video game console

CPUs like the Xbox 360

's triple-core PowerPC design, and the PS3's 7-core Cell microprocessor

.

, these two schemes of dealing with data are generally referred to as SIMD

(single instruction, multiple data) and SISD

(single instruction, single data), respectively. The great utility in creating CPUs that deal with vectors of data lies in optimizing tasks that tend to require the same operation (for example, a sum or a dot product

) to be performed on a large set of data. Some classic examples of these types of tasks are multimedia

applications (images, video, and sound), as well as many types of scientific and engineering tasks. Whereas a scalar CPU must complete the entire process of fetching, decoding, and executing each instruction and value in a set of data, a vector CPU can perform a single operation on a comparatively large set of data with one instruction. Of course, this is only possible when the application tends to require many steps which apply one operation to a large set of data.

Most early vector CPUs, such as the Cray-1

, were associated almost exclusively with scientific research and cryptography

applications. However, as multimedia has largely shifted to digital media, the need for some form of SIMD in general-purpose CPUs has become significant. Shortly after inclusion of floating point execution units

started to become commonplace in general-purpose processors, specifications for and implementations of SIMD execution units also began to appear for general-purpose CPUs. Some of these early SIMD specifications like HP's Multimedia Acceleration eXtensions

(MAX) and Intel's MMX were integer-only. This proved to be a significant impediment for some software developers, since many of the applications that benefit from SIMD primarily deal with floating point

numbers. Progressively, these early designs were refined and remade into some of the common, modern SIMD specifications, which are usually associated with one ISA. Some notable modern examples are Intel's SSE

and the PowerPC-related AltiVec

(also known as VMX).

) and the instructions per clock (IPC), which together are the factors for the instructions per second

(IPS) that the CPU can perform.

Many reported IPS values have represented "peak" execution rates on artificial instruction sequences with few branches, whereas realistic workloads consist of a mix of instructions and applications, some of which take longer to execute than others. The performance of the memory hierarchy

also greatly affects processor performance, an issue barely considered in MIPS calculations. Because of these problems, various standardized tests, often called "benchmarks"

for this purpose—such as SPECint

-- have been developed to attempt to measure the real effective performance in commonly used applications.

Processing performance of computers is increased by using multi-core processors, which essentially is plugging two or more individual processors (called cores in this sense) into one integrated circuit

. Ideally, a dual core processor would be nearly twice as powerful as a single core processor. In practice, however, the performance gain is far less, only about 50%, due to imperfect software algorithms and implementation.

Further reading

Computer

A computer is a programmable machine designed to sequentially and automatically carry out a sequence of arithmetic or logical operations. The particular sequence of operations can be changed readily, allowing the computer to solve more than one kind of problem...

system that carries out the instructions of a computer program

Computer program

A computer program is a sequence of instructions written to perform a specified task with a computer. A computer requires programs to function, typically executing the program's instructions in a central processor. The program has an executable form that the computer can use directly to execute...

, to perform the basic arithmetical, logical, and input/output

Input/output

In computing, input/output, or I/O, refers to the communication between an information processing system , and the outside world, possibly a human, or another information processing system. Inputs are the signals or data received by the system, and outputs are the signals or data sent from it...

operations of the system. The CPU plays a role somewhat analogous to the brain

Human brain

The human brain has the same general structure as the brains of other mammals, but is over three times larger than the brain of a typical mammal with an equivalent body size. Estimates for the number of neurons in the human brain range from 80 to 120 billion...

in the computer. The term has been in use in the computer industry at least since the early 1960s. The form, design and implementation of CPUs have changed dramatically since the earliest examples, but their fundamental operation remains much the same.

On large machines, CPUs require one or more printed circuit board

Printed circuit board

A printed circuit board, or PCB, is used to mechanically support and electrically connect electronic components using conductive pathways, tracks or signal traces etched from copper sheets laminated onto a non-conductive substrate. It is also referred to as printed wiring board or etched wiring...

s. On personal computers and small workstations, the CPU is housed in a single silicon chip

Silicon Chip

Silicon Chip is an Australian electronics magazine. It was started in November, 1987 by Leo Simpson. Following the demise of Electronics Australia, it is the only hobbyist-related electronics magazine remaining in Australia.- Magazine :...

called a microprocessor

Microprocessor

A microprocessor incorporates the functions of a computer's central processing unit on a single integrated circuit, or at most a few integrated circuits. It is a multipurpose, programmable device that accepts digital data as input, processes it according to instructions stored in its memory, and...

. Since the 1970s the microprocessor class of CPUs has almost completely overtaken all other CPU implementations. Modern CPUs are large scale integrated circuit

Integrated circuit

An integrated circuit or monolithic integrated circuit is an electronic circuit manufactured by the patterned diffusion of trace elements into the surface of a thin substrate of semiconductor material...

s in packages typically less than four centimeters square, with hundreds of connecting pins.

Two typical components of a CPU are the arithmetic logic unit

Arithmetic logic unit

In computing, an arithmetic logic unit is a digital circuit that performs arithmetic and logical operations.The ALU is a fundamental building block of the central processing unit of a computer, and even the simplest microprocessors contain one for purposes such as maintaining timers...

(ALU), which performs arithmetic and logical operations, and the control unit

Control unit

A control unit in general is a central part of the machinery that controls its operation, provided that a piece of machinery is complex and organized enough to contain any such unit. One domain in which the term is specifically used is the area of computer design...

(CU), which extracts instructions from memory and decodes and executes them, calling on the ALU when necessary.

Not all computational systems rely on a central processing unit. An array processor or vector processor

Vector processor

A vector processor, or array processor, is a central processing unit that implements an instruction set containing instructions that operate on one-dimensional arrays of data called vectors. This is in contrast to a scalar processor, whose instructions operate on single data items...

has multiple parallel computing elements, with no one unit considered the "center". In the distributed computing

Distributed computing

Distributed computing is a field of computer science that studies distributed systems. A distributed system consists of multiple autonomous computers that communicate through a computer network. The computers interact with each other in order to achieve a common goal...

model, problems are solved by a distributed interconnected set of processors.

History

ENIAC

ENIAC was the first general-purpose electronic computer. It was a Turing-complete digital computer capable of being reprogrammed to solve a full range of computing problems....

had to be physically rewired in order to perform different tasks, which caused these machines to be called "fixed-program computers." Since the term "CPU" is generally defined as a software (computer program) execution device, the earliest devices that could rightly be called CPUs came with the advent of the stored-program computer.

The idea of a stored-program computer was already present in the design of J. Presper Eckert

J. Presper Eckert

John Adam Presper "Pres" Eckert Jr. was an American electrical engineer and computer pioneer. With John Mauchly he invented the first general-purpose electronic digital computer , presented the first course in computing topics , founded the first commercial computer company , and...

and John William Mauchly's ENIAC, but was initially omitted so that it could be finished sooner. On June 30, 1945, before ENIAC was made, mathematician John von Neumann

John von Neumann

John von Neumann was a Hungarian-American mathematician and polymath who made major contributions to a vast number of fields, including set theory, functional analysis, quantum mechanics, ergodic theory, geometry, fluid dynamics, economics and game theory, computer science, numerical analysis,...

distributed the paper entitled First Draft of a Report on the EDVAC

First Draft of a Report on the EDVAC

The First Draft of a Report on the EDVAC was an incomplete 101-page document written by John von Neumann and distributed on June 30, 1945 by Herman Goldstine, security officer on the classified ENIAC project...

. It was the outline of a stored-program computer that would eventually be completed in August 1949. EDVAC was designed to perform a certain number of instructions (or operations) of various types. These instructions could be combined to create useful programs for the EDVAC to run. Significantly, the programs written for EDVAC were stored in high-speed computer memory rather than specified by the physical wiring of the computer. This overcame a severe limitation of ENIAC, which was the considerable time and effort required to reconfigure the computer to perform a new task. With von Neumann's design, the program, or software, that EDVAC ran could be changed simply by changing the contents of the memory.

Early CPUs were custom-designed as a part of a larger, sometimes one-of-a-kind, computer. However, this method of designing custom CPUs for a particular application has largely given way to the development of mass-produced processors that are made for many purposes. This standardization began in the era of discrete transistor

Transistor

A transistor is a semiconductor device used to amplify and switch electronic signals and power. It is composed of a semiconductor material with at least three terminals for connection to an external circuit. A voltage or current applied to one pair of the transistor's terminals changes the current...

mainframes

Mainframe computer

Mainframes are powerful computers used primarily by corporate and governmental organizations for critical applications, bulk data processing such as census, industry and consumer statistics, enterprise resource planning, and financial transaction processing.The term originally referred to the...

and minicomputer

Minicomputer

A minicomputer is a class of multi-user computers that lies in the middle range of the computing spectrum, in between the largest multi-user systems and the smallest single-user systems...

s and has rapidly accelerated with the popularization of the integrated circuit

Integrated circuit

An integrated circuit or monolithic integrated circuit is an electronic circuit manufactured by the patterned diffusion of trace elements into the surface of a thin substrate of semiconductor material...

(IC). The IC has allowed increasingly complex CPUs to be designed and manufactured to tolerances on the order of nanometers. Both the miniaturization and standardization of CPUs have increased the presence of digital devices in modern life far beyond the limited application of dedicated computing machines. Modern microprocessors appear in everything from automobile

Automobile

An automobile, autocar, motor car or car is a wheeled motor vehicle used for transporting passengers, which also carries its own engine or motor...

s to cell phones and children's toys.

While von Neumann is most often credited with the design of the stored-program computer because of his design of EDVAC, others before him, such as Konrad Zuse

Konrad Zuse

Konrad Zuse was a German civil engineer and computer pioneer. His greatest achievement was the world's first functional program-controlled Turing-complete computer, the Z3, which became operational in May 1941....

, had suggested and implemented similar ideas. The so-called Harvard architecture

Harvard architecture

The Harvard architecture is a computer architecture with physically separate storage and signal pathways for instructions and data. The term originated from the Harvard Mark I relay-based computer, which stored instructions on punched tape and data in electro-mechanical counters...

of the Harvard Mark I

Harvard Mark I

The IBM Automatic Sequence Controlled Calculator , called the Mark I by Harvard University, was an electro-mechanical computer....

, which was completed before EDVAC, also utilized a stored-program design using punched paper tape

Punched tape

Punched tape or paper tape is an obsolete form of data storage, consisting of a long strip of paper in which holes are punched to store data...

rather than electronic memory. The key difference between the von Neumann and Harvard architectures is that the latter separates the storage and treatment of CPU instructions and data, while the former uses the same memory space for both. Most modern CPUs are primarily von Neumann in design, but elements of the Harvard architecture are commonly seen as well.

Relay

Relay

A relay is an electrically operated switch. Many relays use an electromagnet to operate a switching mechanism mechanically, but other operating principles are also used. Relays are used where it is necessary to control a circuit by a low-power signal , or where several circuits must be controlled...

s and vacuum tube

Vacuum tube

In electronics, a vacuum tube, electron tube , or thermionic valve , reduced to simply "tube" or "valve" in everyday parlance, is a device that relies on the flow of electric current through a vacuum...

s (thermionic valves) were commonly used as switching elements; a useful computer requires thousands or tens of thousands of switching devices. The overall speed of a system is dependent on the speed of the switches. Tube computers like EDVAC

EDVAC

EDVAC was one of the earliest electronic computers. Unlike its predecessor the ENIAC, it was binary rather than decimal, and was a stored program computer....

tended to average eight hours between failures, whereas relay computers like the (slower, but earlier) Harvard Mark I

Harvard Mark I

The IBM Automatic Sequence Controlled Calculator , called the Mark I by Harvard University, was an electro-mechanical computer....

failed very rarely. In the end, tube based CPUs became dominant because the significant speed advantages afforded generally outweighed the reliability problems. Most of these early synchronous CPUs ran at low clock rate

Clock rate

The clock rate typically refers to the frequency that a CPU is running at.For example, a crystal oscillator frequency reference typically is synonymous with a fixed sinusoidal waveform, a clock rate is that frequency reference translated by electronic circuitry into a corresponding square wave...

s compared to modern microelectronic designs (see below for a discussion of clock rate). Clock signal frequencies ranging from 100 kHz

Hertz

The hertz is the SI unit of frequency defined as the number of cycles per second of a periodic phenomenon. One of its most common uses is the description of the sine wave, particularly those used in radio and audio applications....

to 4 MHz were very common at this time, limited largely by the speed of the switching devices they were built with.

The control unit

The control unit of the CPU contains circuitry that uses electrical signals to direct the entire computer system to carry out stored program instructions. The control unit does not execute program instructions; rather, it directs other parts of the system to do so. The control unit must communicate with both the arithmetic/logic unit and memory.Discrete transistor and integrated circuit CPUs

Transistor

A transistor is a semiconductor device used to amplify and switch electronic signals and power. It is composed of a semiconductor material with at least three terminals for connection to an external circuit. A voltage or current applied to one pair of the transistor's terminals changes the current...

. Transistorized CPUs during the 1950s and 1960s no longer had to be built out of bulky, unreliable, and fragile switching elements like vacuum tube

Vacuum tube

In electronics, a vacuum tube, electron tube , or thermionic valve , reduced to simply "tube" or "valve" in everyday parlance, is a device that relies on the flow of electric current through a vacuum...

s and electrical relays

Relay

A relay is an electrically operated switch. Many relays use an electromagnet to operate a switching mechanism mechanically, but other operating principles are also used. Relays are used where it is necessary to control a circuit by a low-power signal , or where several circuits must be controlled...

. With this improvement more complex and reliable CPUs were built onto one or several printed circuit board

Printed circuit board

A printed circuit board, or PCB, is used to mechanically support and electrically connect electronic components using conductive pathways, tracks or signal traces etched from copper sheets laminated onto a non-conductive substrate. It is also referred to as printed wiring board or etched wiring...

s containing discrete (individual) components.

During this period, a method of manufacturing many transistors in a compact space gained popularity. The integrated circuit

Integrated circuit

An integrated circuit or monolithic integrated circuit is an electronic circuit manufactured by the patterned diffusion of trace elements into the surface of a thin substrate of semiconductor material...

(IC) allowed a large number of transistors to be manufactured on a single semiconductor

Semiconductor

A semiconductor is a material with electrical conductivity due to electron flow intermediate in magnitude between that of a conductor and an insulator. This means a conductivity roughly in the range of 103 to 10−8 siemens per centimeter...

-based die

Die (integrated circuit)

A die in the context of integrated circuits is a small block of semiconducting material, on which a given functional circuit is fabricated.Typically, integrated circuits are produced in large batches on a single wafer of electronic-grade silicon or other semiconductor through processes such as...

, or "chip." At first only very basic non-specialized digital circuits such as NOR gate

NOR gate

The NOR gate is a digital logic gate that implements logical NOR - it behaves according to the truth table to the right. A HIGH output results if both the inputs to the gate are LOW . If one or both input is HIGH , a LOW output results. NOR is the result of the negation of the OR operator...

s were miniaturized into ICs. CPUs based upon these "building block" ICs are generally referred to as "small-scale integration" (SSI) devices. SSI ICs, such as the ones used in the Apollo guidance computer

Apollo Guidance Computer

The Apollo Guidance Computer provided onboard computation and control for guidance, navigation, and control of the Command Module and Lunar Module spacecraft of the Apollo program...

, usually contained up to a few score transistors. To build an entire CPU out of SSI ICs required thousands of individual chips, but still consumed much less space and power than earlier discrete transistor designs. As microelectronic technology advanced, an increasing number of transistors were placed on ICs, thus decreasing the quantity of individual ICs needed for a complete CPU. MSI and LSI (medium- and large-scale integration) ICs increased transistor counts to hundreds, and then thousands.

In 1964 IBM

IBM

International Business Machines Corporation or IBM is an American multinational technology and consulting corporation headquartered in Armonk, New York, United States. IBM manufactures and sells computer hardware and software, and it offers infrastructure, hosting and consulting services in areas...

introduced its System/360

System/360

The IBM System/360 was a mainframe computer system family first announced by IBM on April 7, 1964, and sold between 1964 and 1978. It was the first family of computers designed to cover the complete range of applications, from small to large, both commercial and scientific...

computer architecture which was used in a series of computers that could run the same programs with different speed and performance. This was significant at a time when most electronic computers were incompatible with one another, even those made by the same manufacturer. To facilitate this improvement, IBM utilized the concept of a microprogram (often called "microcode"), which still sees widespread usage in modern CPUs. The System/360 architecture was so popular that it dominated the mainframe computer

Mainframe computer

Mainframes are powerful computers used primarily by corporate and governmental organizations for critical applications, bulk data processing such as census, industry and consumer statistics, enterprise resource planning, and financial transaction processing.The term originally referred to the...

market for decades and left a legacy that is still continued by similar modern computers like the IBM zSeries

ZSeries

IBM System z, or earlier IBM eServer zSeries, is a brand name designated by IBM to all its mainframe computers.In 2000, IBM rebranded the existing System/390 to IBM eServer zSeries with the e depicted in IBM's red trademarked symbol, but because no specific machine names were changed for...

. In the same year (1964), Digital Equipment Corporation

Digital Equipment Corporation

Digital Equipment Corporation was a major American company in the computer industry and a leading vendor of computer systems, software and peripherals from the 1960s to the 1990s...

(DEC) introduced another influential computer aimed at the scientific and research markets, the PDP-8

PDP-8

The 12-bit PDP-8 was the first successful commercial minicomputer, produced by Digital Equipment Corporation in the 1960s. DEC introduced it on 22 March 1965, and sold more than 50,000 systems, the most of any computer up to that date. It was the first widely sold computer in the DEC PDP series of...

. DEC would later introduce the extremely popular PDP-11

PDP-11

The PDP-11 was a series of 16-bit minicomputers sold by Digital Equipment Corporation from 1970 into the 1990s, one of a succession of products in the PDP series. The PDP-11 replaced the PDP-8 in many real-time applications, although both product lines lived in parallel for more than 10 years...

line that originally was built with SSI ICs but was eventually implemented with LSI components once these became practical. In stark contrast with its SSI and MSI predecessors, the first LSI implementation of the PDP-11 contained a CPU composed of only four LSI integrated circuits.

Transistor-based computers had several distinct advantages over their predecessors. Aside from facilitating increased reliability and lower power consumption, transistors also allowed CPUs to operate at much higher speeds because of the short switching time of a transistor in comparison to a tube or relay. Thanks to both the increased reliability as well as the dramatically increased speed of the switching elements (which were almost exclusively transistors by this time), CPU clock rates in the tens of megahertz were obtained during this period. Additionally while discrete transistor and IC CPUs were in heavy usage, new high-performance designs like SIMD

SIMD

Single instruction, multiple data , is a class of parallel computers in Flynn's taxonomy. It describes computers with multiple processing elements that perform the same operation on multiple data simultaneously...

(Single Instruction Multiple Data) vector processor

Vector processor

A vector processor, or array processor, is a central processing unit that implements an instruction set containing instructions that operate on one-dimensional arrays of data called vectors. This is in contrast to a scalar processor, whose instructions operate on single data items...

s began to appear. These early experimental designs later gave rise to the era of specialized supercomputer

Supercomputer

A supercomputer is a computer at the frontline of current processing capacity, particularly speed of calculation.Supercomputers are used for highly calculation-intensive tasks such as problems including quantum physics, weather forecasting, climate research, molecular modeling A supercomputer is a...

s like those made by Cray Inc.

Microprocessors

In the 1970s the fundamental inventions by Federico FagginFederico Faggin

Federico Faggin , who received in 2010 the National Medal of Technology and Innovation by Barack Obama, the highest honor bestowed by the United States government on scientists, engineers, and inventors, at the White House in Washington, is an Italian-born and naturalized U.S...

(Silicon Gate MOS ICs with self aligned gates along with his new random logic design methodology) changed the design and implementation of CPUs forever. Since the introduction of the first commercially available microprocessor (the Intel 4004

Intel 4004

The Intel 4004 was a 4-bit central processing unit released by Intel Corporation in 1971. It was the first complete CPU on one chip, and also the first commercially available microprocessor...

), in 1970 and the first widely used microprocessor

Microprocessor

A microprocessor incorporates the functions of a computer's central processing unit on a single integrated circuit, or at most a few integrated circuits. It is a multipurpose, programmable device that accepts digital data as input, processes it according to instructions stored in its memory, and...

(the Intel 8080

Intel 8080

The Intel 8080 was the second 8-bit microprocessor designed and manufactured by Intel and was released in April 1974. It was an extended and enhanced variant of the earlier 8008 design, although without binary compatibility...

) in 1974, this class of CPUs has almost completely overtaken all other central processing unit implementation methods. Mainframe and minicomputer manufacturers of the time launched proprietary IC development programs to upgrade their older computer architecture

Computer architecture

In computer science and engineering, computer architecture is the practical art of selecting and interconnecting hardware components to create computers that meet functional, performance and cost goals and the formal modelling of those systems....

s, and eventually produced instruction set

Instruction set

An instruction set, or instruction set architecture , is the part of the computer architecture related to programming, including the native data types, instructions, registers, addressing modes, memory architecture, interrupt and exception handling, and external I/O...

compatible microprocessors that were backward-compatible with their older hardware and software. Combined with the advent and eventual vast success of the now ubiquitous personal computer

Personal computer

A personal computer is any general-purpose computer whose size, capabilities, and original sales price make it useful for individuals, and which is intended to be operated directly by an end-user with no intervening computer operator...

, the term CPU is now applied almost exclusively to microprocessors. Several CPUs can be combined in a single processing chip.

Previous generations of CPUs were implemented as discrete components and numerous small integrated circuit

Integrated circuit

An integrated circuit or monolithic integrated circuit is an electronic circuit manufactured by the patterned diffusion of trace elements into the surface of a thin substrate of semiconductor material...

s (ICs) on one or more circuit boards. Microprocessors, on the other hand, are CPUs manufactured on a very small number of ICs; usually just one. The overall smaller CPU size as a result of being implemented on a single die means faster switching time because of physical factors like decreased gate parasitic capacitance

Parasitic capacitance

In electrical circuits, parasitic capacitance, stray capacitance or, when relevant, self-capacitance , is an unavoidable and usually unwanted capacitance that exists between the parts of an electronic component or circuit simply because of their proximity to each other...

. This has allowed synchronous microprocessors to have clock rates ranging from tens of megahertz to several gigahertz. Additionally, as the ability to construct exceedingly small transistors on an IC has increased, the complexity and number of transistors in a single CPU has increased dramatically. This widely observed trend is described by Moore's law

Moore's Law

Moore's law describes a long-term trend in the history of computing hardware: the number of transistors that can be placed inexpensively on an integrated circuit doubles approximately every two years....

, which has proven to be a fairly accurate predictor of the growth of CPU (and other IC) complexity to date.

While the complexity, size, construction, and general form of CPUs have changed drastically over the past sixty years, it is notable that the basic design and function has not changed much at all. Almost all common CPUs today can be very accurately described as von Neumann stored-program machines. As the aforementioned Moore's law continues to hold true, concerns have arisen about the limits of integrated circuit transistor technology. Extreme miniaturization of electronic gates is causing the effects of phenomena like electromigration

Electromigration

Electromigration is the transport of material caused by the gradual movement of the ions in a conductor due to the momentum transfer between conducting electrons and diffusing metal atoms. The effect is important in applications where high direct current densities are used, such as in...

and subthreshold leakage

Subthreshold leakage

The Subthreshold conduction or the subthreshold leakage or the subthreshold drain current is the current that flows between the source and drain of a MOSFET when the transistor is in subthreshold region, or weak-inversion region, that is, for gate-to-source voltages below the threshold voltage. The...

to become much more significant. These newer concerns are among the many factors causing researchers to investigate new methods of computing such as the quantum computer

Quantum computer

A quantum computer is a device for computation that makes direct use of quantum mechanical phenomena, such as superposition and entanglement, to perform operations on data. Quantum computers are different from traditional computers based on transistors...

, as well as to expand the usage of parallelism

Parallel computing

Parallel computing is a form of computation in which many calculations are carried out simultaneously, operating on the principle that large problems can often be divided into smaller ones, which are then solved concurrently . There are several different forms of parallel computing: bit-level,...

and other methods that extend the usefulness of the classical von Neumann model.

Operation

The fundamental operation of most CPUs, regardless of the physical form they take, is to execute a sequence of stored instructions called a program. The program is represented by a series of numbers that are kept in some kind of computer memory. There are four steps that nearly all CPUs use in their operation: fetch, decode, execute, and writeback.The first step, fetch, involves retrieving an instruction (which is represented by a number or sequence of numbers) from program memory. The location in program memory is determined by a program counter

Program counter

The program counter , commonly called the instruction pointer in Intel x86 microprocessors, and sometimes called the instruction address register, or just part of the instruction sequencer in some computers, is a processor register that indicates where the computer is in its instruction sequence...

(PC), which stores a number that identifies the current position in the program. After an instruction is fetched, the PC is incremented by the length of the instruction word in terms of memory units. Often, the instruction to be fetched must be retrieved from relatively slow memory, causing the CPU to stall while waiting for the instruction to be returned. This issue is largely addressed in modern processors by caches and pipeline architectures (see below).

The instruction that the CPU fetches from memory is used to determine what the CPU is to do. In the decode step, the instruction is broken up into parts that have significance to other portions of the CPU. The way in which the numerical instruction value is interpreted is defined by the CPU's instruction set architecture (ISA). Often, one group of numbers in the instruction, called the opcode, indicates which operation to perform. The remaining parts of the number usually provide information required for that instruction, such as operands for an addition operation. Such operands may be given as a constant value (called an immediate value), or as a place to locate a value: a register

Processor register

In computer architecture, a processor register is a small amount of storage available as part of a CPU or other digital processor. Such registers are addressed by mechanisms other than main memory and can be accessed more quickly...

or a memory address, as determined by some addressing mode

Addressing mode

Addressing modes are an aspect of the instruction set architecture in most central processing unit designs. The various addressing modes that are defined in a given instruction set architecture define how machine language instructions in that architecture identify the operand of each instruction...

. In older designs the portions of the CPU responsible for instruction decoding were unchangeable hardware devices. However, in more abstract and complicated CPUs and ISAs, a microprogram is often used to assist in translating instructions into various configuration signals for the CPU. This microprogram is sometimes rewritable so that it can be modified to change the way the CPU decodes instructions even after it has been manufactured.

After the fetch and decode steps, the execute step is performed. During this step, various portions of the CPU are connected so they can perform the desired operation. If, for instance, an addition operation was requested, the arithmetic logic unit

Arithmetic logic unit

In computing, an arithmetic logic unit is a digital circuit that performs arithmetic and logical operations.The ALU is a fundamental building block of the central processing unit of a computer, and even the simplest microprocessors contain one for purposes such as maintaining timers...

(ALU) will be connected to a set of inputs and a set of outputs. The inputs provide the numbers to be added, and the outputs will contain the final sum. The ALU contains the circuitry to perform simple arithmetic and logical operations on the inputs (like addition and bitwise operations). If the addition operation produces a result too large for the CPU to handle, an arithmetic overflow flag in a flags register may also be set.

The final step, writeback, simply "writes back" the results of the execute step to some form of memory. Very often the results are written to some internal CPU register for quick access by subsequent instructions. In other cases results may be written to slower, but cheaper and larger, main memory. Some types of instructions manipulate the program counter rather than directly produce result data. These are generally called "jumps" and facilitate behavior like loops, conditional program execution (through the use of a conditional jump), and functions

Subroutine

In computer science, a subroutine is a portion of code within a larger program that performs a specific task and is relatively independent of the remaining code....

in programs. Many instructions will also change the state of digits in a "flags" register. These flags can be used to influence how a program behaves, since they often indicate the outcome of various operations. For example, one type of "compare" instruction considers two values and sets a number in the flags register according to which one is greater. This flag could then be used by a later jump instruction to determine program flow.

After the execution of the instruction and writeback of the resulting data, the entire process repeats, with the next instruction cycle

Instruction cycle

An instruction cycle is the basic operation cycle of a computer. It is the process by which a computer retrieves a program instruction from its memory, determines what actions the instruction requires, and carries out those actions...

normally fetching the next-in-sequence instruction because of the incremented value in the program counter. If the completed instruction was a jump, the program counter will be modified to contain the address of the instruction that was jumped to, and program execution continues normally. In more complex CPUs than the one described here, multiple instructions can be fetched, decoded, and executed simultaneously. This section describes what is generally referred to as the "classic RISC pipeline

Classic RISC pipeline

In the history of computer hardware, some early reduced instruction set computer central processing units used a very similar architectural solution, now called a classic RISC pipeline. Those CPUs were: MIPS, SPARC, Motorola 88000, and later DLX....

", which in fact is quite common among the simple CPUs used in many electronic devices (often called microcontroller). It largely ignores the important role of CPU cache

CPU cache

A CPU cache is a cache used by the central processing unit of a computer to reduce the average time to access memory. The cache is a smaller, faster memory which stores copies of the data from the most frequently used main memory locations...

, and therefore the access stage of the pipeline.

Design and implementation

The basic concept of a CPU is as follows:Hardwired into a CPU's design is a list of basic operations it can perform, called an instruction set

Instruction set

An instruction set, or instruction set architecture , is the part of the computer architecture related to programming, including the native data types, instructions, registers, addressing modes, memory architecture, interrupt and exception handling, and external I/O...

. Such operations may include adding or subtracting two numbers, comparing numbers, or jumping to a different part of a program. Each of these basic operations is represented by a particular sequence of bits

Bit

A bit is the basic unit of information in computing and telecommunications; it is the amount of information stored by a digital device or other physical system that exists in one of two possible distinct states...

; this sequence is called the opcode

Opcode

In computer science engineering, an opcode is the portion of a machine language instruction that specifies the operation to be performed. Their specification and format are laid out in the instruction set architecture of the processor in question...

for that particular operation. Sending a particular opcode to a CPU will cause it to perform the operation represented by that opcode. To execute an instruction in a computer program, the CPU uses the opcode for that instruction as well as its arguments (for instance the two numbers to be added, in the case of an addition operation). A computer program

Computer program