Levenberg-Marquardt algorithm

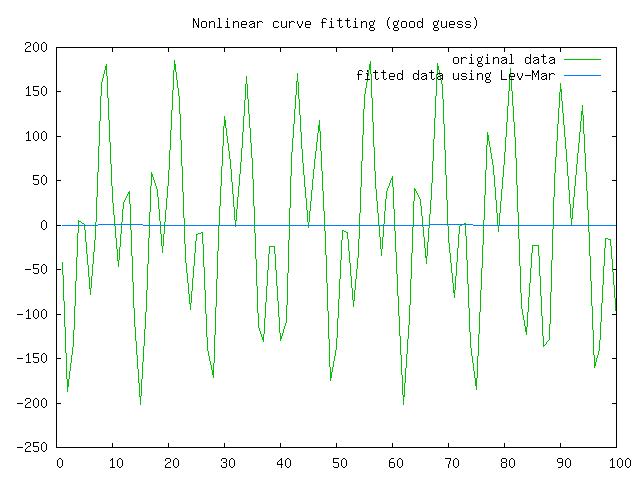

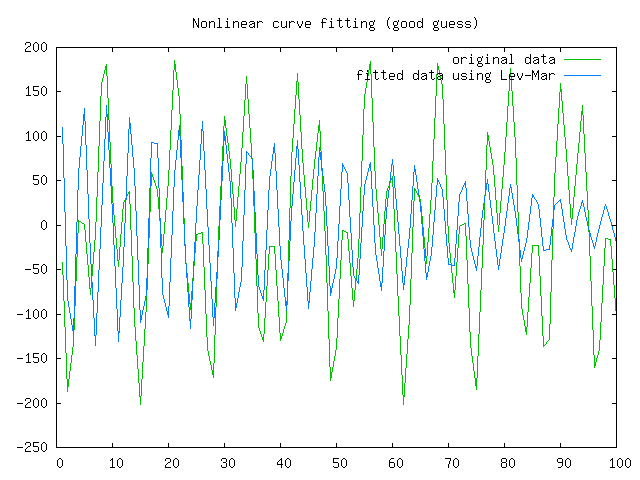

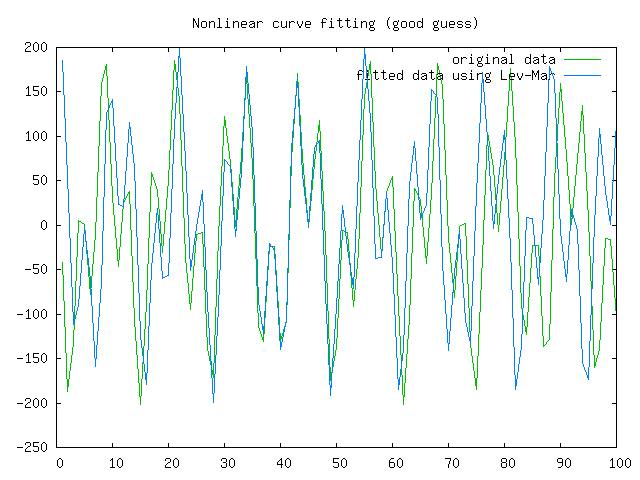

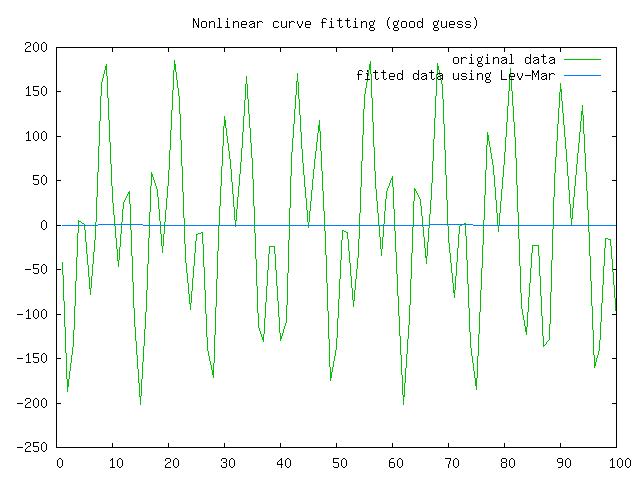

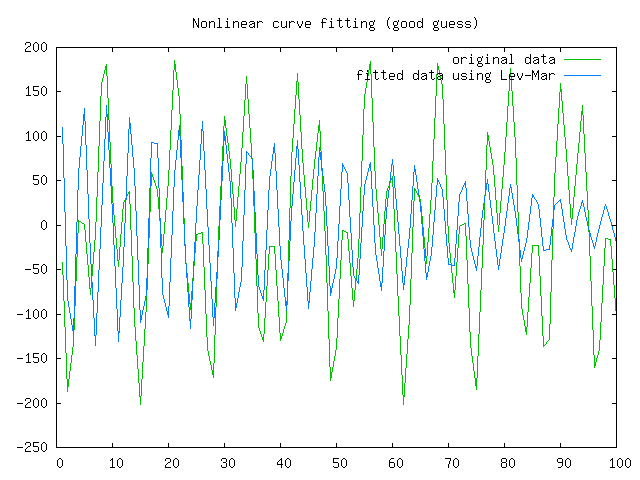

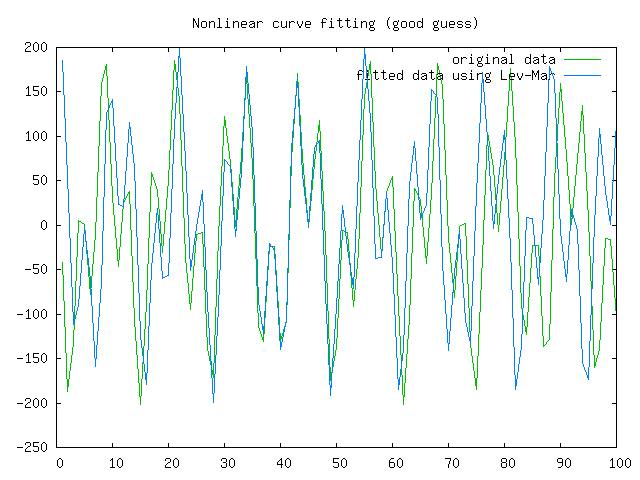


In mathematics and computing, the Levenberg–Marquardt algorithm (LMA) provides a numerical solution to the problem of minimizing a function, generally nonlinear, over a space of parameters of the function. These minimization problems arise especially in least squares curve fitting and nonlinear programming.

The LMA interpolates between the Gauss–Newton algorithm (GNA) and the method of gradient descent. The LMA is more robust than the GNA, which means that in many cases it finds a solution even if it starts very far from the final minimum. For well-behaved functions and reasonable starting parameters, the LMA tends to be a bit slower than the GNA. The LMA can also be viewed as Gauss–Newton using a trust region approach.

The LMA is a very popular curve-fitting algorithm used in many software applications for solving generic curve-fitting problems. However, the LMA finds only a local minimum, not a global minimum.

The problem

The primary application of the Levenberg–Marquardt algorithm is in the least squares curve fitting problem: given a set of m empirical data pairs of independent and dependent variables, $(x_i, y_i)$, optimize the parameters β of the model curve f(x, β) so that the sum of the squares of the deviations

$$S(\beta) = \sum_{i=1}^{m} \left[y_i - f(x_i, \beta)\right]^2$$

becomes minimal.
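As a small illustration of the quantity being minimized, the sketch below evaluates the sum of squared deviations for a made-up model. The exponential model, the data and the names `model` and `sum_of_squares` are assumptions chosen for the example, not part of the algorithm's definition.

```python
import math

def model(x, beta):
    """Hypothetical model curve f(x, beta) = beta[0] * exp(beta[1] * x)."""
    return beta[0] * math.exp(beta[1] * x)

def sum_of_squares(xs, ys, beta):
    """S(beta): sum of squared deviations between the data and the model."""
    return sum((y - model(x, beta)) ** 2 for x, y in zip(xs, ys))

# Synthetic data generated from beta = (2.0, 0.5), so S vanishes there.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [model(x, (2.0, 0.5)) for x in xs]

s_true = sum_of_squares(xs, ys, (2.0, 0.5))   # at the generating parameters
s_off = sum_of_squares(xs, ys, (1.0, 1.0))    # at a poor guess
```

Here `s_true` is zero because the data were generated without noise, while any other parameter vector gives a positive value.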

The solution

Like other numeric minimization algorithms, the Levenberg–Marquardt algorithm is an iterative procedure. To start a minimization, the user has to provide an initial guess for the parameter vector, β. In cases with only one minimum, an uninformed standard guess like $\beta^T = (1, 1, \dots, 1)$ will work fine; in cases with multiple minima, the algorithm converges only if the initial guess is already somewhat close to the final solution.

In each iteration step, the parameter vector, β, is replaced by a new estimate, β + δ. To determine δ, the functions $f(x_i, \beta + \delta)$ are approximated by their linearizations

$$f(x_i, \beta + \delta) \approx f(x_i, \beta) + J_i \delta$$

where

$$J_i = \frac{\partial f(x_i, \beta)}{\partial \beta}$$

is the gradient (row-vector in this case) of f with respect to β.
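To make the linearization concrete, the following sketch compares the exact value of a model at β + δ with its first-order approximation. The exponential model and the names `jacobian_row` and `linearized` are illustrative assumptions.

```python
import math

def model(x, beta):
    """Hypothetical model f(x, beta) = beta[0] * exp(beta[1] * x)."""
    return beta[0] * math.exp(beta[1] * x)

def jacobian_row(x, beta):
    """Analytic gradient (df/dbeta0, df/dbeta1) at one data point."""
    e = math.exp(beta[1] * x)
    return (e, beta[0] * x * e)

def linearized(x, beta, delta):
    """First-order approximation f(x, beta) + J_i . delta."""
    j = jacobian_row(x, beta)
    return model(x, beta) + j[0] * delta[0] + j[1] * delta[1]

beta = (2.0, 0.5)
delta = (0.01, -0.02)
x = 1.5

exact = model(x, (beta[0] + delta[0], beta[1] + delta[1]))
approx = linearized(x, beta, delta)
error = abs(exact - approx)   # second order in delta, so small for small steps
```

The approximation error shrinks quadratically with the size of δ, which is why the linear model is only trusted for sufficiently small steps.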

At the minimum of the sum of squares, S(β), the gradient of S with respect to δ will be zero. The above first-order approximation of $f(x_i, \beta + \delta)$ gives

$$S(\beta + \delta) \approx \sum_{i=1}^{m} \left(y_i - f(x_i, \beta) - J_i \delta\right)^2.$$

Or in vector notation,

$$S(\beta + \delta) \approx \lVert y - f(\beta) - J\delta \rVert^2.$$

Taking the derivative with respect to δ and setting the result to zero gives:

$$(J^T J)\,\delta = J^T \left[y - f(\beta)\right]$$

where $J$ is the Jacobian matrix whose ith row equals $J_i$, and where $f$ and $y$ are vectors with ith component $f(x_i, \beta)$ and $y_i$, respectively.

This is a set of linear equations which can be solved for δ.
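A minimal sketch of solving these linear equations for δ is given below, for a hypothetical two-parameter exponential model so that the 2×2 system can be solved by hand. The function names and the synthetic data are assumptions made for the example; repeating the undamped step is the plain Gauss–Newton iteration.

```python
import math

def model(x, beta):
    return beta[0] * math.exp(beta[1] * x)

def jacobian_row(x, beta):
    e = math.exp(beta[1] * x)
    return (e, beta[0] * x * e)

def normal_equations(xs, ys, beta):
    """Return (A, g) with A = J^T J (2x2) and g = J^T [y - f(beta)]."""
    a00 = a01 = a11 = g0 = g1 = 0.0
    for x, y in zip(xs, ys):
        j0, j1 = jacobian_row(x, beta)
        r = y - model(x, beta)
        a00 += j0 * j0
        a01 += j0 * j1
        a11 += j1 * j1
        g0 += j0 * r
        g1 += j1 * r
    return ((a00, a01), (a01, a11)), (g0, g1)

def solve_2x2(A, g):
    """Solve the symmetric 2x2 system A delta = g by Cramer's rule."""
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    return ((A[1][1] * g[0] - A[0][1] * g[1]) / det,
            (A[0][0] * g[1] - A[1][0] * g[0]) / det)

def gauss_newton_step(xs, ys, beta):
    A, g = normal_equations(xs, ys, beta)
    return solve_2x2(A, g)

# Noise-free data from beta = (2.0, 0.5); start from a nearby guess.
xs = [0.0, 0.5, 1.0, 1.5, 2.0]
ys = [model(x, (2.0, 0.5)) for x in xs]
beta = (1.8, 0.55)
for _ in range(10):
    d = gauss_newton_step(xs, ys, beta)
    beta = (beta[0] + d[0], beta[1] + d[1])
```

With a starting point this close to the solution the undamped iteration converges; from a poor starting point the same step can overshoot, which is what the damping described next is meant to address.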

Levenberg's contribution is to replace this equation by a "damped version",

$$(J^T J + \lambda I)\,\delta = J^T \left[y - f(\beta)\right]$$

where I is the identity matrix, giving δ as the increment to the estimated parameter vector, β.

The (non-negative) damping factor, λ, is adjusted at each iteration. If reduction of S is rapid, a smaller value can be used, bringing the algorithm closer to the Gauss–Newton algorithm, whereas if an iteration gives insufficient reduction in the residual, λ can be increased, giving a step closer to the gradient descent direction. Note that the gradient of S with respect to β equals $-2\left(J^T \left[y - f(\beta)\right]\right)^T$. Therefore, for large values of λ, the step will be taken approximately in the direction opposite to the gradient, that is, the steepest-descent direction. If either the length of the calculated step, δ, or the reduction of the sum of squares from the latest parameter vector, β + δ, falls below predefined limits, iteration stops and the last parameter vector, β, is considered to be the solution.
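The two limiting cases can be read off from the damped equation. The short derivation below uses only the symbols defined in this section, with $\nabla S$ written for the gradient of S with respect to β as a row vector; it is a sketch of the reasoning rather than a convergence proof.

```latex
% Damped normal equations
(J^T J + \lambda I)\,\delta = J^T\,[\,y - f(\beta)\,]

% Small damping: the Gauss--Newton step is recovered
\lambda \to 0: \quad
\delta \to (J^T J)^{-1} J^T\,[\,y - f(\beta)\,]

% Large damping: \lambda I dominates J^T J
\lambda \gg \lVert J^T J \rVert: \quad
\delta \approx \frac{1}{\lambda}\, J^T\,[\,y - f(\beta)\,]
       = -\frac{1}{2\lambda}\,(\nabla S)^T
```

In the large-λ case the step is a short move along the negative gradient, with step length proportional to 1/λ.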

Levenberg's algorithm has the disadvantage that if the value of the damping factor, λ, is large, the curvature information in $J^T J$ is effectively not used at all. Marquardt provided the insight that we can scale each component of the gradient according to the curvature so that there is larger movement along the directions where the gradient is smaller. This avoids slow convergence in the direction of small gradient. Therefore, Marquardt replaced the identity matrix, I, with the diagonal matrix consisting of the diagonal elements of $J^T J$, resulting in the Levenberg–Marquardt algorithm:

$$\left(J^T J + \lambda\, \operatorname{diag}(J^T J)\right)\delta = J^T \left[y - f(\beta)\right].$$

A similar damping factor appears in Tikhonov regularization, which is used to solve linear ill-posed problems, as well as in ridge regression, an estimation technique in statistics.
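The effect of Marquardt's scaling can be seen on a deliberately badly scaled toy problem. In the sketch below the matrix `A` stands for $J^T J$ and `g` for $J^T[y - f(\beta)]$; the numbers and the function name `damped_step` are invented for illustration.

```python
def damped_step(A, g, lam, scale_by_diagonal):
    """Solve (A + lam * D) delta = g for a symmetric 2x2 matrix A.

    D is the identity (Levenberg) or diag(A) (Marquardt).
    """
    d0 = A[0][0] if scale_by_diagonal else 1.0
    d1 = A[1][1] if scale_by_diagonal else 1.0
    m00 = A[0][0] + lam * d0
    m11 = A[1][1] + lam * d1
    m01 = A[0][1]
    det = m00 * m11 - m01 * m01
    return ((m11 * g[0] - m01 * g[1]) / det,
            (m00 * g[1] - m01 * g[0]) / det)

# Hypothetical problem with curvature 100 along the first parameter and
# 0.01 along the second; the undamped Gauss-Newton step would be (1, 1).
A = ((100.0, 0.0), (0.0, 0.01))
g = (100.0, 0.01)

lev = damped_step(A, g, 10.0, scale_by_diagonal=False)  # identity damping
mar = damped_step(A, g, 10.0, scale_by_diagonal=True)   # diagonal scaling
```

With identity damping the step along the low-curvature second parameter is almost completely suppressed, whereas the diagonally scaled step shrinks both components by the same factor.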

Choice of damping parameter

Various more-or-less heuristic arguments have been put forward for the best choice for the damping parameter λ. Theoretical arguments exist showing why some of these choices guarantee local convergence of the algorithm; however, these choices can make the global convergence of the algorithm suffer from the undesirable properties of steepest descent, in particular very slow convergence close to the optimum.

The absolute values of any choice depend on how well-scaled the initial problem is. Marquardt recommended starting with a value $\lambda_0$ and a factor ν > 1. Initially, set λ = $\lambda_0$ and compute the residual sum of squares S(β) after one step from the starting point, first with the damping factor λ = $\lambda_0$ and secondly with $\lambda_0/\nu$. If both of these are worse than the initial point, then the damping is increased by successive multiplication by ν until a better point is found with a new damping factor of $\lambda_0 \nu^k$ for some k.

If use of the damping factor λ/ν results in a reduction in squared residual then this is taken as the new value of λ (and the new optimum location is taken as that obtained with this damping factor) and the process continues; if using λ/ν resulted in a worse residual, but using λ resulted in a better residual then λ is left unchanged and the new optimum is taken as the value obtained with λ as damping factor.
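The update rule described above can be sketched as a complete loop. The code below is one possible reading of that strategy for a hypothetical two-parameter exponential model; the default values of `lam`, `nu` and `tol`, the cap on the damping factor, and all function names are assumptions made for the example, not values prescribed by Marquardt.

```python
import math

def model(x, b):
    return b[0] * math.exp(b[1] * x)

def jac_row(x, b):
    e = math.exp(b[1] * x)
    return (e, b[0] * x * e)

def cost(xs, ys, b):
    return sum((y - model(x, b)) ** 2 for x, y in zip(xs, ys))

def trial_point(xs, ys, b, lam):
    """Solve (J^T J + lam * diag(J^T J)) delta = J^T r; return b + delta."""
    a00 = a01 = a11 = g0 = g1 = 0.0
    for x, y in zip(xs, ys):
        j0, j1 = jac_row(x, b)
        r = y - model(x, b)
        a00 += j0 * j0
        a01 += j0 * j1
        a11 += j1 * j1
        g0 += j0 * r
        g1 += j1 * r
    m00, m11 = a00 * (1.0 + lam), a11 * (1.0 + lam)
    det = m00 * m11 - a01 * a01
    d0 = (m11 * g0 - a01 * g1) / det
    d1 = (m00 * g1 - a01 * g0) / det
    return (b[0] + d0, b[1] + d1)

def levenberg_marquardt(xs, ys, b, lam=1e-2, nu=10.0, tol=1e-12, max_iter=100):
    s = cost(xs, ys, b)
    for _ in range(max_iter):
        # Try lam / nu first, then lam, then lam * nu, lam * nu**2, ...
        trial_lam = lam / nu
        while True:
            b_new = trial_point(xs, ys, b, trial_lam)
            s_new = cost(xs, ys, b_new)
            if s_new < s or trial_lam > 1e12:
                break
            trial_lam *= nu
        if s_new >= s:          # no improvement even with huge damping
            break
        converged = s - s_new < tol
        b, s, lam = b_new, s_new, trial_lam
        if converged:
            break
    return b, s

xs = [0.5 * i for i in range(7)]
ys = [model(x, (2.0, 0.5)) for x in xs]       # noise-free synthetic data
b_fit, s_fit = levenberg_marquardt(xs, ys, (1.0, 1.0))
```

Accepting the first damping factor in the sequence that lowers S reproduces the three cases in the text: λ/ν is adopted if it helps, λ is kept if only it helps, and otherwise λ grows by factors of ν.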

Example

In this example we try to fit the function $y = a \cos(bX) + b \sin(aX)$ using the Levenberg–Marquardt algorithm implemented in GNU Octave as the leasqr function. The three graphs (Fig. 1, 2, 3) show progressively better fitting for the parameters a = 100, b = 102 used in the initial curve. Only when the parameters in Fig. 3 are chosen closest to the original do the curves fit exactly. This equation is an example of very sensitive initial conditions for the Levenberg–Marquardt algorithm. One reason for this sensitivity is the existence of multiple minima: the function $\cos(\beta x)$ has minima at parameter value $\hat{\beta}$ and $\hat{\beta} + 2n\pi$.
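How multiple minima arise can be illustrated with a hypothetical one-parameter model, cos(βx), sampled at integer values of x. The model, the sample points and the value of `beta_hat` are assumptions chosen for the illustration; they are not the data behind the figures.

```python
import math

def cost(beta, xs, ys):
    """Sum of squared deviations for the one-parameter model cos(beta * x)."""
    return sum((y - math.cos(beta * x)) ** 2 for x, y in zip(xs, ys))

xs = list(range(10))                  # integer sample points (an assumption)
beta_hat = 0.7                        # parameter used to generate the data
ys = [math.cos(beta_hat * x) for x in xs]

s_at_hat = cost(beta_hat, xs, ys)                   # the generating parameter
s_shifted = cost(beta_hat + 2 * math.pi, xs, ys)    # shifted by one period
s_between = cost(beta_hat + math.pi, xs, ys)        # halfway between the two
```

At integer x, shifting β by 2π leaves every model value unchanged, so both parameter values fit the data equally well, while the cost is clearly positive in between. A local method such as the LMA ends up in whichever of these minima is nearest to its starting point.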

Descriptions

- Detailed description of the algorithm can be found in Numerical Recipes in C, Chapter 15.5: Nonlinear models

- C. T. Kelley, Iterative Methods for Optimization, SIAM Frontiers in Applied Mathematics, no 18, 1999, ISBN 0-89871-433-8. Online copy

- History of the algorithm in SIAM news

- A tutorial by Ananth Ranganathan

- Methods for Non-Linear Least Squares Problems by K. Madsen, H.B. Nielsen, O. Tingleff is a tutorial discussing non-linear least-squares in general and the Levenberg-Marquardt method in particular

- T. Strutz: Data Fitting and Uncertainty (A practical introduction to weighted least squares and beyond). Vieweg+Teubner, ISBN 978-3-8348-1022-9.

Implementations

- Levenberg-Marquardt is a built-in algorithm in Mathematica.

- Levenberg-Marquardt is a built-in algorithm in MATLAB.

- Levenberg-Marquardt is a built-in algorithm in Origin.

- The oldest implementation still in use is lmdif, from MINPACK, in Fortran, in the public domain. See also:
  - lmfit, a translation of lmdif into C/C++ with an easy-to-use wrapper for curve fitting, public domain.
  - The GNU Scientific Library has a C interface to MINPACK.
  - C/C++ Minpack includes the Levenberg–Marquardt algorithm.
  - Several high-level languages and mathematical packages have wrappers for the MINPACK routines, among them:
    - Python library scipy, module scipy.optimize.leastsq.
    - IDL, add-on MPFIT.
    - R has the minpack.lm package.

- levmar is an implementation in C/C++ with support for constraints, distributed under the GNU General Public License.
  - levmar includes a MEX file interface for MATLAB.
  - Perl (PDL), Python and Haskell interfaces to levmar are available: see PDL::Fit::Levmar, PyLevmar and HackageDB levmar.

- sparseLM is a C implementation aimed at minimizing functions with large, arbitrarily sparse Jacobians. Includes a MATLAB MEX interface.

- InMin library contains a C++ implementation of the algorithm based on the eigen C++ linear algebra library. It has a pure C-language API as well as a Python binding.

- ALGLIB has implementations of improved LMA in C# / C++ / Delphi / Visual Basic. Improved algorithm takes less time to converge and can use either Jacobian or exact Hessian.

- NMath has an implementation for the .NET Framework.

- gnuplot uses its own implementation (gnuplot.info).

- Java programming language implementations: 1) Javanumerics, 2) LMA-package (a small, user friendly and well documented implementation with examples and support), 3) Apache Commons Math.

- OOoConv implements the L-M algorithm as an OpenOffice.org Calc spreadsheet.

- SAS: there are multiple ways to access SAS's implementation of the Levenberg-Marquardt algorithm. It can be accessed via the NLPLM call in PROC IML, through the LSQ statement in PROC NLP, and through the METHOD=MARQUARDT option in PROC NLIN.