Microkernel

Encyclopedia

Computer science

Computer science or computing science is the study of the theoretical foundations of information and computation and of practical techniques for their implementation and application in computer systems...

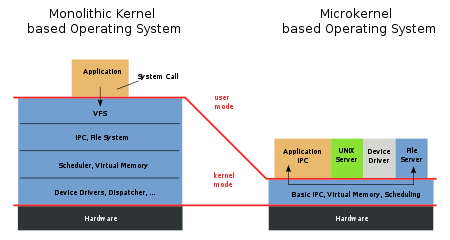

, a microkernel is the near-minimum amount of software that can provide the mechanisms needed to implement an operating system

Operating system

An operating system is a set of programs that manage computer hardware resources and provide common services for application software. The operating system is the most important type of system software in a computer system...

(OS). These mechanisms include low-level address space

Address space

In computing, an address space defines a range of discrete addresses, each of which may correspond to a network host, peripheral device, disk sector, a memory cell or other logical or physical entity.- Overview :...

management, thread

Thread (computer science)

In computer science, a thread of execution is the smallest unit of processing that can be scheduled by an operating system. The implementation of threads and processes differs from one operating system to another, but in most cases, a thread is contained inside a process...

management, and inter-process communication

Inter-process communication

In computing, Inter-process communication is a set of methods for the exchange of data among multiple threads in one or more processes. Processes may be running on one or more computers connected by a network. IPC methods are divided into methods for message passing, synchronization, shared...

(IPC). If the hardware provides multiple rings or CPU modes

CPU modes

CPU modes are operating modes for the central processing unit of some computer architectures that place restrictions on the type and scope of operations that can be performed by certain processes being run by the CPU...

, the microkernel is the only software executing at the most privileged level (generally referred to as supervisor or kernel mode). Traditional operating system functions, such as device driver

Device driver

In computing, a device driver or software driver is a computer program allowing higher-level computer programs to interact with a hardware device....

s, protocol stack

Protocol stack

The protocol stack is an implementation of a computer networking protocol suite. The terms are often used interchangeably. Strictly speaking, the suite is the definition of the protocols, and the stack is the software implementation of them....

s and file system

File system

A file system is a means to organize data expected to be retained after a program terminates by providing procedures to store, retrieve and update data, as well as manage the available space on the device which contain it. A file system organizes data in an efficient manner and is tuned to the...

s, are removed from the microkernel to run in user space

User space

A conventional computer operating system usually segregates virtual memory into kernel space and user space. Kernel space is strictly reserved for running the kernel, kernel extensions, and most device drivers...

. In source code size, microkernels tend to be under 10,000 lines of code, as a general rule. MINIX

Minix

MINIX is a Unix-like computer operating system based on a microkernel architecture created by Andrew S. Tanenbaum for educational purposes; MINIX also inspired the creation of the Linux kernel....

for example has around 4,000 lines of code. kernels larger than 20,000 lines are generally not considered microkernels.

Microkernels developed in the 1980s as a response to changes in the computer world, and several challenges adapting existing "mono-kernels" to these new systems. New device drivers, protocol stacks, file systems and other low-level systems were being developed all the time, code that was normally located in the monolithic kernel, and thus required considerable work and careful code management to work on. Microkernels were developed with the idea that all of these services would be implemented as user-space programs, like any other, allowing them to be worked on monolithically and started and stopped like any other program. This would not only allow these services to be more easily worked on, but also separated the kernel code to allow it to be finely tuned without worrying about unintended side effects. Moreover, it would allow entirely new operating systems to be "built up" on a common core, aiding OS research.

Microkernels were a very hot topic in the 1980s when the first usable local area network

Local area network

A local area network is a computer network that interconnects computers in a limited area such as a home, school, computer laboratory, or office building...

s were being introduced. The same mechanisms that allowed the kernel to be distributed into user space also allowed the system to be distributed across network links. The first microkernels, notably Mach

Mach (kernel)

Mach is an operating system kernel developed at Carnegie Mellon University to support operating system research, primarily distributed and parallel computation. Although Mach is often mentioned as one of the earliest examples of a microkernel, not all versions of Mach are microkernels...

, proved to have disappointing performance, but the inherent advantages appeared so great that it was a major line of research into the late 1990s. However, during this time the speed of computers grew greatly in relation to networking systems, and the disadvantages in performance came to overwhelm the advantages in development terms. Many attempts were made to adapt the existing systems to have better performance, but the overhead was always considerable and most of these efforts required the user-space programs to be moved back into the kernel. By 2000, most large-scale (Mach-like) efforts had ended, although OpenStep

OpenStep

OpenStep was an object-oriented application programming interface specification for an object-oriented operating system that used a non-NeXTSTEP operating system as its core, principally developed by NeXT with Sun Microsystems. OPENSTEP was a specific implementation of the OpenStep API developed...

used an adapted Mach kernel called XNU

XNU

XNU is the computer operating system kernel that Apple Inc. acquired and developed for use in the Mac OS X operating system and released as free and open source software as part of the Darwin operating system...

, which is now used in the OS known as Darwin

Darwin (operating system)

Darwin is an open source POSIX-compliant computer operating system released by Apple Inc. in 2000. It is composed of code developed by Apple, as well as code derived from NeXTSTEP, BSD, and other free software projects....

, which is the open source part of Mac OS X

Mac OS X

Mac OS X is a series of Unix-based operating systems and graphical user interfaces developed, marketed, and sold by Apple Inc. Since 2002, has been included with all new Macintosh computer systems...

Although major work on microkernels largely ended, experimenters continued development. It has since been shown that many of the performance problems of earlier designs were not a fundamental requirement of the concept, but instead due to the designer's desire to use single-purpose systems to implement as many of these services as possible. Using a more pragmatic approach to the problem, including assembly code and relying on the processor to enforce concepts normally supported in software led to a new series of microkernels with dramatically improved performance. The historical term nanokernel has been used to distinguish modern, high-performance microkernels from earlier implementations which still contained many system services. However, as nanokernels have all but replaced their microkernel progenitors, the term has fallen into disuse.

Microkernels are closely related to exokernel

Exokernel

Exokernel is an operating system kernel developed by the MIT Parallel and Distributed Operating Systems group, and also a class of similar operating systems....

s.

They also have much in common with hypervisor

Hypervisor

In computing, a hypervisor, also called virtual machine manager , is one of many hardware virtualization techniques that allow multiple operating systems, termed guests, to run concurrently on a host computer. It is so named because it is conceptually one level higher than a supervisory program...

s,

but the latter make no claim to minimality and are specialized to supporting virtual machine

Virtual machine

A virtual machine is a "completely isolated guest operating system installation within a normal host operating system". Modern virtual machines are implemented with either software emulation or hardware virtualization or both together.-VM Definitions:A virtual machine is a software...

s; indeed, the L4 microkernel frequently finds use in a hypervisor capacity.

Introduction

Early operating system kernels were rather small, partly because computer memory was limited. As the capability of computers grew, the number of devices the kernel had to control also grew. Through the early history of UnixUnix

Unix is a multitasking, multi-user computer operating system originally developed in 1969 by a group of AT&T employees at Bell Labs, including Ken Thompson, Dennis Ritchie, Brian Kernighan, Douglas McIlroy, and Joe Ossanna...

, kernels were generally small, even though those kernels contained device drivers and file system managers. When address spaces increased from 16 to 32 bits, kernel design was no longer cramped by the hardware architecture, and kernels began to grow.

The Berkeley Software Distribution

Berkeley Software Distribution

Berkeley Software Distribution is a Unix operating system derivative developed and distributed by the Computer Systems Research Group of the University of California, Berkeley, from 1977 to 1995...

(BSD) of Unix

Unix

Unix is a multitasking, multi-user computer operating system originally developed in 1969 by a group of AT&T employees at Bell Labs, including Ken Thompson, Dennis Ritchie, Brian Kernighan, Douglas McIlroy, and Joe Ossanna...

began the era of big kernels. In addition to operating a basic system consisting of the CPU, disks and printers, BSD started adding additional file system

File system

A file system is a means to organize data expected to be retained after a program terminates by providing procedures to store, retrieve and update data, as well as manage the available space on the device which contain it. A file system organizes data in an efficient manner and is tuned to the...

s, a complete TCP/IP networking system

Protocol stack

The protocol stack is an implementation of a computer networking protocol suite. The terms are often used interchangeably. Strictly speaking, the suite is the definition of the protocols, and the stack is the software implementation of them....

, and a number of "virtual" devices that allowed the existing programs to work invisibly over the network. This growth continued for many years, resulting in kernels with millions of lines of source code

Source code

In computer science, source code is text written using the format and syntax of the programming language that it is being written in. Such a language is specially designed to facilitate the work of computer programmers, who specify the actions to be performed by a computer mostly by writing source...

. As a result of this growth, kernels were more prone to bugs and became increasingly difficult to maintain.

The microkernel was designed to address the increasing growth of kernels and the difficulties that came with them. In theory, the microkernel design allows for easier management of code due to its division into user space

User space

A conventional computer operating system usually segregates virtual memory into kernel space and user space. Kernel space is strictly reserved for running the kernel, kernel extensions, and most device drivers...

services. This also allows for increased security and stability resulting from the reduced amount of code running in kernel mode. For example, if a networking service crashed due to buffer overflow

Buffer overflow

In computer security and programming, a buffer overflow, or buffer overrun, is an anomaly where a program, while writing data to a buffer, overruns the buffer's boundary and overwrites adjacent memory. This is a special case of violation of memory safety....

, only the networking service's memory would be corrupted, leaving the rest of the system still functional.

Inter-process communication

Inter-process communicationInter-process communication

In computing, Inter-process communication is a set of methods for the exchange of data among multiple threads in one or more processes. Processes may be running on one or more computers connected by a network. IPC methods are divided into methods for message passing, synchronization, shared...

(IPC) is any mechanism which allows separate processes to communicate with each other, usually by sending messages

Message passing

Message passing in computer science is a form of communication used in parallel computing, object-oriented programming, and interprocess communication. In this model, processes or objects can send and receive messages to other processes...

. Shared memory

Shared memory

In computing, shared memory is memory that may be simultaneously accessed by multiple programs with an intent to provide communication among them or avoid redundant copies. Depending on context, programs may run on a single processor or on multiple separate processors...

is strictly speaking also an inter-process communication mechanism, but the abbreviation IPC usually only refers to message passing, and it is the latter that is particularly relevant to microkernels. IPC allows the operating system to be built from a number of small programs called servers, which are used by other programs on the system, invoked via IPC. Most or all support for peripheral hardware is handled in this fashion, with servers for device drivers, network protocol stacks, file systems, graphics, etc.

IPC can be synchronous or asynchronous. Asynchronous IPC is analogous to network communication: the sender dispatches a message and continues executing. The receiver checks (polls) for the availability of the message by attempting a receive, or is alerted to it via some notification mechanism. Asynchronous IPC requires that the kernel maintains buffers and queues for messages, and deals with buffer overflows; it also requires double copying of messages (sender to kernel and kernel to receiver). In synchronous IPC, the first party (sender or receiver) blocks until the other party is ready to perform the IPC. It does not require buffering or multiple copies, but the implicit rendezvous can make programming tricky. Most programmers prefer asynchronous send and synchronous receive.

First-generation microkernels typically supported synchronous as well as asynchronous IPC, and suffered from poor IPC performance. Jochen Liedtke

Jochen Liedtke

Jochen Liedtke was a German computer scientist, noted for his work on microkernels, especially the creation of the L4 microkernel family....

identified the design and implementation of the IPC mechanisms as the underlying reason for this poor performance. In his L4 microkernel

L4 microkernel family

L4 is a family of second-generation microkernels, generally used to implement Unix-like operating systems, but also used in a variety of other systems.L4 was a response to the poor performance of earlier microkernel-base operating systems...

he pioneered methods that lowered IPC costs by an order of magnitude

Order of magnitude

An order of magnitude is the class of scale or magnitude of any amount, where each class contains values of a fixed ratio to the class preceding it. In its most common usage, the amount being scaled is 10 and the scale is the exponent being applied to this amount...

. These include an IPC system call that supports a send as well as a receive operation, making all IPC synchronous, and passing as much data as possible in registers. Furthermore, Liedtke introduced the concept of the direct process switch, where during an IPC execution an (incomplete) context switch

Context switch

A context switch is the computing process of storing and restoring the state of a CPU so that execution can be resumed from the same point at a later time. This enables multiple processes to share a single CPU. The context switch is an essential feature of a multitasking operating system...

is performed from the sender directly to the receiver. If, as in L4, part or all of the message is passed in registers, this transfers the in-register part of the message without any copying at all. Furthermore, the overhead of invoking the scheduler is avoided; this is especially beneficial in the common case where IPC is used in an RPC

Remote procedure call

In computer science, a remote procedure call is an inter-process communication that allows a computer program to cause a subroutine or procedure to execute in another address space without the programmer explicitly coding the details for this remote interaction...

-type fashion by a client invoking a server. Another optimization, called lazy scheduling, avoids traversing scheduling queues during IPC by leaving threads that block during IPC in the ready queue. Once the scheduler is invoked, it moves such threads to the appropriate waiting queue. As in many cases a thread gets unblocked before the next scheduler invocation, this approach saves significant work. Similar approaches have since been adopted by QNX

QNX

QNX is a commercial Unix-like real-time operating system, aimed primarily at the embedded systems market. The product was originally developed by Canadian company, QNX Software Systems, which was later acquired by Canadian BlackBerry-producer Research In Motion.-Description:As a microkernel-based...

and MINIX 3

MINIX 3

MINIX 3 is a project to create a small, highly reliable and functional Unix-like operating system. It is published under the BSD license.The main goal of the project is for the system to be fault-tolerant by detecting and repairing its own faults on the fly, without user intervention...

.

In a client-server system, most communication is essentially synchronous, even if using asynchronous primitives, as the typical operation is a client invoking a server and then waiting for a reply. As it also lends itself to more efficient implementation, modern microkernels generally follow L4's lead and only provide a synchronous IPC primitive. Asynchronous IPC can be implemented on top by using helper threads. However, versions of L4 deployed in commercial products have found it necessary to add an asynchronous notification mechanism to better support asynchronous communication. This signal

Signal (computing)

A signal is a limited form of inter-process communication used in Unix, Unix-like, and other POSIX-compliant operating systems. Essentially it is an asynchronous notification sent to a process in order to notify it of an event that occurred. When a signal is sent to a process, the operating system...

-like mechanism does not carry data and therefore does not require buffering by the kernel.

As synchronous IPC blocks the first party until the other is ready, unrestricted use could easily lead to deadlocks. Furthermore, a client could easily mount a denial-of-service attack on a server by sending a request and never attempting to receive the reply. Therefore synchronous IPC must provide a means to prevent indefinite blocking. Many microkernels provide timeouts

Timeout (telecommunication)

In telecommunication and related engineering , the term timeout or time-out has several meanings, including...

on IPC calls, which limit the blocking time. In practice, choosing sensible timeout values is difficult, and systems almost inevitably use infinite timeouts for clients and zero timeouts for servers. As a consequence, the trend is towards not providing arbitrary timeouts, but only a flag which indicates that the IPC should fail immediately if the partner is not ready. This approach effectively provides a choice of the two timeout values of zero and infinity. Recent versions of L4 and MINIX have gone down this path (older versions of L4 used timeouts, as does QNX).

Servers

Microkernel servers are essentially daemonDaemon (computer software)

In Unix and other multitasking computer operating systems, a daemon is a computer program that runs as a background process, rather than being under the direct control of an interactive user...

programs like any others, except that the kernel grants some of them privileges to interact with parts of physical memory that are otherwise off limits to most programs. This allows some servers, particularly device drivers, to interact directly with hardware.

A basic set of servers for a general-purpose microkernel includes file system servers, device driver servers, networking servers, display servers, and user interface device servers. This set of servers (drawn from QNX

QNX

QNX is a commercial Unix-like real-time operating system, aimed primarily at the embedded systems market. The product was originally developed by Canadian company, QNX Software Systems, which was later acquired by Canadian BlackBerry-producer Research In Motion.-Description:As a microkernel-based...

) provides roughly the set of services offered by a Unix monolithic kernel

Monolithic kernel

A monolithic kernel is an operating system architecture where the entire operating system is working in the kernel space and alone as supervisor mode...

. The necessary servers are started at system startup and provide services, such as file, network, and device access, to ordinary application programs. With such servers running in the environment of a user application, server development is similar to ordinary application development, rather than the build-and-boot process needed for kernel development.

Additionally, many "crashes" can be corrected by simply stopping and restarting the server

Crash-only software

Crash-only software refers to computer programs that handle failures by simply restarting, without attempting any sophisticated recovery. Correctly written components of crash-only software can microreboot to a known-good state without the help of a user...

. However, part of the system state is lost with the failing server, hence this approach requires applications to cope with failure. A good example is a server responsible for TCP/IP

Internet protocol suite

The Internet protocol suite is the set of communications protocols used for the Internet and other similar networks. It is commonly known as TCP/IP from its most important protocols: Transmission Control Protocol and Internet Protocol , which were the first networking protocols defined in this...

connections: If this server is restarted, applications will experience a "lost" connection, a normal occurrence in networked system. For other services, failure is less expected and may require changes to application code. For QNX, restart capability is offered as the QNX High Availability Toolkit.

To make all servers restartable, some microkernels have concentrated on adding various database

Database

A database is an organized collection of data for one or more purposes, usually in digital form. The data are typically organized to model relevant aspects of reality , in a way that supports processes requiring this information...

-like methods such as transaction

Database transaction

A transaction comprises a unit of work performed within a database management system against a database, and treated in a coherent and reliable way independent of other transactions...

s, replication

Replication (computer science)

Replication is the process of sharing information so as to ensure consistency between redundant resources, such as software or hardware components, to improve reliability, fault-tolerance, or accessibility. It could be data replication if the same data is stored on multiple storage devices, or...

and checkpointing to preserve essential state across single server restarts. An example is ChorusOS

ChorusOS

ChorusOS is a microkernel real-time operating system designed for embedded systems. Sun Microsystems acquired Chorus Systèmes, the company which created ChorusOS, in 1997. Sun no longer supports ChorusOS. The founders of Chorus Systems started a new company called Jaluna in August 2002. Jaluna has...

, which was made for high-availability applications in the telecommunication

Telecommunication

Telecommunication is the transmission of information over significant distances to communicate. In earlier times, telecommunications involved the use of visual signals, such as beacons, smoke signals, semaphore telegraphs, signal flags, and optical heliographs, or audio messages via coded...

s world. Chorus included features to allow any "properly written" server to be restarted at any time, with clients using those servers being paused while the server brought itself back into its original state. However, such kernel features are incompatible with the minimality principle, and are thus not provided in modern microkernels, which instead rely on appropriate user-level protocols.

Device drivers

Device driverDevice driver

In computing, a device driver or software driver is a computer program allowing higher-level computer programs to interact with a hardware device....

s frequently perform direct memory access

Direct memory access

Direct memory access is a feature of modern computers that allows certain hardware subsystems within the computer to access system memory independently of the central processing unit ....

(DMA), and therefore can write to arbitrary locations of physical memory, including over kernel data structures. Such drivers must therefore be trusted. It is a common misconception that this means that they must be part of the kernel. In fact, a driver is not inherently more or less trustworthy by being part of the kernel.

While running a device driver in user space does not necessarily reduce the damage a misbehaving driver can cause, in practice it is beneficial for system stability in the presence of buggy (rather than malicious) drivers: memory-access violations by the driver code itself (as opposed to the device) may still be caught by the memory-management hardware. Furthermore, many devices are not DMA-capable, their drivers can be made untrusted by running them in user space. Recently, an increasing number of computers feature IOMMU

IOMMU

In computing, an input/output memory management unit is a memory management unit that connects a DMA-capable I/O bus to the main memory...

s, many of which can be used to restrict a device's access to physical memory. (IBM mainframes have had IO MMUs since the IBM System/360 Model 67

IBM System/360 Model 67

The IBM System/360 Model 67 was an important IBM mainframe model in the late 1960s. Unlike the rest of the S/360 series, it included features to facilitate time-sharing applications, notably a DAT box to support virtual memory and 32-bit addressing...

and System/370

System/370

The IBM System/370 was a model range of IBM mainframes announced on June 30, 1970 as the successors to the System/360 family. The series maintained backward compatibility with the S/360, allowing an easy migration path for customers; this, plus improved performance, were the dominant themes of the...

.) This also allows user-mode drivers to become untrusted.

User-mode drivers actually predate microkernels. The Michigan Terminal System

Michigan Terminal System

The Michigan Terminal System is one of the first time-sharing computer operating systems. Initially developed in 1967 at the University of Michigan for use on IBM S/360-67, S/370 and compatible mainframe computers, it was developed and used by a consortium of eight universities in the United...

(MTS), in 1967, supported user space drivers (including its file system support), the first operating system to be designed with that capability.

Historically, drivers were less of a problem, as the number of devices was small and trusted anyway, so having them in the kernel simplified the design and avoided potential performance problems. This led to the traditional driver-in-the-kernel style of Unix, Linux, and Windows.

With the proliferation of various kinds of peripherals, the amount of driver code escalated and in modern operating systems dominates the kernel in code size.

Essential components and minimality

As a microkernel must allow building arbitrary operating system services on top, it must provide some core functionality. At the least, this includes:- some mechanisms for dealing with address spaceAddress spaceIn computing, an address space defines a range of discrete addresses, each of which may correspond to a network host, peripheral device, disk sector, a memory cell or other logical or physical entity.- Overview :...

s — this is required for managing memory protection; - some execution abstraction to manage CPU allocation — typically threadsThread (computer science)In computer science, a thread of execution is the smallest unit of processing that can be scheduled by an operating system. The implementation of threads and processes differs from one operating system to another, but in most cases, a thread is contained inside a process...

or scheduler activations; and - inter-process communicationInter-process communicationIn computing, Inter-process communication is a set of methods for the exchange of data among multiple threads in one or more processes. Processes may be running on one or more computers connected by a network. IPC methods are divided into methods for message passing, synchronization, shared...

— required to invoke servers running in their own address spaces.

This minimal design was pioneered by Brinch Hansen's Nucleus

RC 4000 Multiprogramming System

The RC 4000 Multiprogramming System was an operating system developed for the RC 4000 minicomputer in 1969. It is historically notable for being the first attempt to break down an operating system into a group of interacting programs communicating via a message passing kernel...

and the hypervisor of IBM's VM

VM (operating system)

VM refers to a family of IBM virtual machine operating systems used on IBM mainframes System/370, System/390, zSeries, System z and compatible systems, including the Hercules emulator for personal computers. The first version, released in 1972, was VM/370, or officially Virtual Machine Facility/370...

. It has since been formalised in Liedtke's minimality principle:

A concept is tolerated inside the microkernel only if moving it outside the kernel, i.e., permitting competing implementations, would prevent the implementation of the system's required functionality.

Everything else can be done in a usermode program, although device drivers implemented as user programs may on some processor architectures require special privileges to access I/O hardware.

Related to the minimality principle, and equally important for microkernel design, is the separation of mechanism and policy

Separation of mechanism and policy

The separation of mechanism and policy is a design principle in computer science. It states that mechanisms should not dictate the policies according to which decisions are made about which operations to authorize, and which resources to...

, it is what enables the construction of arbitrary systems on top of a minimal kernel. Any policy built into the kernel cannot be overwritten at user level and therefore limits the generality of the microkernel.

Policy implemented in user-level servers can be changed by replacing the servers (or letting the application choose between competing servers offering similar services).

For efficiency, most microkernels contain schedulers and manage timers, in violation of the minimality principle and the principle of policy-mechanism separation.

Start up (booting

Booting

In computing, booting is a process that begins when a user turns on a computer system and prepares the computer to perform its normal operations. On modern computers, this typically involves loading and starting an operating system. The boot sequence is the initial set of operations that the...

) of a microkernel-based system requires device driver

Device driver

In computing, a device driver or software driver is a computer program allowing higher-level computer programs to interact with a hardware device....

s, which are not part of the kernel. Typically this means that they are packaged with the kernel in the boot image, and the kernel supports a bootstrap protocol that defines how the drivers are located and started; this is the traditional bootstrap procedure of L4 microkernels

L4 microkernel family

L4 is a family of second-generation microkernels, generally used to implement Unix-like operating systems, but also used in a variety of other systems.L4 was a response to the poor performance of earlier microkernel-base operating systems...

. Some microkernels simplify this by placing some key drivers inside the kernel (in violation of the minimality principle), LynxOS

LynxOS

The LynxOS RTOS is a Unix-like real-time operating system from LynuxWorks . Sometimes known as the Lynx Operating System, LynxOS features full POSIX conformance and, more recently, Linux compatibility...

and the original Minix

Minix

MINIX is a Unix-like computer operating system based on a microkernel architecture created by Andrew S. Tanenbaum for educational purposes; MINIX also inspired the creation of the Linux kernel....

are examples. Some even include a file system

File system

A file system is a means to organize data expected to be retained after a program terminates by providing procedures to store, retrieve and update data, as well as manage the available space on the device which contain it. A file system organizes data in an efficient manner and is tuned to the...

in the kernel to simplify booting. In other cases, GNU GRUB

GNU GRUB

GNU GRUB is a boot loader package from the GNU Project. GRUB is the reference implementation of the Multiboot Specification, which provides a user the choice to boot one of multiple operating systems installed on a computer or select a specific kernel configuration available on a particular...

for example, microkernel-based system may boot via multiboot compatible boot loader. Such systems usually load statically-linked servers to make an initial bootstrap or mount an OS image to continue bootstrapping.

A key component of a microkernel is a good IPC

Inter-process communication

In computing, Inter-process communication is a set of methods for the exchange of data among multiple threads in one or more processes. Processes may be running on one or more computers connected by a network. IPC methods are divided into methods for message passing, synchronization, shared...

system and virtual-memory-manager design that allows implementing page-fault handling and swapping in usermode servers in a safe way. Since all services are performed by usermode programs, efficient means of communication between programs are essential, far more so than in monolithic kernels. The design of the IPC system makes or breaks a microkernel. To be effective, the IPC system must not only have low overhead, but also interact well with CPU scheduling.

Performance

On most mainstream processors, obtaining a service is inherently more expensive in a microkernel-based system than a monolithic system. In the monolithic system, the service is obtained by a single system call, which requires two mode switches (changes of the processor's ringRing (computer security)

In computer science, hierarchical protection domains, often called protection rings, are a mechanism to protect data and functionality from faults and malicious behaviour . This approach is diametrically opposite to that of capability-based security.Computer operating systems provide different...

or CPU mode

CPU modes

CPU modes are operating modes for the central processing unit of some computer architectures that place restrictions on the type and scope of operations that can be performed by certain processes being run by the CPU...

). In the microkernel-based system, the service is obtained by sending an IPC message to a server, and obtaining the result in another IPC message from the server. This requires a context switch

Context switch

A context switch is the computing process of storing and restoring the state of a CPU so that execution can be resumed from the same point at a later time. This enables multiple processes to share a single CPU. The context switch is an essential feature of a multitasking operating system...

if the drivers are implemented as processes, or a function call if they are implemented as procedures. In addition, passing actual data to the server and back may incur extra copying overhead, while in a monolithic system the kernel can directly access the data in the client's buffers.

Performance is therefore a potential issue in microkernel systems. Indeed, the experience of first-generation microkernels such as Mach

Mach (kernel)

Mach is an operating system kernel developed at Carnegie Mellon University to support operating system research, primarily distributed and parallel computation. Although Mach is often mentioned as one of the earliest examples of a microkernel, not all versions of Mach are microkernels...

and ChorusOS

ChorusOS

ChorusOS is a microkernel real-time operating system designed for embedded systems. Sun Microsystems acquired Chorus Systèmes, the company which created ChorusOS, in 1997. Sun no longer supports ChorusOS. The founders of Chorus Systems started a new company called Jaluna in August 2002. Jaluna has...

showed that systems based on them performed very poorly.

However, Jochen Liedtke

Jochen Liedtke

Jochen Liedtke was a German computer scientist, noted for his work on microkernels, especially the creation of the L4 microkernel family....

showed that Mach's performance problems were the result of poor design and implementation, and specifically Mach's excessive cache

Cache

In computer engineering, a cache is a component that transparently stores data so that future requests for that data can be served faster. The data that is stored within a cache might be values that have been computed earlier or duplicates of original values that are stored elsewhere...

footprint.

Liedtke demonstrated with his own L4 microkernel that through careful design and implementation, and especially by following the minimality principle, IPC costs could be reduced by more than an order of magnitude compared to Mach. L4's IPC performance is still unbeaten across a range of architectures.

While these results demonstrate that the poor performance of systems based on first-generation microkernels is not representative for second-generation kernels such as L4, this constitutes no proof that microkernel-based systems can be built with good performance. It has been shown that a monolithic Linux server ported to L4 exhibits only a few percent overhead over native Linux.

However, such a single-server system exhibits few, if any, of the advantages microkernels are supposed to provide by structuring operating system functionality into separate servers.

A number of commercial multi-server systems exist, in particular the real-time systems

Real-time operating system

A real-time operating system is an operating system intended to serve real-time application requests.A key characteristic of a RTOS is the level of its consistency concerning the amount of time it takes to accept and complete an application's task; the variability is jitter...

QNX

QNX

QNX is a commercial Unix-like real-time operating system, aimed primarily at the embedded systems market. The product was originally developed by Canadian company, QNX Software Systems, which was later acquired by Canadian BlackBerry-producer Research In Motion.-Description:As a microkernel-based...

and Integrity

Integrity (operating system)

INTEGRITY is a real-time operating system produced and marketed by Green Hills Software. It is royalty-free, POSIX-certified, and intended for use in embedded systems needing reliability, availability, and fault tolerance. It is built atop the velOSity microkernel and is intended mainly for modern...

. No comprehensive comparison of performance relative to monolithic systems has been published for those multiserver systems. Furthermore, performance does not seem to be the overriding concern for those commercial systems, which instead emphasize reliably quick interrupt handling response times (QNX) and simplicity for the sake of robustness. An attempt to build a high-performance multiserver operating system was the IBM Sawmill Linux project.

However, this project was never completed.

It has been shown in the meantime that user-level device drivers can come close to the performance of in-kernel drivers even for such high-throughput, high-interrupt devices as Gigabit Ethernet. This seems to imply that high-performance multi-server systems are possible.

Security

The security benefits of microkernels have been frequently discussed. In the context of security the minimality principle of microkernels is a direct consequence of the principle of least privilege, according to which all code should have only the privileges needed to provide required functionality. Minimality requires that a system's trusted computing baseTrusted computing base

The trusted computing base of a computer system is the set of all hardware, firmware, and/or software components that are critical to its security, in the sense that bugs or vulnerabilities occurring inside the TCB might jeopardize the security properties of the entire system...

(TCB) should be kept minimal. As the kernel (the code that executes in the privileged mode of the hardware) is always part of the TCB, minimizing it is natural in a security-driven design.

Consequently, microkernel designs have been used for systems designed for high-security applications, including KeyKOS

KeyKOS

KeyKOS is a persistent, pure capability-based operating system for the IBM S/370 mainframe computers. It allows emulating the VM, MVS, and POSIX environments. It is a predecessor of the Extremely Reliable Operating System , and its successors, the CapROS and Coyotos operating systems...

, EROS

Extremely Reliable Operating System

EROS is an operating system developed by The EROS Group, LLC., the Johns Hopkins University, and the University of Pennsylvania. Features include automatic data and process persistence, some preliminary real-time support, and capability-based security. EROS is purely a research operating system,...

and military systems. In fact common criteria

Common Criteria

The Common Criteria for Information Technology Security Evaluation is an international standard for computer security certification...

(CC) at the highest assurance level (Evaluation Assurance Level

Evaluation Assurance Level

The Evaluation Assurance Level of an IT product or system is a numerical grade assigned following the completion of a Common Criteria security evaluation, an international standard in effect since 1999. The increasing assurance levels reflect added assurance requirements that must be met to...

(EAL) 7) has an explicit requirement that the target of evaluation be “simple”, an acknowledgment of the practical impossibility of establishing true trustworthiness for a complex system.

Third generation

Recent work on microkernels has been focusing on formal specifications of the kernel API, and formal proofs of security properties of the API. The first example of this is a mathematical proof of the confinement mechanisms in EROS, based on a simplified model of the EROS API. More recently, a comprehensive set of machine-checked proofs has been performed of the properties of the protection model of seL4, a version of L4.This has led to what is referred to as third-generation microkernels,

characterised by a security-oriented API with resource access controlled by capabilities

Capability-based security

Capability-based security is a concept in the design of secure computing systems, one of the existing security models. A capability is a communicable, unforgeable token of authority. It refers to a value that references an object along with an associated set of access rights...

, virtualization as a first-class concern, novel approaches to kernel resource management,

and a design goal of suitability for formal analysis

Formal methods

In computer science and software engineering, formal methods are a particular kind of mathematically-based techniques for the specification, development and verification of software and hardware systems...

, besides the usual goal of high performance. Examples are Coyotos

Coyotos

Coyotos is a capability-based security-focused microkernel operating system developed by The EROS Group, LLC. It is a successor to the EROS system that was created at the University of Pennsylvania and Johns Hopkins University.- History :...

, seL4, Nova,

and Fiasco.OC.

In the case of seL4, complete formal verification of the implementation has been achieved, i.e. a mathematical proof that the kernel's implementation is consistent with its formal specification. This provides a guarantee that the properties proved about the API actually hold for the real kernel, a degree of assurance which goes beyond even CC EAL7.

Nanokernel

The term nanokernel or picokernel historically referred to:- A kernel where the total amount of kernel code, i.e. code executing in the privileged mode of the hardware, is very small. The term picokernel was sometimes used to further emphasize small size. The term nanokernel was coined by Jonathan S. Shapiro in the paper The KeyKOS NanoKernel Architecture. It was a sardonic response to Mach, which claimed to be a microkernel while being monolithic, essentially unstructured, and slower than the systems it sought to replace. Subsequent reuse of and response to the term, including the picokernel coinage, suggest that the point was largely missed. Both nanokernel and picokernel have subsequently come to have the same meaning expressed by the term microkernel.

- A virtualization layer underneath an operating system; this is more correctly referred to as a hypervisorHypervisorIn computing, a hypervisor, also called virtual machine manager , is one of many hardware virtualization techniques that allow multiple operating systems, termed guests, to run concurrently on a host computer. It is so named because it is conceptually one level higher than a supervisory program...

. - A hardware abstraction layer that forms the lowest-level part of a kernel, sometimes used to provide real-timeReal-time computingIn computer science, real-time computing , or reactive computing, is the study of hardware and software systems that are subject to a "real-time constraint"— e.g. operational deadlines from event to system response. Real-time programs must guarantee response within strict time constraints...

functionality to normal OS's, like Adeos.

There is also at least one case where the term nanokernel is used to refer not to a small kernel, but one that supports a nanosecond

Nanosecond

A nanosecond is one billionth of a second . One nanosecond is to one second as one second is to 31.7 years.The word nanosecond is formed by the prefix nano and the unit second. Its symbol is ns....

clock resolution.

See also

- Kernel (computer science)

- ExokernelExokernelExokernel is an operating system kernel developed by the MIT Parallel and Distributed Operating Systems group, and also a class of similar operating systems....

, a research kernel architecture with a more minimalist approach to kernel technology. - Hybrid kernelHybrid kernelA hybrid kernel is a kernel architecture based on combining aspects of microkernel and monolithic kernel architectures used in computer operating systems. The category is controversial due to the similarity to monolithic kernel; the term has been dismissed by Linus Torvalds as simple marketing...

- Monolithic kernelMonolithic kernelA monolithic kernel is an operating system architecture where the entire operating system is working in the kernel space and alone as supervisor mode...

- Loadable kernel moduleLoadable Kernel ModuleIn computing, a loadable kernel module is an object file that contains code to extend the running kernel, or so-called base kernel, of an operating system...

- Exokernel

- Trusted computing baseTrusted computing baseThe trusted computing base of a computer system is the set of all hardware, firmware, and/or software components that are critical to its security, in the sense that bugs or vulnerabilities occurring inside the TCB might jeopardize the security properties of the entire system...

Further reading

- scientific articles about microkernels (on CiteSeerCiteSeerCiteSeer was a public search engine and digital library for scientific and academic papers. It is often considered to be the first automated citation indexing system and was considered a predecessor of academic search tools such as Google Scholar and Microsoft Academic Search. It was replaced by...

), including:- - the basic QNX reference.

- -the basic reliable reference.

- - the basic Mach reference.

- MicroKernel page from the Portland Pattern RepositoryPortland Pattern RepositoryThe Portland Pattern Repository is a repository for computer programming design patterns. It was accompanied by a companion website, WikiWikiWeb, which was the world's first wiki....

- The Tanenbaum–Torvalds debate

- The Tanenbaum-Torvalds Debate, 1992.01.29

- Tanenbaum, A. S. "Can We Make Operating Systems Reliable and Secure?".

- Torvalds, L. Linus Torvalds about the microkernels again, 2006.05.09

- Shapiro, J. "Debunking Linus's Latest".

- Tanenbaum, A. S. "Tanenbaum-Torvalds Debate: Part II".