Location estimation in sensor networks


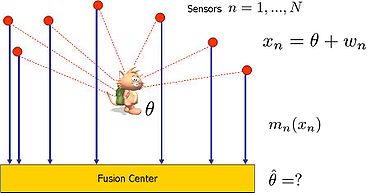

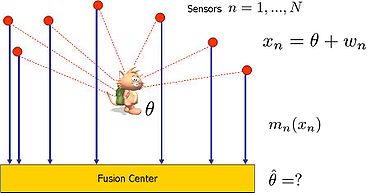

Location estimation in wireless sensor networks is the problem of estimating the location of an object from a set of noisy measurements, when the measurements are acquired in a distributed manner by a set of sensors.




Motivation

Many civilian and military applications require monitoring that can identify objects in a specific area, such as monitoring the front entrance of a private house with a single camera. Monitored areas that are large relative to the objects of interest often require multiple sensors (e.g., infrared detectors) at multiple locations. A centralized observer or computer application monitors the sensors. The power and bandwidth requirements of the communication call for efficient design of the sensors, transmission, and processing.

The CodeBlue system (http://www.eecs.harvard.edu/~mdw/proj/codeblue/) of Harvard University is an example in which a large number of sensors distributed among hospital facilities allows staff to locate a patient in distress. In addition, the sensor array enables online recording of medical information while allowing the patient to move around. Military applications (e.g., locating an intruder in a secured area) are also good candidates for deploying a wireless sensor network.

Setting

Let $\theta$ denote the position of interest. A set of $N$ sensors acquire measurements $x_n = \theta + w_n$ contaminated by an additive noise $w_n$ that follows some known or unknown probability density function (PDF). The sensors transmit measurements to a central processor. The $n$th sensor encodes $x_n$ by a function $m_n(x_n)$. The application processing the data applies a pre-defined estimation rule $\hat{\theta} = f(m_1(x_1), \ldots, m_N(x_N))$. The set of message functions $m_n$, $1 \le n \le N$, and the fusion rule $f$ are designed to minimize the estimation error, for example by minimizing the mean squared error (MSE), $\mathbb{E}\|\theta - \hat{\theta}\|^2$.

Ideally, sensors transmit their measurements $x_n$ exactly to the processing center, that is, $m_n(x_n) = x_n$. In this setting, the maximum likelihood estimator (MLE) $\hat{\theta} = \frac{1}{N}\sum_{n=1}^{N} x_n$ is an unbiased estimator whose MSE is $\mathbb{E}\|\theta - \hat{\theta}\|^2 = \operatorname{var}(\hat{\theta}) = \frac{\sigma^2}{N}$, assuming white Gaussian noise $w_n \sim \mathcal{N}(0, \sigma^2)$. The next sections suggest alternative designs when the sensors are bandwidth constrained to 1-bit transmission, that is, $m_n(x_n) = 0$ or $1$.
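The unconstrained baseline can be checked numerically. The following is a minimal sketch, assuming scalar positions and white Gaussian noise; the function names and parameter values are illustrative and not part of the original description. It simulates sensors that forward their raw measurements and compares the empirical MSE of the sample mean with $\sigma^2/N$.

```python
import random
import statistics


def sample_mean_estimate(theta, sigma, n_sensors, rng):
    """Unconstrained case: every sensor forwards x_n = theta + w_n unchanged."""
    measurements = [theta + rng.gauss(0.0, sigma) for _ in range(n_sensors)]
    return sum(measurements) / n_sensors


def empirical_mse(theta, sigma, n_sensors, trials, seed=0):
    """Average squared error of the sample mean over independent trials."""
    rng = random.Random(seed)
    squared_errors = [
        (sample_mean_estimate(theta, sigma, n_sensors, rng) - theta) ** 2
        for _ in range(trials)
    ]
    return statistics.fmean(squared_errors)


if __name__ == "__main__":
    theta, sigma, n_sensors = 2.0, 1.0, 50  # hypothetical values
    print("empirical MSE:", empirical_mse(theta, sigma, n_sensors, trials=2000))
    print("sigma^2 / N  :", sigma ** 2 / n_sensors)
```

The two printed numbers should be close to each other; the gap shrinks as the number of trials grows.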

Known noise PDF

We begin with an example of Gaussian noise $w_n \sim \mathcal{N}(0, \sigma^2)$, for which a suggested system design is as follows:

$$m_n(x_n) = I(x_n - \tau) = \begin{cases} 1 & x_n > \tau \\ 0 & x_n \le \tau \end{cases}$$

$$\hat{\theta} = \tau - F^{-1}\left(\frac{1}{N}\sum_{n=1}^{N} m_n(x_n)\right), \qquad F(x) = \frac{1}{\sqrt{2\pi}\,\sigma}\int_x^{\infty} e^{-w^2/2\sigma^2}\,dw$$

Here $\tau$ is a parameter leveraging our prior knowledge of the approximate location of $\theta$. In this design, the random value of $m_n(x_n)$ is distributed Bernoulli with parameter $q = F(\tau - \theta)$. The processing center averages the received bits to form an estimate $\hat{q}$ of $q$, which is then used to find an estimate of $\theta$. It can be verified that for the optimal (and infeasible) choice of $\tau = \theta$ the variance of this estimator is $\frac{\pi\sigma^2}{2N}$, which is only $\pi/2$ times the variance of the MLE without the bandwidth constraint. The variance increases as $\tau$ deviates from the real value of $\theta$, but it can be shown that as long as $|\tau - \theta| \sim \sigma$ the factor in the MSE remains approximately 2. Choosing a suitable value for $\tau$ is a major disadvantage of this method, since our model does not assume prior knowledge about the approximate location of $\theta$. A coarse estimate can be used to overcome this limitation; however, it requires additional hardware in each of the sensors.
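The one-bit threshold design can be sketched in a few lines. This is an illustrative simulation under the assumptions of this section (Gaussian noise with known $\sigma$, a single threshold $\tau$); the function name and the clipping of $\hat{q}$ away from 0 and 1 are additions made here so that the inverse tail function stays finite. It relies on `statistics.NormalDist` from the Python standard library, using $F^{-1}(q) = \sigma\,\Phi^{-1}(1 - q)$, where $\Phi$ is the standard normal CDF.

```python
import random
from statistics import NormalDist


def one_bit_estimate(theta, sigma, tau, n_sensors, rng):
    """Each sensor sends 1 if its measurement exceeds tau, else 0."""
    bits = [1 if theta + rng.gauss(0.0, sigma) > tau else 0
            for _ in range(n_sensors)]
    q_hat = sum(bits) / n_sensors
    # Assumption: clip q_hat so the inverse tail function is defined.
    q_hat = min(max(q_hat, 0.5 / n_sensors), 1.0 - 0.5 / n_sensors)
    # F(x) = P(w > x) = 1 - Phi(x / sigma), so F^{-1}(q) = sigma * Phi^{-1}(1 - q).
    f_inverse = sigma * NormalDist().inv_cdf(1.0 - q_hat)
    return tau - f_inverse


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical values: true position 1.0, threshold guessed at 1.2.
    print(one_bit_estimate(theta=1.0, sigma=1.0, tau=1.2, n_sensors=20000, rng=rng))
```

With the threshold within about one $\sigma$ of the true position, the printed estimate should land close to the true value even though each sensor contributes a single bit.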

A system design for an arbitrary (but known) noise PDF has also been proposed. In this setting it is assumed that both $\theta$ and the noise $w_n$ are confined to some known interval $[-U, U]$. The resulting estimator also reaches an MSE which is a constant factor times $\frac{\sigma^2}{N}$. In this method, the prior knowledge of $U$ replaces the parameter $\tau$ of the previous approach.

Unknown noise parameters

A noise model may sometimes be available while the exact PDF parameters are unknown (e.g., a Gaussian PDF with unknown $\sigma$). The idea proposed for this setting is to use two thresholds $\tau_1, \tau_2$, such that $N/2$ sensors are designed with $m_A(x) = I(x - \tau_1)$, and the other $N/2$ sensors use $m_B(x) = I(x - \tau_2)$. The processing center estimation rule is generated as follows:

$$\hat{q}_1 = \frac{2}{N}\sum_{n=1}^{N/2} m_A(x_n), \qquad \hat{q}_2 = \frac{2}{N}\sum_{n=1+N/2}^{N} m_B(x_n)$$

$$\hat{\theta} = \frac{F^{-1}(\hat{q}_2)\,\tau_1 - F^{-1}(\hat{q}_1)\,\tau_2}{F^{-1}(\hat{q}_2) - F^{-1}(\hat{q}_1)}, \qquad F(x) = \frac{1}{\sqrt{2\pi}}\int_x^{\infty} e^{-v^2/2}\,dv$$

Here $F$ is the tail function of the standard (unit-variance) normal distribution, so the unknown $\sigma$ cancels in the ratio. As before, prior knowledge is necessary to set values for $\tau_1, \tau_2$ so that the MSE stays within a reasonable factor of the unconstrained MLE variance.
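The two-threshold idea can be illustrated with a short simulation. This sketch assumes Gaussian noise whose standard deviation is used only to generate the data and is never passed to the estimator; the function names and the clipping step are hypothetical additions. Since $F^{-1}(q_i) = (\tau_i - \theta)/\sigma$ for the standard normal tail function $F$, the ratio in the fusion rule eliminates $\sigma$.

```python
import random
from statistics import NormalDist


def standard_tail_inverse(q):
    """Inverse of F(x) = P(Z > x) for a standard normal Z."""
    return NormalDist().inv_cdf(1.0 - q)


def two_threshold_estimate(measurements, tau1, tau2):
    """First half of the sensors compares against tau1, second half against tau2."""
    half = len(measurements) // 2
    group_a, group_b = measurements[:half], measurements[half:]
    q1 = sum(x > tau1 for x in group_a) / len(group_a)
    q2 = sum(x > tau2 for x in group_b) / len(group_b)
    # Assumption: clip the empirical frequencies away from 0 and 1.
    eps = 0.5 / half
    q1 = min(max(q1, eps), 1.0 - eps)
    q2 = min(max(q2, eps), 1.0 - eps)
    a1, a2 = standard_tail_inverse(q1), standard_tail_inverse(q2)
    return (a2 * tau1 - a1 * tau2) / (a2 - a1)


if __name__ == "__main__":
    rng = random.Random(0)
    theta, sigma = 0.5, 2.0  # sigma is unknown to the estimator
    xs = [theta + rng.gauss(0.0, sigma) for _ in range(40000)]
    print(two_threshold_estimate(xs, tau1=-1.0, tau2=2.0))
```

The estimator fails if both groups report the same frequency, which is why the thresholds need to be well separated relative to the noise level.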

Unknown noise PDF

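We now describe a system design for the case in which the structure of the noise PDF is unknown. The following model is considered for this scenario:

$$x_n = \theta + w_n, \quad n = 1, \ldots, N$$

$$\theta \in [-U, U]$$

$$w_n \in \mathcal{P}, \text{ that is, } w_n \text{ is bounded to } [-U, U] \text{ and } \mathbb{E}(w_n) = 0$$

In addition, the message functions are limited to have the form

$$m_n(x_n) = \begin{cases} 1 & x_n \in S_n \\ 0 & x_n \notin S_n \end{cases}$$

where each $S_n$ is a subset of $[-2U, 2U]$. The fusion estimator is also restricted to be linear, i.e., $\hat{\theta} = \sum_{n=1}^{N} \alpha_n m_n(x_n)$.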

The design should set the decision intervals $S_n$ and the coefficients $\alpha_n$. Intuitively, we would allocate $N/2$ sensors to encode the first bit of $\theta$ by setting their decision interval to be $[0, 2U]$, then $N/4$ sensors would encode the second bit by setting their decision interval to $[-U, 0] \cup [U, 2U]$, and so on. It can be shown that these decision intervals and the corresponding set of coefficients $\alpha_n$ produce a universal $\delta$-unbiased estimator, which is an estimator satisfying $|\mathbb{E}(\theta - \hat{\theta})| < \delta$ for every possible value of $\theta \in [-U, U]$ and for every realization of $w_n \in \mathcal{P}$. In fact, this intuitive design of the decision intervals is also optimal in the following sense. The above design requires $N \ge \lceil \log\frac{8U}{\delta} \rceil$ to satisfy the universal $\delta$-unbiased property, while theoretical arguments show that an optimal (and more complex) design of the decision intervals would require $N \ge \lceil \log\frac{4U}{\delta} \rceil$; that is, the number of sensors is nearly optimal. It is also argued that if the targeted MSE $\mathbb{E}\|\theta - \hat{\theta}\|^2 \le \epsilon^2$ uses a small enough $\epsilon$, then this design requires a factor of 4 in the number of sensors to achieve the same variance as the MLE in the unconstrained bandwidth setting.
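The bit-allocation idea can be sketched as follows. This is an illustration in the spirit of the design, not the published estimator: the weights `4U * 2**-i / group_size`, the constant offset `-2U` (which makes the rule affine instead of strictly linear), and the uniform test noise are all assumptions made here. Group $i$ holds roughly $N/2^i$ sensors, each sending bit $i$ of the binary expansion of $(x_n + 2U)/(4U)$, which reproduces the decision intervals $[0, 2U]$, $[-U, 0] \cup [U, 2U]$, and so on.

```python
import random


def message_bit(x, i, U):
    """Bit i (1-based) of the binary expansion of (x + 2U) / (4U)."""
    y = (x + 2.0 * U) / (4.0 * U)      # map [-2U, 2U] onto [0, 1]
    y = min(max(y, 0.0), 1.0 - 1e-12)  # keep y strictly below 1
    return int(y * 2 ** i) % 2


def universal_estimate(theta, U, n_sensors, n_bits, noise, rng):
    """Group i (about n_sensors / 2**i sensors) reports bit i of its own measurement."""
    theta_hat = -2.0 * U               # assumed constant offset
    for i in range(1, n_bits + 1):
        group_size = n_sensors // 2 ** i
        if group_size == 0:
            raise ValueError("not enough sensors for the requested number of bits")
        bits = [message_bit(theta + noise(rng), i, U) for _ in range(group_size)]
        # Assumed weights: bit i carries 4U * 2**-i, averaged over its group.
        theta_hat += 4.0 * U * 2.0 ** -i * sum(bits) / group_size
    return theta_hat


if __name__ == "__main__":
    rng = random.Random(0)
    uniform_noise = lambda r: r.uniform(-0.5, 0.5)  # zero mean, bounded, PDF unknown to the fusion rule
    print(universal_estimate(0.3, 1.0, 2 ** 14, 10, uniform_noise, rng))
```

Because the noise has zero mean, the expected weighted sum of the bits equals the truncated binary expansion of $\mathbb{E}(x_n) = \theta$, so the bias of this sketch is bounded by $4U \cdot 2^{-K}$ for $K$ bits regardless of the noise PDF.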

Additional information

The design of the sensor array requires optimizing the power allocation as well as minimizing the communication traffic of the entire system. One proposed design incorporates probabilistic quantization in the sensors and a simple optimization program that is solved in the fusion center only once. The fusion center then broadcasts a set of parameters to the sensors that allows them to finalize their design of the messaging functions $m_n(\cdot)$ so as to meet the energy constraints. Another work employs a similar approach to address distributed detection in wireless sensor arrays.

External links

- CodeBlue (http://www.eecs.harvard.edu/~mdw/proj/codeblue/): Harvard group applying wireless sensor network technology to a range of medical applications.
