Minifloat

In computing, minifloats are floating point values represented with very few bits. Predictably, they are not well suited for general-purpose numerical calculations. They are used for special purposes, most often in computer graphics, where iterations are small and precision has aesthetic effects. Additionally, they are frequently encountered as a pedagogical tool in computer science courses to demonstrate the properties and structures of floating point arithmetic and IEEE 754 numbers.

Minifloats with 16 bits are half-precision numbers (as opposed to single and double precision). There are also minifloats with 8 bits or even fewer.

Minifloats can be designed following the principles of the IEEE 754 standard. In this case they must obey the (not explicitly written) rules for the boundary between subnormal and normal numbers, and they must have special patterns for infinity and NaN. Normalized numbers are stored with a biased exponent. The 2008 revision of the standard, IEEE 754-2008, includes a 16-bit binary minifloat format (binary16).
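The IEEE 754 principles listed above (biased exponent, subnormals at the smallest exponent field, infinity and NaN at the largest) can be sketched as a small decoder. This is an illustrative sketch, not a reference implementation: the function name `decode_minifloat` and its parameters are made up for this example, and it assumes a layout with one sign bit followed by the exponent field and then the mantissa field.

```python
import math

def decode_minifloat(bits, exp_bits, man_bits, bias):
    """Decode an IEEE 754-style pattern: 1 sign bit, exp_bits, man_bits."""
    man = bits & ((1 << man_bits) - 1)
    exp = (bits >> man_bits) & ((1 << exp_bits) - 1)
    sign = -1.0 if (bits >> (exp_bits + man_bits)) & 1 else 1.0
    frac = man / (1 << man_bits)

    if exp == (1 << exp_bits) - 1:
        # Largest exponent field: infinity (mantissa 0) or NaN (otherwise).
        return sign * math.inf if man == 0 else math.nan
    if exp == 0:
        # Subnormal: no implicit leading 1, exponent fixed at 1 - bias.
        return sign * frac * 2.0 ** (1 - bias)
    # Normalized: implicit leading 1 and biased exponent.
    return sign * (1.0 + frac) * 2.0 ** (exp - bias)

# Half precision (binary16) has 5 exponent bits, 10 mantissa bits, bias 15.
print(decode_minifloat(0x3C00, 5, 10, 15))  # 1.0
print(decode_minifloat(0x7BFF, 5, 10, 15))  # 65504.0
```

The same function covers any field widths, so an 8-bit format only needs different `exp_bits`, `man_bits` and `bias` arguments.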

The Radeon R300 and R420 GPUs used an "fp24" floating-point format with 7 bits of exponent and 16 bits (+1 implicit) of mantissa.

In the G.711 standard for audio companding designed by ITU-T, the data encoding with the A-law essentially encodes a 13-bit signed integer as a 1.3.4 minifloat.

In computer graphics minifloats are sometimes used to represent only integral values. If at the same time subnormal values should exist, the least subnormal number has to be 1. This statement can be used to calculate the bias value. The following example demonstrates the calculation as well as the underlying principles.

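Example

A minifloat in one byte (8 bits) with one sign bit, four exponent bits and three mantissa bits (in short, a 1.4.3.−2 minifloat) is to be used to represent integral values. All IEEE 754 principles should be valid. The only free parameter is the bias, which will come out as −2. The unknown exponent is called x for the moment.

Numbers in a different base are marked as ...(base), for example 101(2) = 5. The bit patterns have spaces to visualize their parts.

Subnormal numbers

The mantissa is extended with 0.:

0 0000 001 = 0.001(2) × 2^x = 0.125 × 2^x = 1 (least subnormal number)

...

0 0000 111 = 0.111(2) × 2^x = 0.875 × 2^x = 7 (greatest subnormal number)

Normalized numbers

The mantissa is extended with 1.:

0 0001 000 = 1.000(2) × 2^x = 1 × 2^x = 8 (least normalized number)

0 0001 001 = 1.001(2) × 2^x = 1.125 × 2^x = 9

...

0 0010 000 = 1.000(2) × 2^(x+1) = 1 × 2^(x+1) = 16

0 0010 001 = 1.001(2) × 2^(x+1) = 1.125 × 2^(x+1) = 18

...

0 1110 000 = 1.000(2) × 2^(x+13) = 1.000 × 2^(x+13) = 65536

0 1110 001 = 1.001(2) × 2^(x+13) = 1.125 × 2^(x+13) = 73728

...

0 1110 110 = 1.110(2) × 2^(x+13) = 1.750 × 2^(x+13) = 114688

0 1110 111 = 1.111(2) × 2^(x+13) = 1.875 × 2^(x+13) = 122880 (greatest normalized number)

Infinity

0 1111 000 = +infinity

1 1111 000 = −infinity

If the exponent field were not treated specially, the value would be

0 1111 000 = 1.000(2) × 2^(x+14) = 2^17 = 131072

Not a Number

x 1111 yyy = NaN (if yyy ≠ 000)

Without the IEEE 754 special handling of the largest exponent, the greatest possible value would be

0 1111 111 = 1.111(2) × 2^(x+14) = 1.875 × 2^17 = 245760

Value of the bias

If the least subnormal value (0 0000 001 above) should be 1, the value of x has to be x = 3. Therefore the bias has to be −2; that is, every stored exponent has to be decreased by −2, or equivalently increased by 2, to get the numerical exponent.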
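Because the least subnormal number is 1 (bias −2, so x = 3), every finite value of this one-byte format is an integer, and decoding needs only integer shifts. The following is a minimal sketch under that assumption; the function name `decode_integral_minifloat` is hypothetical and chosen only for this illustration.

```python
def decode_integral_minifloat(byte):
    """Decode the 1.4.3 minifloat with bias -2 described in the example."""
    sign = -1 if byte & 0x80 else 1
    exp = (byte >> 3) & 0x0F   # four exponent bits
    man = byte & 0x07          # three mantissa bits

    if exp == 0x0F:
        # Largest exponent field: infinity or NaN.
        return sign * float("inf") if man == 0 else float("nan")
    if exp == 0:
        # Subnormal: 0.mmm(2) * 2^3 is just the mantissa as an integer.
        # (Python integers have no negative zero, so -0 decodes to 0.)
        return sign * man
    # Normalized: 1.mmm(2) * 2^(exp + 2) = (8 + man) * 2^(exp - 1).
    return sign * ((8 + man) << (exp - 1))

for pattern in (0b00000001, 0b00000111, 0b00001000, 0b00010001, 0b01110111):
    print(f"{pattern:08b} -> {decode_integral_minifloat(pattern)}")
```

The loop prints 1, 7, 8, 18 and 122880 for the five patterns, matching the corresponding rows of the example.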

Properties of this example

Integral minifloats in one byte have a greater range (±122880) than two's complement integers, whose range is −128 to +127. The greater range is paid for with poor precision, because there are only 4 significant bits (3 stored mantissa bits plus the implicit leading bit), equivalent to slightly more than one decimal place.

There are only 242 different values (if +0 and −0 are regarded as different), because 14 bit patterns represent NaN.

The values between 0 and 16 have the same bit pattern as a minifloat and as a two's complement integer. The first pattern with a different value is 00010001, which is 18 as a minifloat and 17 as a two's complement integer.

This coincidence does not occur at all with negative values, because this minifloat is a signed-magnitude format.
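The counts and the coincidence with two's complement integers stated above can be checked by brute force over all 256 bit patterns. This is only a sketch; the helper `value` is a hypothetical name and handles just the non-negative finite patterns of the 1.4.3.−2 example.

```python
def value(p):
    """Value of a non-negative finite pattern p (0..119) of the example."""
    exp, man = p >> 3, p & 7
    return man if exp == 0 else (8 + man) << (exp - 1)

# NaN: exponent field all ones and a non-zero mantissa, for either sign.
nan_patterns = [p for p in range(256) if (p >> 3) & 0x0F == 0x0F and p & 7]
non_nan = 256 - len(nan_patterns)

# First pattern whose minifloat value differs from its integer value.
first_diff = next(p for p in range(120) if value(p) != p)

print(len(nan_patterns), non_nan)                  # 14 242
print(f"{first_diff:08b}", value(first_diff))      # 00010001 18
```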

The (vertical) real line on the right shows clearly the varying density of the floating point values - a property which is common to any floating point system. This varying density results in a curve similar to the exponential function.

Although the curve may appear smooth, this is not the case. The graph actually consists of distinct points, and these points lie on line segments with discrete slopes. The value of the exponent bits determines the absolute precision of the mantissa bits, and it is this precision that determines the slope of each linear segment.

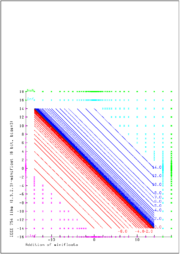

The graphic demonstrates the addition of even smaller (1.3.2.3)-minifloats with 7 bits. This floating point system follows the rules of IEEE 754 exactly. NaN as operand produces always NaN results. Inf − Inf and (−Inf) + Inf results in NaN too (green area). Inf can be augmented and decremented by finite values without change. Sums with finite operands can give an infinite result (i.e. 14.0+3.0 − +Inf as a result is the cyan area, −Inf is the magenta area). The range of the finite operands is filled with the curves x+y=c, where c is always one of the representable float values (blue and red for positive and negative results respectively).

The graphic demonstrates the addition of even smaller (1.3.2.3)-minifloats with 7 bits. This floating point system follows the rules of IEEE 754 exactly. NaN as operand produces always NaN results. Inf − Inf and (−Inf) + Inf results in NaN too (green area). Inf can be augmented and decremented by finite values without change. Sums with finite operands can give an infinite result (i.e. 14.0+3.0 − +Inf as a result is the cyan area, −Inf is the magenta area). The range of the finite operands is filled with the curves x+y=c, where c is always one of the representable float values (blue and red for positive and negative results respectively).
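The behaviour described for the graphic can be reproduced with a small simulation. The sketch below assumes round-to-nearest with ties to even (the IEEE 754 default) and reads 1.3.2.3 as 1 sign bit, 3 exponent bits, 2 mantissa bits and bias 3. The names `finite_values`, `round_to_minifloat` and `add` are hypothetical.

```python
import bisect
import math

EXP_BITS, MAN_BITS, BIAS = 3, 2, 3

def finite_values():
    """All non-negative finite values, in increasing order."""
    vals = []
    for e in range(2 ** EXP_BITS - 1):       # top exponent is Inf/NaN
        for m in range(2 ** MAN_BITS):
            frac = m / 2 ** MAN_BITS
            if e == 0:
                vals.append(frac * 2.0 ** (1 - BIAS))        # subnormal
            else:
                vals.append((1 + frac) * 2.0 ** (e - BIAS))  # normalized
    return vals

# Sentinel 16.0 is one step past the largest finite value (14.0).
GRID = finite_values() + [16.0]

def round_to_minifloat(s):
    if math.isnan(s) or math.isinf(s):
        return s
    a = abs(s)
    if a >= GRID[-1]:
        return math.copysign(math.inf, s)
    i = bisect.bisect_right(GRID, a) - 1     # GRID[i] <= a < GRID[i + 1]
    lo, hi = GRID[i], GRID[i + 1]
    if a - lo < hi - a:
        r = lo
    elif a - lo > hi - a:
        r = hi
    else:
        r = lo if i % 2 == 0 else hi         # tie: even mantissa wins
    if r == GRID[-1]:
        r = math.inf                         # rounded past the maximum
    return math.copysign(r, s)

def add(x, y):
    return round_to_minifloat(x + y)

print(add(14.0, 3.0))            # inf
print(add(math.inf, -math.inf))  # nan
print(add(math.inf, 5.0))        # inf
```

Every representable value is a multiple of 1/16 no larger than 14, so the intermediate sum `x + y` is exact in Python floats and only the final rounding step loses information.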
