Specified complexity


Specified complexity is an argument proposed by William Dembski and used by him and others to promote intelligent design. According to Dembski, the concept is intended to formalize a property that singles out patterns that are both specified and complex. Dembski states that specified complexity is a reliable marker of design by an intelligent agent, a central tenet of intelligent design, which Dembski argues for in opposition to modern evolutionary theory. The concept of specified complexity is widely regarded as mathematically unsound and has not been the basis for further independent work in information theory, the theory of complex systems, or biology. Specified complexity is one of the two main arguments used by intelligent design proponents, the other being irreducible complexity.

In Dembski's terminology, a specified pattern is one that admits short descriptions, whereas a complex pattern is one that is unlikely to occur by chance. Dembski argues that specified complexity cannot exist in patterns displayed by configurations formed by unguided processes. Therefore, Dembski argues, the presence of specified complex patterns in living things indicates some kind of guidance in their formation, which is indicative of intelligence. Dembski further argues that the no free lunch theorems rigorously show that evolutionary algorithms cannot select or generate configurations of high specified complexity.

In intelligent design literature, an intelligent agent is one that chooses between different possibilities and has, by supernatural means and methods, caused life to arise. Specified complexity underlies what Dembski terms an "explanatory filter", which can recognize design by detecting complex specified information (CSI). The filter is based on the premise that the categories of regularity, chance, and design are, according to Dembski, "mutually exclusive and exhaustive." Complex specified information detects design because it detects what characterizes intelligent agency: the actualization of one among many competing possibilities.

A study by Wesley Elsberry and Jeffrey Shallit states that "Dembski's work is riddled with inconsistencies, equivocation, flawed use of mathematics, poor scholarship, and misrepresentation of others' results". Another objection concerns Dembski's calculation of probabilities. According to Martin Nowak, a Harvard professor of mathematics and evolutionary biology, "We cannot calculate the probability that an eye came about. We don't have the information to make the calculation". Critics also reject applying specified complexity to infer design as an argument from ignorance.

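Orgel's original use

The term "specified complexity" was originally coined by origin of life researcher Leslie Orgel to denote what distinguishes living things from non-living things:

In brief, living organisms are distinguished by their specified complexity. Crystals are usually taken as the prototypes of simple well-specified structures, because they consist of a very large number of identical molecules packed together in a uniform way. Lumps of granite or random mixtures of polymers are examples of structures that are complex but not specified. The crystals fail to qualify as living because they lack complexity; the mixtures of polymers fail to qualify because they lack specificity.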

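The term was later employed by physicist Paul Davies in a similar manner:

Living organisms are mysterious not for their complexity per se, but for their tightly specified complexity.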

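Dembski's definition

For Dembski, specified complexity is a property which can be observed in living things. However, whereas Orgel used the term for biological features which are considered in science to have arisen through a process of evolution, Dembski says that it describes features which cannot form through "undirected" evolution, and concludes that it allows one to infer intelligent design. While Orgel employed the concept in a qualitative way, Dembski's use is intended to be quantitative. Dembski's use of the concept dates to his 1998 monograph The Design Inference. Specified complexity is fundamental to his approach to intelligent design, and each of his subsequent books has also dealt significantly with the concept. He has stated that, in his opinion, "if there is a way to detect design, specified complexity is it."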

Dembski asserts that specified complexity is present in a configuration when it can be described by a pattern that displays a large amount of independently specified information and is also complex, which he defines as having a low probability of occurrence. He provides the following examples to demonstrate the concept: "A single letter of the alphabet is specified without being complex. A long sentence of random letters is complex without being specified. A Shakespearean sonnet is both complex and specified."

In his earlier papers Dembski defined complex specified information (CSI) as being present in a specified event whose probability did not exceed 1 in $10^{150}$, which he calls the universal probability bound. In that context, "specified" meant what in later work he called "pre-specified", that is, specified before any information about the outcome is known. The value of the universal probability bound corresponds to the inverse of the upper limit of "the total number of [possible] specified events throughout cosmic history," as calculated by Dembski. Any specified event whose probability falls below this bound exhibits CSI. The terms "specified complexity" and "complex specified information" are used interchangeably. In more recent papers Dembski has redefined the universal probability bound with reference to another number, corresponding to the total number of bit operations that could possibly have been performed in the entire history of the universe.

Dembski asserts that CSI exists in numerous features of living things, such as DNA and other functional biological molecules, and argues that it cannot be generated by the only known natural mechanisms of physical law and chance, or by their combination. He argues that this is so because laws can only shift around or lose information, but do not produce it, and chance can produce complex unspecified information, or simple specified information, but not CSI; he provides a mathematical analysis that he claims demonstrates that law and chance working together cannot generate CSI, either. Moreover, he claims that CSI is holistic, with the whole being greater than the sum of the parts, and that this decisively eliminates Darwinian evolution as a possible means of its creation. Dembski maintains that by process of elimination, CSI is best explained as being due to intelligence, and is therefore a reliable indicator of design.

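Law of conservation of information

Dembski formulates and proposes a law of conservation of information as follows:

This strong proscriptive claim, that natural causes can only transmit CSI but never originate it, I call the Law of Conservation of Information.

Immediate corollaries of the proposed law are the following:

- The specified complexity in a closed system of natural causes remains constant or decreases.

- The specified complexity cannot be generated spontaneously, originate endogenously or organize itself (as these terms are used in origins-of-life research).

- The specified complexity in a closed system of natural causes either has been in the system eternally or was at some point added exogenously (implying that the system, though now closed, was not always closed).

- In particular any closed system of natural causes that is also of finite duration received whatever specified complexity it contains before it became a closed system.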

Dembski notes that the term "Law of Conservation of Information" was previously used by Peter Medawar in his book The Limits of Science (1984) "to describe the weaker claim that deterministic laws cannot produce novel information." The actual validity and utility of Dembski's proposed law are uncertain; it is neither widely used by the scientific community nor cited in mainstream scientific literature. A 2002 essay by Erik Tellgren provided a mathematical rebuttal of Dembski's law and concludes that it is "mathematically unsubstantiated."

as formulated by Ronald Fisher

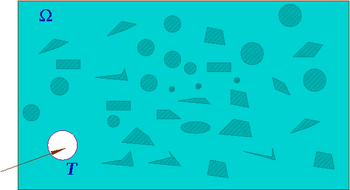

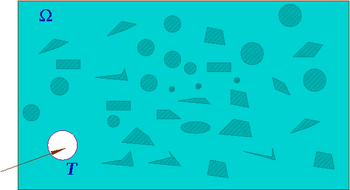

. In general terms, Dembski proposes to view design inference as a statistical test to reject a chance hypothesis P on a space of outcomes Ω.

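Dembski's proposed test is based on the Kolmogorov complexity of a pattern T that is exhibited by an event E that has occurred. Mathematically, E is a subset of Ω, the pattern T specifies a set of outcomes in Ω, and E is a subset of T. Quoting Dembski:

Thus, the event E might be a die toss that lands six and T might be the composite event consisting of all die tosses that land on an even face.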
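Dembski's die example can be written out directly in set terms. The sketch below is illustrative only: it assumes a fair die, so that the chance hypothesis P is uniform over Ω, and the names OMEGA, E, T and prob are hypothetical. It checks the nesting E ⊆ T ⊆ Ω and shows that the pattern T (probability 1/2) is much more probable than the single outcome E (probability 1/6).

```python
from fractions import Fraction

# Sample space for one toss of a six-sided die.
OMEGA = frozenset(range(1, 7))
# The event that occurred: the die lands six.
E = frozenset({6})
# The pattern (composite event): the die lands on an even face.
T = frozenset(face for face in OMEGA if face % 2 == 0)


def prob(event: frozenset) -> Fraction:
    """Probability of an event under a uniform chance hypothesis on OMEGA (fair-die assumption)."""
    return Fraction(len(event), len(OMEGA))


# The set-up requires E to be a subset of T, and T a subset of the outcome space.
assert E <= T <= OMEGA
print(prob(E), prob(T))  # 1/6 1/2
```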

Kolmogorov complexity provides a measure of the computational resources needed to specify a pattern (such as a DNA sequence or a sequence of alphabetic characters). Given a pattern T, the number of patterns whose Kolmogorov complexity is no larger than that of T is denoted by φ(T). The number φ(T) thus provides a ranking of patterns from the simplest to the most complex. For example, for a pattern T which describes the bacterial flagellum, Dembski claims to obtain the upper bound $\varphi(T) \le 10^{20}$.
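Because Kolmogorov complexity is uncomputable, φ(T) cannot be evaluated exactly. As a rough illustration only, the sketch below swaps in compressed length under zlib, a computable upper bound on description length, and counts how many patterns in a small hypothetical candidate list are no more complex than a target. This is not Dembski's procedure (his flagellum estimate counts English concepts instead), the function names are invented for the example, and randbytes requires Python 3.9 or later.

```python
import random
import zlib


def description_length(pattern: bytes) -> int:
    """Compressed size in bytes: a computable upper-bound proxy for Kolmogorov complexity."""
    return len(zlib.compress(pattern, 9))


def phi_proxy(target: bytes, candidates: list[bytes]) -> int:
    """Count candidates whose proxy complexity is no larger than the target's (stand-in for phi(T))."""
    limit = description_length(target)
    return sum(1 for c in candidates if description_length(c) <= limit)


rng = random.Random(0)          # fixed seed so the example is repeatable
regular = b"A" * 1000           # highly regular: a short description suffices
noisy = rng.randbytes(1000)     # random bytes: essentially incompressible
candidates = [regular, b"AB" * 500, noisy, rng.randbytes(1000)]

print(description_length(regular) < description_length(noisy))  # True
print(phi_proxy(regular, candidates), phi_proxy(noisy, candidates))
```

Regular patterns receive a small proxy value and random ones a large value, so a low count marks a pattern as simple in this ranking.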

Dembski defines specified complexity of the pattern T under the chance hypothesis P as

$$\sigma = -\log_2\left[R \times \varphi(T) \times P(T)\right],$$

where P(T) is the probability of observing the pattern T and R is the number of "replicational resources" available "to witnessing agents". R corresponds roughly to repeated attempts to create and discern a pattern. Dembski then asserts that R can be bounded by $10^{120}$. This number is supposedly justified by a result of Seth Lloyd, who determined that the number of elementary logic operations that could have been performed in the universe over its entire history cannot exceed $10^{120}$ operations on $10^{90}$ bits.
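The definition can be evaluated numerically. Because numbers such as $10^{-150}$ underflow ordinary floating-point arithmetic when multiplied, the sketch works with base-10 logarithms of R, φ(T) and P(T). The function name is hypothetical, R and φ(T) use the figures quoted in this article, and the P(T) value is made up purely for illustration.

```python
import math


def specified_complexity(log10_R: float, log10_phi: float, log10_P: float) -> float:
    """Evaluate sigma = -log2(R * phi(T) * P(T)) from base-10 logarithms of each factor.

    Working in log space avoids float overflow/underflow for values such as 10**-150.
    """
    log10_product = log10_R + log10_phi + log10_P
    return -log10_product * math.log2(10)  # change of base: log2(x) = log10(x) * log2(10)


# R = 10**120 and phi(T) <= 10**20 are the figures quoted in the text;
# P(T) = 10**-150 is a hypothetical value chosen only for illustration.
sigma = specified_complexity(120, 20, -150)
print(round(sigma, 2))  # 33.22, which exceeds 1, so Dembski's criterion would "infer design"
```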

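Dembski's main claim is that the following test can be used to infer design for a configuration: there is a target pattern T that applies to the configuration and whose specified complexity exceeds 1. This condition can be restated as the inequality

$$10^{120} \times \varphi(T) \times P(T) < \frac{1}{2}.$$

Dembski's explanation of specified complexity

Dembski's expression σ is unrelated to any known concept in information theory, though he claims he can justify its relevance as follows: an intelligent agent S witnesses an event E and assigns it to some reference class of events Ω, and within this reference class considers it as satisfying a specification T. Now consider the quantity φ(T) × P(T) (where P is the "chance" hypothesis):

Think of S as trying to determine whether an archer, who has just shot an arrow at a large wall, happened to hit a tiny target on that wall by chance. The arrow, let us say, is indeed sticking squarely in this tiny target. The problem, however, is that there are lots of other tiny targets on the wall. Once all those other targets are factored in, is it still unlikely that the archer could have hit any of them by chance?

In addition, we need to factor in what I call the replicational resources associated with T, that is, all the opportunities to bring about an event of T's descriptive complexity and improbability by multiple agents witnessing multiple events.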

According to Dembski, the number of such "replicational resources" can be bounded by "the maximal number of bit operations that the known, observable universe could have performed throughout its entire multi-billion year history", which according to Lloyd is $10^{120}$.
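Restating the design test σ > 1 as an inequality takes one step, because $-\log_2$ is strictly decreasing. A sketch of the algebra, taking $R = 10^{120}$ as Dembski does:

$$
\sigma > 1
\iff -\log_2\left[R \times \varphi(T) \times P(T)\right] > 1
\iff R \times \varphi(T) \times P(T) < 2^{-1}
\iff 10^{120} \times \varphi(T) \times P(T) < \frac{1}{2}.
$$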

However, according to Elsberry and Shallit, "[specified complexity] has not been defined formally in any reputable peer-reviewed mathematical journal, nor (to the best of our knowledge) adopted by any researcher in information theory."

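Calculation of specified complexity

Thus far, Dembski's only attempt at calculating the specified complexity of a naturally occurring biological structure is in his book No Free Lunch, for the bacterial flagellum of E. coli. This structure can be described by the pattern "bidirectional rotary motor-driven propeller". Dembski estimates that there are at most $10^{20}$ patterns described by four basic concepts or fewer, and so his test for design will apply if

$$P(T) < \frac{1}{2} \times 10^{-140}.$$

However, Dembski says that the precise calculation of the relevant probability "has yet to be done", although he also claims that some methods for calculating these probabilities "are now in place".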

These methods assume that all of the constituent parts of the flagellum must have been generated completely at random, a scenario that biologists do not seriously consider. He justifies this approach by appealing to Michael Behe's concept of "irreducible complexity" (IC), which leads him to assume that the flagellum could not come about by any gradual or step-wise process. The validity of Dembski's particular calculation is thus wholly dependent on Behe's IC concept, and therefore susceptible to its criticisms, of which there are many.

To arrive at the ranking upper bound of $10^{20}$ patterns, Dembski considers a specification pattern for the flagellum defined by the (natural language) predicate "bidirectional rotary motor-driven propeller", which he regards as being determined by four independently chosen basic concepts. He furthermore assumes that English has the capability to express at most $10^5$ basic concepts (an upper bound on the size of a dictionary). Dembski then claims that we can obtain the rough upper bound of

$$10^{20} = 10^5 \times 10^5 \times 10^5 \times 10^5$$

for the set of patterns described by four basic concepts or fewer.
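The arithmetic of Dembski's count can be checked directly. The sketch below assumes, as one reading of his argument, that a pattern is an ordered sequence of one to four concepts drawn from a vocabulary of $10^5$; the constant names are hypothetical. It also rearranges the design inequality to give the probability threshold $P(T) < \frac{1}{2} \times 10^{-140}$ that the flagellum would have to meet.

```python
from fractions import Fraction

VOCABULARY = 10**5    # Dembski's assumed number of English "basic concepts"
MAX_CONCEPTS = 4      # "bidirectional rotary motor-driven propeller" read as four concepts
R = 10**120           # replicational resources, the bound Dembski takes from Lloyd

# Assumption: patterns are ordered sequences of 1 to 4 concepts from the vocabulary.
exact = sum(VOCABULARY**k for k in range(1, MAX_CONCEPTS + 1))
rough = VOCABULARY**MAX_CONCEPTS  # Dembski's rough bound, 10**20

# The design test R * phi(T) * P(T) < 1/2 rearranges to P(T) < 1 / (2 * R * phi(T)).
threshold = Fraction(1, 2 * R * rough)

print(exact)          # 100001000010000100000
print(exact > rough)  # True: shorter patterns add a little to the count
print(threshold == Fraction(1, 2) * Fraction(1, 10**140))  # True
```

Summing over one to four concepts gives slightly more than $10^{20}$, so Dembski's figure is a rough rather than strict bound; the larger the assumed vocabulary, the smaller the resulting probability threshold.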

From the standpoint of Kolmogorov complexity theory, Dembski's calculation is problematic. Quoting Elsberry and Shallit: "Natural language specification without restriction, as Dembski tacitly permits, seems problematic. For one thing, it results in the Berry paradox". These authors add: "We have no objection to natural language specifications per se, provided there is some evident way to translate them to Dembski's formal framework. But what, precisely, is the space of events Ω here?"

When Dembski's mathematical claims on specified complexity are interpreted to make them meaningful and conform to minimal standards of mathematical usage, they usually turn out to be false. Dembski often sidesteps these criticisms by responding that he is not "in the business of offering a strict mathematical proof for the inability of material mechanisms to generate specified complexity". Yet on page 150 of No Free Lunch he claims he can prove his thesis mathematically: "In this section I will present an in-principle mathematical argument for why natural causes are incapable of generating complex specified information." Others have pointed out that a crucial calculation on page 297 of No Free Lunch is off by a factor of approximately $10^{65}$.

Dembski's calculations show how a simple smooth function cannot gain information. He therefore concludes that there must be a designer to obtain CSI. However, natural selection has a branching mapping from one to many (replication) followed by a pruning mapping of the many back down to a few (selection). When information is replicated, some copies can be differently modified while others remain the same, allowing information to increase. These expanding and reducing mappings were not modeled by Dembski. In other words, Dembski's calculations do not model birth and death. This basic flaw in his modeling renders all of Dembski's subsequent calculations and reasoning in No Free Lunch irrelevant because his basic model does not reflect reality. Since the basis of No Free Lunch relies on this flawed argument, the entire thesis of the book collapses.
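The branching-then-pruning structure described above can be shown with a toy evolutionary loop. This is a hedged sketch, not a model of biology and not a measurement of CSI: it assumes bit-string genomes and a hypothetical fitness function that simply counts 1 bits (the standard "OneMax" benchmark), and all parameter values are arbitrary. Each generation copies every genome several times with independent mutations (one to many), then keeps only the fittest (many to few).

```python
import random


def evolve(genome_len=64, pop_size=20, offspring_per_parent=5,
           mutation_rate=0.02, generations=100, seed=0):
    """Toy mutation-selection loop on bit strings; returns the best fitness per generation."""
    rng = random.Random(seed)
    fitness = sum  # hypothetical environment: fitness is the number of 1 bits
    population = [[rng.randint(0, 1) for _ in range(genome_len)] for _ in range(pop_size)]
    history = [max(map(fitness, population))]
    for _ in range(generations):
        # Branching (replication): each genome is copied several times,
        # and each copy flips each bit independently with probability mutation_rate.
        offspring = [
            [bit ^ (rng.random() < mutation_rate) for bit in parent]
            for parent in population
            for _ in range(offspring_per_parent)
        ]
        # Pruning (selection): parents and offspring compete; only pop_size survive.
        pool = population + offspring
        pool.sort(key=fitness, reverse=True)
        population = pool[:pop_size]
        history.append(fitness(population[0]))
    return history


history = evolve()
print(history[0], history[-1])  # best fitness before and after selection
```

Because parents compete with their offspring, the best fitness can never fall, and it typically climbs toward the maximum over the generations.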

According to Martin Nowak, a Harvard professor of mathematics and evolutionary biology, "We cannot calculate the probability that an eye came about. We don't have the information to make the calculation".

Dembski's critics note that specified complexity, as originally defined by Leslie Orgel, is precisely what Darwinian evolution is supposed to create. Critics maintain that Dembski uses "complex" as most people would use "absurdly improbable". They also claim that his argument is a tautology: CSI cannot occur naturally because Dembski has defined it thus. They argue that to successfully demonstrate the existence of CSI, it would be necessary to show that some biological feature undoubtedly has an extremely low probability of occurring by any natural means whatsoever, something which Dembski and others have almost never attempted to do. Such calculations depend on the accurate assessment of numerous contributing probabilities, the determination of which is often necessarily subjective. Hence, CSI can at most provide a "very high probability", but not absolute certainty.

Another criticism refers to the problem of "arbitrary but specific outcomes". For example, if a fair coin is tossed 1000 times, the probability of any particular outcome occurring is $2^{-1000}$, roughly one in $10^{301}$. For any specific outcome of the coin-tossing process, the a priori probability that this pattern occurred is thus about one in $10^{301}$, which is astronomically smaller than Dembski's universal probability bound of one in $10^{150}$. Yet we know that the post hoc probability of its happening is exactly one, since we observed it happening. This is similar to the observation that it is unlikely that any given person will win a lottery, but, eventually, a lottery will have a winner; showing that any particular player is very unlikely to win does not show that it is equally unlikely that someone wins. Similarly, it has been argued that "a space of possibilities is merely being explored, and we, as pattern-seeking animals, are merely imposing patterns, and therefore targets, after the fact."
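The coin-toss arithmetic can be checked with a short sketch, assuming a fair coin; the variable names are illustrative. It compares the probability of one specific sequence of 1000 tosses, $2^{-1000}$, with the $10^{-150}$ bound, and converts the bound into bits.

```python
import math
from fractions import Fraction

TOSSES = 1000
BOUND_LOG10 = -150  # Dembski's earlier universal probability bound, 10**-150

# Exact probability of one particular sequence of fair coin tosses.
p = Fraction(1, 2**TOSSES)
log10_p = -TOSSES * math.log10(2)  # log10 of p, computed without floating-point underflow

print(round(log10_p, 2))         # -301.03
print(log10_p < BOUND_LOG10)     # True: every specific outcome falls below the bound
print(p < Fraction(1, 10**150))  # True, checked with exact rational arithmetic
# The same bound expressed in bits.
print(round(-BOUND_LOG10 * math.log2(10), 1))  # 498.3
```

Every one of the $2^{1000}$ possible sequences falls far below the bound, yet one of them must occur whenever the coin is tossed 1000 times.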

Apart from such theoretical considerations, critics cite reports of evidence of the kind of evolutionary "spontaneous generation" that Dembski claims is too improbable to occur naturally. For example, in 1982, B.G. Hall published research demonstrating that after removing a gene that allows sugar digestion in certain bacteria, those bacteria, when grown in media rich in sugar, rapidly evolve new sugar-digesting enzymes to replace those removed. Another widely cited example is the discovery of nylon-eating bacteria that produce enzymes only useful for digesting synthetic materials that did not exist prior to the invention of nylon in 1935.

Other commentators have noted that evolution through selection is frequently used to design certain electronic, aeronautic and automotive systems which are considered problems too complex for human "intelligent designers". This strongly contradicts the argument that an intelligent designer is required for the most complex systems. Such evolutionary techniques can lead to designs that are difficult to understand or evaluate since no human understands which trade-offs were made in the evolutionary process, something which mimics our poor understanding of biological systems.

Dembski's book No Free Lunch was criticised for not addressing the work of researchers who use computer simulations to investigate artificial life. According to Jeffrey Shallit:






Dembski's explanation of specified complexity

Dembski's expression σ is unrelated to any known concept in information theory, though he claims he can justify its relevance as follows: An intelligent agent S witnesses an event E and assigns it to some reference class of events Ω and within this reference class considers it as satisfying a specification T. Now consider the quantity φ(T) × P(T) (where P is the "chance" hypothesis):

Think of S as trying to determine whether an archer, who has just shot an arrow at a large wall, happened to hit a tiny target on that wall by chance. The arrow, let us say, is indeed sticking squarely in this tiny target. The problem, however, is that there are lots of other tiny targets on the wall. Once all those other targets are factored in, is it still unlikely that the archer could have hit any of them by chance?

In addition, we need to factor in what I call the replicational resources associated with T, that is, all the opportunities to bring about an event of T's descriptive complexity and improbability by multiple agents witnessing multiple events.

According to Dembski, the number of such "replicational resources" can be bounded by "the maximal number of bit operations that the known, observable universe could have performed throughout its entire multi-billion year history", which according to Lloyd is 10¹²⁰.
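One hedged way to read the archer analogy is as a union bound: if a shot can land in any of φ(T) equally improbable targets, each hit with probability P(T), and there are R independent shots, then the chance of at least one hit is at most R × φ(T) × P(T). The sketch below compares that product with the exact probability under the simplifying, hypothetical assumptions that the targets are disjoint and the shots independent; the numbers and function names are illustrative, not Dembski's.

```python
import math


def prob_any_hit(p_target: float, n_targets: int, n_trials: int) -> float:
    """Exact chance that at least one of n_trials independent shots lands in any target.

    Assumes (hypothetically) disjoint targets, each hit with probability p_target per shot.
    """
    p_per_shot = n_targets * p_target  # disjoint targets: their probabilities add
    # 1 - (1 - p)^n, computed with log1p/expm1 to stay accurate for tiny p
    return -math.expm1(n_trials * math.log1p(-p_per_shot))


def union_bound(p_target: float, n_targets: int, n_trials: int) -> float:
    """Upper bound R * phi * P obtained by simply multiplying out the opportunities."""
    return n_trials * n_targets * p_target


p, phi_t, r = 1e-9, 1_000, 10_000  # hypothetical P(T), phi(T), R for illustration
exact = prob_any_hit(p, phi_t, r)
bound = union_bound(p, phi_t, r)
print(exact, bound)
```

When the product is small, as in the regime Dembski is interested in, the bound and the exact value nearly coincide; the bound only becomes loose as the product approaches 1.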

However, according to Elsberry and Shallit, "[specified complexity] has not been defined formally in any reputable peer-reviewed mathematical journal, nor (to the best of our knowledge) adopted by any researcher in information theory."

Calculation of specified complexity

Thus far, Dembski's only attempt at calculating the specified complexity of a naturally occurring biological structure is in his book No Free Lunch, for the bacterial flagellum of E. coli. This structure can be described by the pattern "bidirectional rotary motor-driven propeller". Dembski estimates that there are at most 10²⁰ patterns described by four basic concepts or fewer, and so his test for design will apply if

$$P(T) < \frac{1}{2} \times 10^{-140}.$$
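The flagellum threshold follows from solving the design inequality for P(T): with R = 10¹²⁰ and φ(T) at its claimed maximum of 10²⁰, the test applies when P(T) < 1/(2 × 10¹⁴⁰). The sketch below checks this with exact rational arithmetic; the constant and function names are illustrative, and no actual estimate of P(T) for the flagellum is implied.

```python
from fractions import Fraction

R = 10**120        # replicational resources, as quoted from Lloyd's bound
PHI_MAX = 10**20   # Dembski's claimed upper bound on phi(T) for the flagellum pattern

# sigma > 1  <=>  R * phi(T) * P(T) < 1/2  <=>  P(T) < 1 / (2 * R * phi(T))
threshold = Fraction(1, 2 * R * PHI_MAX)


def design_test_applies(p_t: Fraction) -> bool:
    """Hypothetical helper: does a given P(T) satisfy the inequality at phi(T) = PHI_MAX?"""
    return R * PHI_MAX * p_t < Fraction(1, 2)


print(threshold == Fraction(1, 2) * Fraction(1, 10**140))  # True
```

Using `Fraction` avoids any floating-point rounding, so the boundary case P(T) = 10⁻¹⁴⁰ is classified exactly.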

However, Dembski says that the precise calculation of the relevant probability "has yet to be done", although he also claims that some methods for calculating these probabilities "are now in place".

These methods assume that all of the constituent parts of the flagellum must have been generated completely at random, a scenario that biologists do not seriously consider. He justifies this approach by appealing to Michael Behe's concept of "irreducible complexity" (IC), which leads him to assume that the flagellum could not come about by any gradual or step-wise process. The validity of Dembski's particular calculation is thus wholly dependent on Behe's IC concept, and therefore susceptible to its criticisms, of which there are many.

To arrive at the ranking upper bound of 10²⁰ patterns, Dembski considers a specification pattern for the flagellum defined by the (natural language) predicate "bidirectional rotary motor-driven propeller", which he regards as being determined by four independently chosen basic concepts. He furthermore assumes that English has the capability to express at most 10⁵ basic concepts (an upper bound on the size of a dictionary). Dembski then claims that we can obtain the rough upper bound of

$$10^{20} = 10^5 \times 10^5 \times 10^5 \times 10^5 = \left(10^5\right)^4$$

for the set of patterns described by four basic concepts or fewer.
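The arithmetic behind the bound can be checked directly. The sketch below assumes, for illustration only, that a pattern is an ordered sequence of one to four concepts drawn with repetition from a 10⁵-word vocabulary; under that assumption, counting all lengths up to four gives slightly more than 10²⁰, which is why the figure is only a rough bound.

```python
VOCABULARY = 10**5   # Dembski's assumed upper bound on English basic concepts
MAX_CONCEPTS = 4

# Assumption: patterns are ordered sequences of 1..MAX_CONCEPTS concepts, repetition allowed.
exact = sum(VOCABULARY**k for k in range(1, MAX_CONCEPTS + 1))
rough = VOCABULARY**MAX_CONCEPTS  # (10**5)**4 == 10**20

print(exact)          # 100001000010000100000
print(exact / rough)  # slightly above 1: the four-concept term dominates
```

The shorter patterns add only about 0.001% to the total, so (10⁵)⁴ is the dominant term.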

From the standpoint of Kolmogorov complexity theory, this calculation is problematic. Quoting Elsberry and Shallit: "Natural language specification without restriction, as Dembski tacitly permits, seems problematic. For one thing, it results in the Berry paradox." These authors add: "We have no objection to natural language specifications per se, provided there is some evident way to translate them to Dembski's formal framework. But what, precisely, is the space of events Ω here?"

Criticisms

The soundness of Dembski's concept of specified complexity and the validity of arguments based on this concept are widely disputed. A frequent criticism (see Elsberry and Shallit) is that Dembski has used the terms "complexity", "information" and "improbability" interchangeably. These terms denote quantities that measure properties of things of different types: complexity measures how hard it is to describe an object (such as a bitstring), information measures how close to uniform a random probability distribution is, and improbability measures how unlikely an event is given a probability distribution. When Dembski's mathematical claims on specified complexity are interpreted to make them meaningful and conform to minimal standards of mathematical usage, they usually turn out to be false. Dembski often sidesteps these criticisms by responding that he is not "in the business of offering a strict mathematical proof for the inability of material mechanisms to generate specified complexity". Yet on page 150 of No Free Lunch he claims he can prove his thesis mathematically: "In this section I will present an in-principle mathematical argument for why natural causes are incapable of generating complex specified information." Others have pointed out that a crucial calculation on page 297 of No Free Lunch is off by a factor of approximately 10⁶⁵.
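The distinction between these three quantities can be illustrated with two bitstrings. The sketch below is a hypothetical illustration, not drawn from Elsberry and Shallit: compressed size stands in for descriptive complexity, the probability under fair coin flips stands in for improbability, and Shannon entropy is computed for a distribution rather than for any string. Two strings of equal length are equally improbable yet differ sharply in how hard they are to describe.

```python
import math
import random
import zlib


def compressed_size(bits: str) -> int:
    """Proxy for descriptive complexity: how hard the string is to describe."""
    return len(zlib.compress(bits.encode("ascii"), 9))


def uniform_probability(bits: str) -> float:
    """Improbability view: chance of this exact string under fair, independent coin flips."""
    return 0.5 ** len(bits)


def entropy(p_one: float) -> float:
    """Shannon entropy (bits per symbol) of a coin with P(1) = p_one; a property of the distribution."""
    return -sum(q * math.log2(q) for q in (p_one, 1 - p_one) if q > 0)


rng = random.Random(1)
ordered = "0" * 256
scrambled = "".join(rng.choice("01") for _ in range(256))

# Same improbability, very different descriptive complexity.
print(uniform_probability(ordered) == uniform_probability(scrambled))  # True
print(compressed_size(ordered), compressed_size(scrambled))
# Entropy depends only on the distribution, not on any particular string.
print(entropy(0.5), round(entropy(0.9), 3))  # 1.0 0.469
```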

Dembski's calculations show how a simple smooth function cannot gain information. He therefore concludes that there must be a designer to obtain CSI. However, natural selection has a branching mapping from one to many (replication) followed by a pruning mapping of the many back down to a few (selection). When information is replicated, some copies can be modified in different ways while others remain the same, allowing information to increase. Dembski did not model these one-to-many and many-to-few mappings; in other words, his calculations do not model birth and death. This basic flaw in his modeling renders all of Dembski's subsequent calculations and reasoning in No Free Lunch irrelevant because his basic model does not reflect reality. Since the basis of No Free Lunch relies on this flawed argument, the entire thesis of the book collapses.
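The replicate-then-select mapping described above can be illustrated with a deliberately simple toy loop. The sketch below is hypothetical and is not a model of biology or of any published critique: it is a (1 + λ) evolutionary loop on the textbook "OneMax" problem, where fitness is the number of 1-bits. Each generation one parent is copied into many mutated offspring (one-to-many), and then the best of the offspring and the parent is kept (many-to-one).

```python
import random

GENOME_LEN = 32


def fitness(genome: list[int]) -> int:
    """Hypothetical fitness: number of 1-bits (the toy 'OneMax' landscape)."""
    return sum(genome)


def mutate(genome: list[int], rng: random.Random, rate: float = 1 / GENOME_LEN) -> list[int]:
    """Copy a genome, flipping each bit independently with probability `rate`."""
    return [1 - b if rng.random() < rate else b for b in genome]


def evolve(generations: int, offspring: int = 20, seed: int = 0) -> list[int]:
    """Return the best fitness per generation for a replicate-then-select loop."""
    rng = random.Random(seed)
    parent = [0] * GENOME_LEN
    history = [fitness(parent)]
    for _ in range(generations):
        # Replication: one parent maps to many slightly different copies.
        brood = [mutate(parent, rng) for _ in range(offspring)]
        # Selection: the many are pruned back to one; the unchanged parent competes too.
        parent = max(brood + [parent], key=fitness)
        history.append(fitness(parent))
    return history


history = evolve(generations=200)
print(history[0], history[-1])
```

Because the parent competes with its own offspring, the best fitness can never decrease from one generation to the next, while random variation in the copies supplies the improvements that selection keeps.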

According to Martin Nowak, a Harvard professor of mathematics and evolutionary biology, "We cannot calculate the probability that an eye came about. We don't have the information to make the calculation".

Dembski's critics note that specified complexity, as originally defined by Leslie Orgel, is precisely what Darwinian evolution is supposed to create. Critics maintain that Dembski uses "complex" as most people would use "absurdly improbable". They also claim that his argument is a tautology: CSI cannot occur naturally because Dembski has defined it thus. They argue that to successfully demonstrate the existence of CSI, it would be necessary to show that some biological feature undoubtedly has an extremely low probability of occurring by any natural means whatsoever, something which Dembski and others have almost never attempted to do. Such calculations depend on the accurate assessment of numerous contributing probabilities, the determination of which is often necessarily subjective. Hence, CSI can at most provide a "very high probability", but not absolute certainty.
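Why many subjectively estimated probabilities are a problem can be seen from simple arithmetic: when a final probability is a product of many factors, multiplicative errors in each factor compound. The sketch below uses purely hypothetical numbers (50 factors, each misjudged by a factor of 2) and an illustrative function name; it does not reproduce any published estimate.

```python
import math


def log10_error_factor(n_factors: int, per_factor_error: float) -> float:
    """Orders of magnitude by which a product of n estimated probabilities can be off
    when every factor is misjudged by the same multiplicative factor."""
    return n_factors * math.log10(per_factor_error)


# Hypothetical: 50 contributing probabilities, each known only to within a factor of 2.
print(round(log10_error_factor(50, 2.0), 2))  # 15.05
```

An uncertainty of about fifteen orders of magnitude in the final product is large enough to move a result across a threshold such as 10⁻¹⁵⁰ or not, depending on the estimates chosen.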

Another criticism refers to the problem of "arbitrary but specific outcomes". For example, if a coin is tossed randomly 1000 times, the probability of any particular outcome occurring is roughly one in 10³⁰⁰. For any specific outcome of the coin-tossing process, the a priori probability that this pattern occurred is thus roughly one in 10³⁰⁰, which is astronomically smaller than Dembski's universal probability bound of one in 10¹⁵⁰. Yet we know that the post hoc probability of its happening is exactly one, since we observed it happening. This is similar to the observation that it is unlikely that any given person will win a lottery, but, eventually, a lottery will have a winner; to argue that it is very unlikely that any one player would win is not the same as proving that it is equally unlikely that someone will win. Similarly, it has been argued that "a space of possibilities is merely being explored, and we, as pattern-seeking animals, are merely imposing patterns, and therefore targets, after the fact."
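The coin-tossing figures can be checked exactly. In the sketch below, 2¹⁰⁰⁰ has 302 decimal digits (about 1.07 × 10³⁰¹), consistent with the order-of-magnitude figure of one in 10³⁰⁰, and the probability of any single sequence falls far below one in 10¹⁵⁰. Yet the probabilities of all possible sequences still sum to 1, so some improbable outcome is certain to occur. The constant names are illustrative.

```python
from fractions import Fraction

N_TOSSES = 1000
UNIVERSAL_BOUND = Fraction(1, 10**150)  # Dembski's universal probability bound, as quoted

# A priori probability of any one specific sequence of 1000 fair tosses.
p_specific = Fraction(1, 2**N_TOSSES)

print(len(str(2**N_TOSSES)))          # 302 (2**1000 is about 1.07e301)
print(p_specific < UNIVERSAL_BOUND)   # True
# Yet some sequence always occurs: the 2**1000 equally likely outcomes sum to probability 1.
print(p_specific * 2**N_TOSSES == 1)  # True
```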

Apart from such theoretical considerations, critics cite reports of evidence of the kind of evolutionary "spontaneous generation" that Dembski claims is too improbable to occur naturally. For example, in 1982, B.G. Hall published research demonstrating that after removing a gene that allows sugar digestion in certain bacteria, those bacteria, when grown in media rich in sugar, rapidly evolve new sugar-digesting enzymes to replace those removed. Another widely cited example is the discovery of nylon-eating bacteria that produce enzymes only useful for digesting synthetic materials that did not exist prior to the invention of nylon in 1935.

Other commentators have noted that evolution through selection is frequently used to design certain electronic, aeronautic and automotive systems which are considered problems too complex for human "intelligent designers". This strongly contradicts the argument that an intelligent designer is required for the most complex systems. Such evolutionary techniques can lead to designs that are difficult to understand or evaluate since no human understands which trade-offs were made in the evolutionary process, something which mimics our poor understanding of biological systems.

Dembski's book No Free Lunch was criticised for not addressing the work of researchers who use computer simulations to investigate artificial life. According to Jeffrey Shallit:

The field of artificial life evidently poses a significant challenge to Dembski's claims about the failure of evolutionary algorithms to generate complexity. Indeed, artificial life researchers regularly find their simulations of evolution producing the sorts of novelties and increased complexity that Dembski claims are impossible.

See also

- List of topics characterized as pseudoscience

- Teleological argument

- Texas sharpshooter fallacy

External links

- Not a Free Lunch But a Box of Chocolates - A critique of William Dembski's book No Free Lunch by Richard Wein, from TalkOrigins

- Information Theory and Creationism William Dembski by Rich Baldwin, from Information Theory and Creationism, compiled by Ian Musgrave and Rich Baldwin

- Critique of No Free Lunch by H. Allen Orr from the Boston Review

- Dissecting Dembski's "Complex Specified Information" by Dr. Thomas D. Schneider.

- William Dembski's treatment of the No Free Lunch theorems is written in jello by No Free Lunch theorems co-founder, David Wolpert

- The Evolution List - Genetic ID and the Explanatory Filter by Allen MacNeill.

- Design Inference Website - The writing of William A. Dembski

- Committee for Skeptical Inquiry - Reality Check, The Emperor's New Designer Clothes - Victor J. Stenger

- Darwin@Home Web site - open-source software that demonstrates evolution in artificial life, written by Gerald de Jong