N-body simulation

Encyclopedia

.jpg)

Dynamical system

A dynamical system is a concept in mathematics where a fixed rule describes the time dependence of a point in a geometrical space. Examples include the mathematical models that describe the swinging of a clock pendulum, the flow of water in a pipe, and the number of fish each springtime in a...

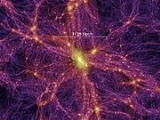

of particles, usually under the influence of physical forces, such as gravity (see n-body problem

N-body problem

The n-body problem is the problem of predicting the motion of a group of celestial objects that interact with each other gravitationally. Solving this problem has been motivated by the need to understand the motion of the Sun, planets and the visible stars...

). In cosmology, they are used to study processes of non-linear structure formation

Structure formation

Structure formation refers to a fundamental problem in physical cosmology. The universe, as is now known from observations of the cosmic microwave background radiation, began in a hot, dense, nearly uniform state approximately 13.7 Gyr ago...

such as the process of forming galaxy filament

Galaxy filament

In physical cosmology, galaxy filaments, also called supercluster complexes or great walls, are, so far, the largest known cosmic structures in the universe. They are massive, thread-like structures with a typical length of 50 to 80 megaparsecs h-1 that form the boundaries between large voids in...

s and galaxy halos from dark matter

Dark matter

In astronomy and cosmology, dark matter is matter that neither emits nor scatters light or other electromagnetic radiation, and so cannot be directly detected via optical or radio astronomy...

in physical cosmology

Physical cosmology

Physical cosmology, as a branch of astronomy, is the study of the largest-scale structures and dynamics of the universe and is concerned with fundamental questions about its formation and evolution. For most of human history, it was a branch of metaphysics and religion...

. Direct N-body simulations are used to study the dynamical evolution of star clusters.

Nature of the particles

The 'particles' treated by the simulation may or may not correspond to physical objects which are particulate in nature. For example, an N-body simulation of a star cluster might have a particle per star, so each particle has some physical significance. On the other hand, a simulation of a gas cloud cannot afford to have a particle for each atom or molecule of gas, as this would require on the order of $10^{23}$ particles for each gram of material (see Avogadro constant), so a single 'particle' would represent some much larger quantity of gas. This quantity need not have any physical significance, but must be chosen as a compromise between accuracy and manageable computer requirements.

Direct gravitational N-body simulations

In direct gravitational N-body simulations, the equations of motion of a system of N particles under the influence of their mutual gravitational forces are integrated numerically without any simplifying approximations. The first direct N-body simulations were carried out by Sebastian von Hoerner at the Astronomisches Rechen-Institut in Heidelberg, Germany. Sverre Aarseth at the University of Cambridge (UK) has dedicated his entire scientific life to the development of a series of highly efficient N-body codes for astrophysical applications, which use adaptive (hierarchical) time steps, an Ahmad-Cohen neighbour scheme and regularization of close encounters. Regularization is a mathematical technique that removes the singularity in the Newtonian law of gravitation for two particles that approach each other arbitrarily closely. Aarseth's codes are used to study the dynamics of star clusters, planetary systems and galactic nuclei.
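As a minimal sketch of direct summation, the following pure-Python example evaluates every pairwise Newtonian force and advances the system with a kick-drift-kick leapfrog step. The leapfrog integrator, the code units (G = 1) and the function names are assumptions chosen for brevity; this is not a reproduction of Aarseth's codes, and it contains no regularization, neighbour scheme or adaptive time steps.

```python
import math

G = 1.0  # gravitational constant in assumed code units


def accelerations(pos, mass):
    """Direct O(N^2) summation of Newtonian accelerations over all pairs."""
    n = len(pos)
    acc = [[0.0, 0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            d = [pos[j][k] - pos[i][k] for k in range(3)]
            r2 = d[0] ** 2 + d[1] ** 2 + d[2] ** 2
            inv_r3 = 1.0 / (r2 * math.sqrt(r2))  # diverges as r -> 0 (no softening)
            for k in range(3):
                acc[i][k] += G * mass[j] * d[k] * inv_r3
                acc[j][k] -= G * mass[i] * d[k] * inv_r3
    return acc


def leapfrog_step(pos, vel, mass, dt):
    """One kick-drift-kick step; returns new positions and velocities."""
    acc = accelerations(pos, mass)
    vel_half = [[v[k] + 0.5 * dt * a[k] for k in range(3)]
                for v, a in zip(vel, acc)]
    new_pos = [[p[k] + dt * vh[k] for k in range(3)]
               for p, vh in zip(pos, vel_half)]
    new_acc = accelerations(new_pos, mass)
    new_vel = [[vh[k] + 0.5 * dt * a[k] for k in range(3)]
               for vh, a in zip(vel_half, new_acc)]
    return new_pos, new_vel


def total_energy(pos, vel, mass):
    """Kinetic plus pairwise potential energy, useful as a conservation check."""
    kinetic = sum(0.5 * m * sum(c * c for c in v) for m, v in zip(mass, vel))
    potential = 0.0
    for i in range(len(pos)):
        for j in range(i + 1, len(pos)):
            potential -= G * mass[i] * mass[j] / math.dist(pos[i], pos[j])
    return kinetic + potential
```

Monitoring `total_energy` over many calls to `leapfrog_step` is a common sanity check: for a bound two-body orbit the energy should stay close to its initial value when the step is small compared with the orbital period.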

General relativity simulations

Many simulations are large enough that the effects of general relativity in establishing a Friedmann-Lemaître-Robertson-Walker cosmology are significant. This is incorporated in the simulation as an evolving measure of distance (or scale factor) in a comoving coordinate system, which causes the particles to slow in comoving coordinates (as well as due to the redshifting of their physical energy). However, the contributions of general relativity and the finite speed of gravity can otherwise be ignored, as typical dynamical timescales are long compared to the light crossing time for the simulation, and the space-time curvature induced by the particles and the particle velocities are small. The boundary conditions of these cosmological simulations are usually periodic (or toroidal), so that one edge of the simulation volume matches up with the opposite edge.
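Periodic boundaries can be illustrated with two small helpers, shown here for a single coordinate axis. The function names and the cubic box of side `box` are assumptions for illustration; production codes typically also account for the forces from periodic images (for example with Ewald summation), which this sketch does not attempt.

```python
def wrap(x, box):
    """Map a coordinate back into the periodic box of side `box`."""
    return x % box


def minimum_image(dx, box):
    """Shortest separation along one axis between two points in a periodic box."""
    return dx - box * round(dx / box)
```

With these helpers, a particle leaving through one face re-enters through the opposite face, and two particles near opposite faces are treated as close neighbours.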

Calculation optimizations

N-body simulations are simple in principle, because they merely involve integrating the 6N ordinary differential equations defining the particle motions in Newtonian gravity. In practice, the number N of particles involved is usually very large (typical simulations include many millions; the Millennium simulation includes ten billion) and the number of particle-particle interactions that must be computed increases as $N^2$, so ordinary methods of integrating numerical differential equations, such as the Runge-Kutta method, are inadequate. Therefore, a number of refinements are commonly used.

One of the simplest refinements is that each particle carries with it its own timestep variable, so that particles with widely different dynamical times don't all have to be evolved forward at the rate of that with the shortest time.
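One common way to organise per-particle timesteps is a power-of-two hierarchy, in which every particle's step is the largest step divided by some power of two. The sketch below assumes that scheme; the function names, and the idea of counting "ticks" of the finest step, are illustrative choices rather than a description of any particular code.

```python
import math


def block_timestep(dt_wanted, dt_max):
    """Largest step of the form dt_max / 2**k that does not exceed dt_wanted."""
    if dt_wanted >= dt_max:
        return dt_max
    k = math.ceil(math.log2(dt_max / dt_wanted))
    return dt_max / 2 ** k


def due_particles(tick, levels):
    """Indices of particles to advance at this tick of the finest clock.

    levels[i] is the hierarchy level k of particle i (dt_i = dt_max / 2**k).
    One tick corresponds to the smallest step currently in use.
    """
    kmax = max(levels)
    return [i for i, k in enumerate(levels) if tick % 2 ** (kmax - k) == 0]
```

Because all steps are commensurate, particles on coarse levels are only updated every few ticks, while particles with short dynamical times are updated on every tick.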

There are two basic algorithms by which the simulation may be optimised.

Tree methods

In tree methods such as a Barnes–Hut simulation, the volume is usually divided up into cubic cells in an octree, so that only particles from nearby cells need to be treated individually, and particles in distant cells can be treated as a single large particle centered at its center of mass (or as a low-order multipole expansion). This can dramatically reduce the number of particle pair interactions that must be computed. To prevent the simulation from becoming swamped by computing particle-particle interactions, the cells must be refined to smaller cells in denser parts of the simulation which contain many particles per cell. For simulations where particles are not evenly distributed, the well-separated pair decomposition methods of Callahan and Kosaraju yield optimal O(n log n) time per iteration with fixed dimension.
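The tree idea can be sketched as a small octree in which each cell stores its total mass and center of mass, and a distant cell is accepted as a single particle when its width divided by its distance is below an opening parameter. The class name `Node`, the parameter `theta` and the monopole-only approximation are assumptions of this sketch; it also assumes positive masses, distinct particle positions and particles lying inside the root cube.

```python
import math


class Node:
    """Cubic octree cell with centre c and half-width h."""

    def __init__(self, c, h):
        self.c, self.h = c, h
        self.mass = 0.0
        self.com = [0.0, 0.0, 0.0]  # centre of mass of everything in the cell
        self.body = None            # (position, mass) if a leaf holding one particle
        self.children = None        # list of 8 sub-cells once subdivided

    def _child_for(self, p):
        # Bit k of the index is set when p lies on the upper side along axis k.
        return self.children[sum((p[k] >= self.c[k]) << k for k in range(3))]

    def insert(self, p, m):
        if self.body is None and self.children is None:
            self.body = (p, m)                    # empty leaf: store the particle
        else:
            if self.children is None:             # occupied leaf: subdivide
                q = self.h / 2
                self.children = [
                    Node([self.c[k] + (q if (i >> k) & 1 else -q)
                          for k in range(3)], q)
                    for i in range(8)]
                old_p, old_m = self.body
                self.body = None
                self._child_for(old_p).insert(old_p, old_m)
            self._child_for(p).insert(p, m)
        total = self.mass + m                     # update this cell's monopole
        self.com = [(self.com[k] * self.mass + p[k] * m) / total
                    for k in range(3)]
        self.mass = total


def acceleration(node, p, theta=0.5, G=1.0):
    """Acceleration at p; cells with width / distance < theta are not opened."""
    if node.mass == 0.0:
        return [0.0, 0.0, 0.0]
    d = [node.com[k] - p[k] for k in range(3)]
    r = math.sqrt(sum(x * x for x in d))
    if node.children is None or 2 * node.h < theta * r:
        if r == 0.0:                              # the particle itself: no self-force
            return [0.0, 0.0, 0.0]
        f = G * node.mass / r ** 3
        return [f * x for x in d]
    acc = [0.0, 0.0, 0.0]
    for child in node.children:                   # cell too close: open it
        a = acceleration(child, p, theta, G)
        acc = [acc[k] + a[k] for k in range(3)]
    return acc
```

Setting `theta=0` opens every cell and reproduces direct summation, while larger values trade accuracy for fewer interactions. Because subdivision only happens where particles share a cell, the tree is automatically finer in denser regions.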

Particle mesh method

Another possibility is the particle mesh method, in which space is discretised on a mesh and, for the purposes of computing the gravitational potential, particles are assumed to be divided between the nearby vertices of the mesh. Finding the potential Φ is easy, because the Poisson equation

$$\nabla^2 \Phi = 4 \pi G \rho,$$

where G is Newton's constant and ρ is the density (number of particles at the mesh points), is trivial to solve by using the fast Fourier transform to go to the frequency domain, where the Poisson equation has the simple form

$$\hat{\Phi} = -4 \pi G \frac{\hat{\rho}}{k^2},$$

where $\vec{k}$ is the comoving wavenumber and the hats denote Fourier transforms. Since $\vec{g} = -\nabla \Phi$, the gravitational field can now be found by multiplying by $-i\vec{k}$ and computing the inverse Fourier transform (or computing the inverse transform and then using some other method). Since this method is limited by the mesh size, in practice a finer mesh or some other technique (such as combining with a tree or simple particle-particle algorithm) is used to compute the small-scale forces. Sometimes an adaptive mesh is used, in which the mesh cells are much smaller in the denser regions of the simulation.
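A one-dimensional sketch of the mesh procedure follows: mass is shared between neighbouring mesh points, the density is transformed, each Fourier mode is multiplied by -4πG/k² to obtain the potential and then by -ik to obtain the field, and the result is transformed back. The linear (cloud-in-cell) mass assignment, the hand-written radix-2 FFT (used only to avoid external dependencies), the zeroing of the mean-density mode and all function names are assumptions of this illustration.

```python
import cmath
import math


def fft(a, inverse=False):
    """Recursive radix-2 FFT; len(a) must be a power of two. Inverse is unnormalised."""
    n = len(a)
    if n == 1:
        return list(a)
    even = fft(a[0::2], inverse)
    odd = fft(a[1::2], inverse)
    sign = 1 if inverse else -1
    out = [0j] * n
    for m in range(n // 2):
        w = cmath.exp(sign * 2j * math.pi * m / n) * odd[m]
        out[m] = even[m] + w
        out[m + n // 2] = even[m] - w
    return out


def deposit_cic(positions, masses, n, box):
    """Share each particle's mass linearly between its two neighbouring mesh points."""
    dx = box / n
    rho = [0.0] * n
    for x, m in zip(positions, masses):
        s = (x % box) / dx
        i = math.floor(s)
        frac = s - i
        rho[i % n] += m * (1.0 - frac) / dx
        rho[(i + 1) % n] += m * frac / dx
    return rho


def solve_poisson_1d(rho, box, G=1.0):
    """Return (phi, g) on a periodic mesh, with the mean density removed."""
    n = len(rho)
    rho_hat = fft([complex(r) for r in rho])
    phi_hat = [0j] * n           # the k = 0 mode stays zero (mean density dropped)
    g_hat = [0j] * n
    for m in range(1, n):
        mm = m if m <= n // 2 else m - n          # signed mode number
        k = 2 * math.pi * mm / box
        phi_hat[m] = -4 * math.pi * G * rho_hat[m] / k ** 2
        g_hat[m] = -1j * k * phi_hat[m]           # g = -d(phi)/dx in Fourier space
    phi = [z.real / n for z in fft(phi_hat, inverse=True)]
    g = [z.real / n for z in fft(g_hat, inverse=True)]
    return phi, g
```

The cost is dominated by the transforms, which scale as n log n in the number of mesh points, independent of how many particles were deposited.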

Two-particle systems

Although there are millions or billions of particles in typical simulations, each typically represents a very large mass, of order $10^9$ solar masses. This can introduce problems with short-range interactions between the particles, such as the formation of two-particle binary systems. As the particles are meant to represent large numbers of dark matter particles or groups of stars, these binaries are unphysical. To prevent this, a softened Newtonian force law is used, which does not diverge as the inverse-square radius at short distances. Most simulations implement this quite naturally by running the simulations on cells of finite size. It is important to implement the discretization procedure in such a way that particles always exert a vanishing force on themselves.
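One widely used softened force law is Plummer softening, in which the separation r is replaced by the square root of r² + ε². The text does not say which softening form a given simulation uses, so the Plummer form, the softening length `eps` and the function name below are assumptions for illustration.

```python
def pair_acceleration(r, m, eps=0.0, G=1.0):
    """Acceleration magnitude at separation r from mass m, Plummer-softened.

    With eps = 0 this is the Newtonian G*m/r**2 (and fails at r = 0);
    with eps > 0 it stays finite and goes to zero as r -> 0.
    """
    return G * m * r / (r * r + eps * eps) ** 1.5
```

At separations much larger than `eps` the result approaches the Newtonian value, while at separations much smaller than `eps` the force falls linearly to zero, which suppresses the formation of tight, unphysical binaries.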

Incorporating baryons, leptons and photons into simulations

Many simulations simulate only cold dark matter, and thus include only the gravitational force. Incorporating baryons, leptons and photons into the simulations dramatically increases their complexity, and often radical simplifications of the underlying physics must be made. However, this is an extremely important area, and many modern simulations are now trying to understand processes that occur during galaxy formation which could account for galaxy bias.

See also

- Millennium Run

- Structure formation

- Large-scale structure of the cosmos

- GADGET

- Galaxy formation and evolution

- n-body problem

- Natural units

- Virgo Consortium