Matrix (mathematics)

Encyclopedia

Mathematics

Mathematics is the study of quantity, space, structure, and change. Mathematicians seek out patterns and formulate new conjectures. Mathematicians resolve the truth or falsity of conjectures by mathematical proofs, which are arguments sufficient to convince other mathematicians of their validity...

, a matrix (plural matrices, or less commonly matrixes) is a rectangular array of numbers, symbols, or expressions. The individual items in a matrix are called its elements or entries. An example of a matrix with six elements is

Matrices of the same size can be added

Matrix addition

In mathematics, matrix addition is the operation of adding two matrices by adding the corresponding entries together. However, there are other operations which could also be considered as a kind of addition for matrices, the direct sum and the Kronecker sum....

or subtracted element by element. The rule for matrix multiplication

Matrix multiplication

In mathematics, matrix multiplication is a binary operation that takes a pair of matrices, and produces another matrix. If A is an n-by-m matrix and B is an m-by-p matrix, the result AB of their multiplication is an n-by-p matrix defined only if the number of columns m of the left matrix A is the...

is more complicated, and two matrices can be multiplied only when the number of columns in the first equals the number of rows in the second. A major application of matrices is to represent linear transformation

Linear transformation

In mathematics, a linear map, linear mapping, linear transformation, or linear operator is a function between two vector spaces that preserves the operations of vector addition and scalar multiplication. As a result, it always maps straight lines to straight lines or 0...

s, that is, generalizations of linear functions such as . For example, the rotation

Rotation (mathematics)

In geometry and linear algebra, a rotation is a transformation in a plane or in space that describes the motion of a rigid body around a fixed point. A rotation is different from a translation, which has no fixed points, and from a reflection, which "flips" the bodies it is transforming...

of vectors in three dimensional space is a linear transformation. If R is a rotation matrix and v is a column vector (a matrix with only one column) describing the position of a point in space, the product Rv is a column vector describing the position of that point after a rotation. The product of two matrices is a matrix that represents the composition

Function composition

In mathematics, function composition is the application of one function to the results of another. For instance, the functions and can be composed by computing the output of g when it has an argument of f instead of x...

of two linear transformations. Another application of matrices is in the solution of a system of linear equations. If the matrix is square, it is possible to deduce some of its properties by computing its determinant

Determinant

In linear algebra, the determinant is a value associated with a square matrix. It can be computed from the entries of the matrix by a specific arithmetic expression, while other ways to determine its value exist as well...

. For example, a square matrix has an inverse if and only if its determinant is not zero. Eigenvalues and eigenvectors provide insight into the geometry of linear transformations.

Matrices find applications in most scientific fields. In physics

Physics

Physics is a natural science that involves the study of matter and its motion through spacetime, along with related concepts such as energy and force. More broadly, it is the general analysis of nature, conducted in order to understand how the universe behaves.Physics is one of the oldest academic...

, matrices are used to study electrical circuits, optics, and quantum mechanics

Quantum mechanics

Quantum mechanics, also known as quantum physics or quantum theory, is a branch of physics providing a mathematical description of much of the dual particle-like and wave-like behavior and interactions of energy and matter. It departs from classical mechanics primarily at the atomic and subatomic...

. In computer graphics

Computer graphics

Computer graphics are graphics created using computers and, more generally, the representation and manipulation of image data by a computer with help from specialized software and hardware....

, matrices are used to project a 3-dimensional image onto a 2-dimensional screen, and to create realistic-seeming motion. Matrix calculus

Matrix calculus

In mathematics, matrix calculus is a specialized notation for doing multivariable calculus, especially over spaces of matrices, where it defines the matrix derivative. This notation was to describe systems of differential equations, and taking derivatives of matrix-valued functions with respect...

generalizes classical analytical

Mathematical analysis

Mathematical analysis, which mathematicians refer to simply as analysis, has its beginnings in the rigorous formulation of infinitesimal calculus. It is a branch of pure mathematics that includes the theories of differentiation, integration and measure, limits, infinite series, and analytic functions...

notions such as derivative

Derivative

In calculus, a branch of mathematics, the derivative is a measure of how a function changes as its input changes. Loosely speaking, a derivative can be thought of as how much one quantity is changing in response to changes in some other quantity; for example, the derivative of the position of a...

s and exponentials to higher dimensions.

A major branch of numerical analysis

Numerical analysis

Numerical analysis is the study of algorithms that use numerical approximation for the problems of mathematical analysis ....

is devoted to the development of efficient algorithms for matrix computations, a subject that is centuries old and is today an expanding area of research. Matrix decomposition methods simplify computations, both theoretically and practically. Algorithms that are tailored to the structure of particular matrix structures, e.g. sparse matrices

Sparse matrix

In the subfield of numerical analysis, a sparse matrix is a matrix populated primarily with zeros . The term itself was coined by Harry M. Markowitz....

and near-diagonal matrices

Diagonal matrix

In linear algebra, a diagonal matrix is a matrix in which the entries outside the main diagonal are all zero. The diagonal entries themselves may or may not be zero...

, expedite computations in finite element method

Finite element method

The finite element method is a numerical technique for finding approximate solutions of partial differential equations as well as integral equations...

and other computations. Infinite matrices occur in planetary theory and in atomic theory. A simple example is the matrix representing the derivative operator, which acts on the Taylor series

Taylor series

In mathematics, a Taylor series is a representation of a function as an infinite sum of terms that are calculated from the values of the function's derivatives at a single point....

of a function.

Definition

A matrix is a rectangularRectangle

In Euclidean plane geometry, a rectangle is any quadrilateral with four right angles. The term "oblong" is occasionally used to refer to a non-square rectangle...

arrangement of mathematical expressions that can be simply number

Number

A number is a mathematical object used to count and measure. In mathematics, the definition of number has been extended over the years to include such numbers as zero, negative numbers, rational numbers, irrational numbers, and complex numbers....

s. For example,

-

An alternative notation uses large parentheses instead of box brackets:

-

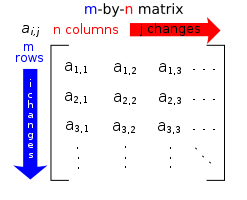

The horizontal and vertical lines in a matrix are called rows and columns, respectively. The numbers in the matrix are called its entries or its elements. To specify the size of a matrix, a matrix with m rows and n columns is called an m-by-n matrix or m × n matrix, while m and n are called its dimensions. The above is a 4-by-3 matrix.

A matrix with one row (a 1 × n matrix) is called a row vector, and a matrix with one column (an m × 1 matrix) is called a column vector. Any row or column of a matrix determines a row or column vector, obtained by removing all other rows or columns respectively from the matrix. For example, the row vector for the third row of the above matrix A is

When a row or column of a matrix is interpreted as a value, this refers to the corresponding row or column vector. For instance one may say that two different rows of a matrix are equal, meaning they determine the same row vector. In some cases the value of a row or column should be interpreted just as a sequence of values (an element of Rn if entries are real numbers) rather than as a matrix, for instance when saying that the rows of a matrix are equal to the corresponding columns of its transposeTransposeIn linear algebra, the transpose of a matrix A is another matrix AT created by any one of the following equivalent actions:...

matrix.

Most of this article focuses on real and complex matrices, i.e., matrices whose elements are real or complex, respectively. More general types of entries are discussed below.

Notation

The specifics of matrices notation varies widely, with some prevailing trends. Matrices are usually denoted using upper-case letters, while the corresponding lower-case letters, with two subscript indices, represent the entries. In addition to using upper-case letters to symbolize matrices, many authors use a special typographical styleEmphasis (typography)In typography, emphasis is the exaggeration of words in a text with a font in a different style from the rest of the text—to emphasize them.- Methods and use :...

, commonly boldface upright (non-italic), to further distinguish matrices from other mathematical objects. An alternative notation involves the use of a double-underline with the variable name, with or without boldface style, (e.g., ).

).

The entry in the i-th row and the j-th column of a matrix is typically referred to as the i,j, (i,j), or (i,j)th entry of the matrix. For example, the (2,3) entry of the above matrix A is 7. The (i, j)th entry of a matrix A is most commonly written as ai,j. Alternative notations for that entry are A[i,j] or Ai,j.

Sometimes a matrix is referred to by giving a formula for its (i,j)th entry, often with double parenthesis around the formula for the entry, for example, if the (i,j)th entry of A were given by aij, A would be denoted ((aij)).

An asterisk is commonly used to refer to whole rows or columns in a matrix. For example, ai,∗ refers to the ith row of A, and a∗,j refers to the jth column of A. The set of all m-by-n matrices is denoted (m, n).

(m, n).

A common shorthand is- A = [ai,j]i = 1,...,m; j = 1,...,n or more briefly A = [ai,j]m×n

to define an m × n matrix A. Usually the entries ai,j are defined separately for all integers and . They can however sometimes be given by one formula; for example the 3-by-4 matrix

can alternatively be specified by A = [i − j]i = 1,2,3; j = 1,...,4, or simply A = ((i-j)), where the size of the matrix is understood.

Some programming languages start the numbering of rows and columns at zero, in which case the entries of an m-by-n matrix are indexed by and . This article follows the more common convention in mathematical writing where enumeration starts from 1.

Basic operations

There are a number of operations that can be applied to modify matrices called matrix addition, scalar multiplication and transposition. These form the basic techniques to deal with matrices.Operation Definition Example Addition The sum A+B of two m-by-n matrices A and B is calculated entrywise:i,j = Ai,j + Bi,j, where 1 ≤ i ≤ m and 1 ≤ j ≤ n.

Scalar multiplication The scalar multiplication cA of a matrix A and a number c (also called a scalar Scalar (mathematics)In linear algebra, real numbers are called scalars and relate to vectors in a vector space through the operation of scalar multiplication, in which a vector can be multiplied by a number to produce another vector....

in the parlance of abstract algebraAbstract algebraAbstract algebra is the subject area of mathematics that studies algebraic structures, such as groups, rings, fields, modules, vector spaces, and algebras...

) is given by multiplying every entry of A by c:- (c

Transpose The transpose of an m-by-n matrix A is the n-by-m matrix AT (also denoted Atr or tA) formed by turning rows into columns and vice versa: - (AT)i,j = Aj,i.

Familiar properties of numbers extend to these operations of matrices: for example, addition is commutative, i.e., the matrix sum does not depend on the order of the summands: A + B = B + A.

The transpose is compatible with addition and scalar multiplication, as expressed by (cA)T = c(AT) and (A + B)T = AT + BT. Finally, (AT)T = A.

Row operations are ways to change matrices. There are three types of row operations: row switching, that is interchanging two rows of a matrix; row multiplication, multiplying all entries of a row by a non-zero constant; and finally row addition, which means adding a multiple of a row to another row. These row operations are used in a number of ways including solving linear equations and finding inverses.

Matrix multiplication, linear equations and linear transformations

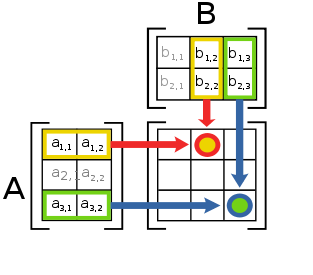

Multiplication of two matrices is defined only if the number of columns of the left matrix is the same as the number of rows of the right matrix. If A is an m-by-n matrix and B is an n-by-p matrix, then their matrix product AB is the m-by-p matrix whose entries are given by dot product Dot productIn mathematics, the dot product or scalar product is an algebraic operation that takes two equal-length sequences of numbers and returns a single number obtained by multiplying corresponding entries and then summing those products...

Dot productIn mathematics, the dot product or scalar product is an algebraic operation that takes two equal-length sequences of numbers and returns a single number obtained by multiplying corresponding entries and then summing those products...

of the corresponding row of A and the corresponding column of B:

where 1 ≤ i ≤ m and 1 ≤ j ≤ p. For example, the underlined entry 1 in the product is calculated as

Matrix multiplication satisfies the rules (AB)C = A(BC) (associativityAssociativityIn mathematics, associativity is a property of some binary operations. It means that, within an expression containing two or more occurrences in a row of the same associative operator, the order in which the operations are performed does not matter as long as the sequence of the operands is not...

), and (A+B)C = AC+BC as well as C(A+B) = CA+CB (left and right distributivityDistributivityIn mathematics, and in particular in abstract algebra, distributivity is a property of binary operations that generalizes the distributive law from elementary algebra.For example:...

), whenever the size of the matrices is such that the various products are defined. The product AB may be defined without BA being defined, namely if A and B are m-by-n and n-by-k matrices, respectively, and Even if both products are defined, they need not be equal, i.e., generally one has

i.e., matrix multiplication is not commutativeCommutativityIn mathematics an operation is commutative if changing the order of the operands does not change the end result. It is a fundamental property of many binary operations, and many mathematical proofs depend on it...

, in marked contrast to (rational, real, or complex) numbers whose product is independent of the order of the factors. An example of two matrices not commuting with each other is:

whereas

The identity matrixIdentity matrixIn linear algebra, the identity matrix or unit matrix of size n is the n×n square matrix with ones on the main diagonal and zeros elsewhere. It is denoted by In, or simply by I if the size is immaterial or can be trivially determined by the context...

In of size n is the n-by-n matrix in which all the elements on the main diagonal are equal to 1 and all other elements are equal to 0, e.g.-

It is called identity matrix because multiplication with it leaves a matrix unchanged:

Besides the ordinary matrix multiplication just described, there exist other less frequently used operations on matrices that can be considered forms of multiplication, such as the Hadamard product and the Kronecker productKronecker productIn mathematics, the Kronecker product, denoted by ⊗, is an operation on two matrices of arbitrary size resulting in a block matrix. It gives the matrix of the tensor product with respect to a standard choice of basis. The Kronecker product should not be confused with the usual matrix...

. They arise in solving matrix equations such as the Sylvester equationSylvester equationThe Sylvester equation, commonly encountered in control theory, is the matrix equation of the formA X + X B = C,where A,B,X,C are n \times n matrices. A,B,C are known...

.

Linear equations

A particular case of matrix multiplication is tightly linked to linear equations: if x designates a column vector (i.e., n×1-matrix) of n variables x1, x2, ..., xn, and A is an m-by-n matrix, then the matrix equation -

where b is some m×1-column vector, is equivalent to the system of linear equations

- A1,1x1 + A1,2x2 + ... + A1,nxn = b1

- ...

- Am,1x1 + Am,2x2 + ... + Am,nxn = bm .

This way, matrices can be used to compactly write and deal with multiple linear equations, i.e., systems of linear equations.

Linear transformations

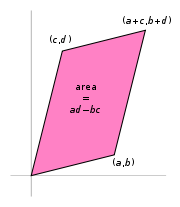

For example, the 2×2 matrix

can be viewed as the transform of the unit square

Unit square

In mathematics, a unit square is a square whose sides have length 1. Often, "the" unit square refers specifically to the square in the Cartesian plane with corners at , , , and .-In the real plane:...

into a parallelogram

Parallelogram

In Euclidean geometry, a parallelogram is a convex quadrilateral with two pairs of parallel sides. The opposite or facing sides of a parallelogram are of equal length and the opposite angles of a parallelogram are of equal measure...

with vertices at , , , and . The parallelogram pictured at the right is obtained by multiplying A with each of the column vectors

and

and  in turn. These vectors define the vertices of the unit square.

in turn. These vectors define the vertices of the unit square.The following table shows a number of 2-by-2 matrices with the associated linear maps of R2. The blue original is mapped to the green grid and shapes, the origin (0,0) is marked with a black point.

| Horizontal shear with m=1.25. | Horizontal flip | Squeeze mapping Squeeze mapping In linear algebra, a squeeze mapping is a type of linear map that preserves Euclidean area of regions in the Cartesian plane, but is not a Euclidean motion.For a fixed positive real number r, the mapping →... with r=3/2 |

Scaling Scaling (geometry) In Euclidean geometry, uniform scaling is a linear transformation that enlarges or shrinks objects by a scale factor that is the same in all directions. The result of uniform scaling is similar to the original... by a factor of 3/2 |

Rotation by π/6R = 30° |

|

|

|

|

|

Under the 1-to-1 correspondence

Bijection

A bijection is a function giving an exact pairing of the elements of two sets. A bijection from the set X to the set Y has an inverse function from Y to X. If X and Y are finite sets, then the existence of a bijection means they have the same number of elements...

between matrices and linear maps, matrix multiplication corresponds to composition

Function composition

In mathematics, function composition is the application of one function to the results of another. For instance, the functions and can be composed by computing the output of g when it has an argument of f instead of x...

of maps: if a k-by-m matrix B represents another linear map g : Rm → Rk, then the composition is represented by BA since(x) = g(f(x)) = g(Ax) = B(Ax) = (BA)x.

The last equality follows from the above-mentioned associativity of matrix multiplication.

The rank of a matrix A is the maximum number of linearly independent

Linear independence

In linear algebra, a family of vectors is linearly independent if none of them can be written as a linear combination of finitely many other vectors in the collection. A family of vectors which is not linearly independent is called linearly dependent...

row vectors of the matrix, which is the same as the maximum number of linearly independent column vectors. Equivalently it is the dimension of the image

Image (mathematics)

In mathematics, an image is the subset of a function's codomain which is the output of the function on a subset of its domain. Precisely, evaluating the function at each element of a subset X of the domain produces a set called the image of X under or through the function...

of the linear map represented by A. The rank-nullity theorem

Rank-nullity theorem

In mathematics, the rank–nullity theorem of linear algebra, in its simplest form, states that the rank and the nullity of a matrix add up to the number of columns of the matrix. Specifically, if A is an m-by-n matrix over some field, thenThis applies to linear maps as well...

states that the dimension of the kernel of a matrix plus the rank equals the number of columns of the matrix.

Square matrices

A square matrix is a matrix with the same number of rows and columns. An n-by-n matrix is known as a square matrix of order n. Any two square matrices of the same order can be added and multiplied. A square matrix A is called invertible or non-singular if there exists a matrix B such thatThis is equivalent to BA = In. Moreover, if B exists, it is unique and is called the inverse matrix of A, denoted A−1.

The entries Ai,i form the main diagonal of a matrix. The trace, tr(A) of a square matrix A is the sum of its diagonal entries. While, as mentioned above, matrix multiplication is not commutative, the trace of the product of two matrices is independent of the order of the factors: tr(AB) = tr(BA).

Also, the trace of a matrix is equal to that of its transpose, i.e., tr(A) = tr(AT).

If all entries outside the main diagonal are zero, A is called a diagonal matrix

Diagonal matrix

In linear algebra, a diagonal matrix is a matrix in which the entries outside the main diagonal are all zero. The diagonal entries themselves may or may not be zero...

. If only all entries above (below) the main diagonal are zero, A is called a lower triangular matrix

Triangular matrix

In the mathematical discipline of linear algebra, a triangular matrix is a special kind of square matrix where either all the entries below or all the entries above the main diagonal are zero...

(upper triangular matrix, respectively). For example, if n = 3, they look like

(diagonal),

(diagonal),  (lower) and

(lower) and  (upper triangular matrix).

(upper triangular matrix).Determinant

The determinant det(A) or |A| of a square matrix A is a number encoding certain properties of the matrix. A matrix is invertible if and only ifIf and only if

In logic and related fields such as mathematics and philosophy, if and only if is a biconditional logical connective between statements....

its determinant is nonzero. Its absolute value

Absolute value

In mathematics, the absolute value |a| of a real number a is the numerical value of a without regard to its sign. So, for example, the absolute value of 3 is 3, and the absolute value of -3 is also 3...

equals the area (in R2) or volume (in R3) of the image of the unit square (or cube), while its sign corresponds to the orientation of the corresponding linear map: the determinant is positive if and only if the orientation is preserved.

The determinant of 2-by-2 matrices is given by

When the determinant is equal to one, then the matrix represents an equi-areal mapping. The determinant of 3-by-3 matrices involves 6 terms (rule of Sarrus

Rule of Sarrus

Sarrus' rule or Sarrus' scheme is a method and a memorization scheme to compute the determinant of a 3×3 matrix. It is named after the French mathematician Pierre Frédéric Sarrus....

). The more lengthy Leibniz formula generalises these two formulae to all dimensions.

The determinant of a product of square matrices equals the product of their determinants: det(AB) = det(A) · det(B). Adding a multiple of any row to another row, or a multiple of any column to another column, does not change the determinant. Interchanging two rows or two columns affects the determinant by multiplying it by −1. Using these operations, any matrix can be transformed to a lower (or upper) triangular matrix, and for such matrices the determinant equals the product of the entries on the main diagonal; this provides a method to calculate the determinant of any matrix. Finally, the Laplace expansion

Laplace expansion

In linear algebra, the Laplace expansion, named after Pierre-Simon Laplace, also called cofactor expansion, is an expression for the determinant |B| of...

expresses the determinant in terms of minors

Minor (linear algebra)

In linear algebra, a minor of a matrix A is the determinant of some smaller square matrix, cut down from A by removing one or more of its rows or columns...

, i.e., determinants of smaller matrices. This expansion can be used for a recursive definition of determinants (taking as starting case the determinant of a 1-by-1 matrix, which is its unique entry, or even the determinant of a 0-by-0 matrix, which is 1), that can be seen to be equivalent to the Leibniz formula. Determinants can be used to solve linear system

Linear system

A linear system is a mathematical model of a system based on the use of a linear operator.Linear systems typically exhibit features and properties that are much simpler than the general, nonlinear case....

s using Cramer's rule

Cramer's rule

In linear algebra, Cramer's rule is a theorem, which gives an expression for the solution of a system of linear equations with as many equations as unknowns, valid in those cases where there is a unique solution...

, where the division of the determinants of two related square matrices equates to the value of each of the system's variables.

Eigenvalues and eigenvectors

A number λ and a non-zero vector v satisfyingare called an eigenvalue and an eigenvector of A, respectively.Eigen means "own" in German

German language

German is a West Germanic language, related to and classified alongside English and Dutch. With an estimated 90 – 98 million native speakers, German is one of the world's major languages and is the most widely-spoken first language in the European Union....

and in Dutch

Dutch language

Dutch is a West Germanic language and the native language of the majority of the population of the Netherlands, Belgium, and Suriname, the three member states of the Dutch Language Union. Most speakers live in the European Union, where it is a first language for about 23 million and a second...

. The number λ is an eigenvalue of an n×n-matrix A if and only if A−λIn is not invertible, which is equivalent

Logical equivalence

In logic, statements p and q are logically equivalent if they have the same logical content.Syntactically, p and q are equivalent if each can be proved from the other...

to

The function pA(t) = det(A−tI) is called the characteristic polynomial

Characteristic polynomial

In linear algebra, one associates a polynomial to every square matrix: its characteristic polynomial. This polynomial encodes several important properties of the matrix, most notably its eigenvalues, its determinant and its trace....

of A, its degree

Degree of a polynomial

The degree of a polynomial represents the highest degree of a polynominal's terms , should the polynomial be expressed in canonical form . The degree of an individual term is the sum of the exponents acting on the term's variables...

is n. Therefore pA(t) has at most n different roots, i.e., eigenvalues of the matrix. They may be complex even if the entries of A are real. According to the Cayley–Hamilton theorem

Cayley–Hamilton theorem

In linear algebra, the Cayley–Hamilton theorem states that every square matrix over a commutative ring satisfies its own characteristic equation....

, pA(A) = 0, that is to say, the characteristic polynomial applied to the matrix itself yields the zero matrix.

Symmetry

A square matrix A that is equal to its transpose, i.e., A = AT, is a symmetric matrix. If instead, A was equal to the negative of its transpose, i.e., A = −AT, then A is a skew-symmetric matrixSkew-symmetric matrix

In mathematics, and in particular linear algebra, a skew-symmetric matrix is a square matrix A whose transpose is also its negative; that is, it satisfies the equation If the entry in the and is aij, i.e...

. In complex matrices, symmetry is often replaced by the concept of Hermitian matrices, which satisfy A∗ = A, where the star or asterisk

Asterisk

An asterisk is a typographical symbol or glyph. It is so called because it resembles a conventional image of a star. Computer scientists and mathematicians often pronounce it as star...

denotes the conjugate transpose

Conjugate transpose

In mathematics, the conjugate transpose, Hermitian transpose, Hermitian conjugate, or adjoint matrix of an m-by-n matrix A with complex entries is the n-by-m matrix A* obtained from A by taking the transpose and then taking the complex conjugate of each entry...

of the matrix, i.e., the transpose of the complex conjugate

Complex conjugate

In mathematics, complex conjugates are a pair of complex numbers, both having the same real part, but with imaginary parts of equal magnitude and opposite signs...

of A.

By the spectral theorem

Spectral theorem

In mathematics, particularly linear algebra and functional analysis, the spectral theorem is any of a number of results about linear operators or about matrices. In broad terms the spectral theorem provides conditions under which an operator or a matrix can be diagonalized...

, real symmetric matrices and complex Hermitian matrices have an eigenbasis; i.e., every vector is expressible as a linear combination

Linear combination

In mathematics, a linear combination is an expression constructed from a set of terms by multiplying each term by a constant and adding the results...

of eigenvectors. In both cases, all eigenvalues are real. This theorem can be generalized to infinite-dimensional situations related to matrices with infinitely many rows and columns, see below.

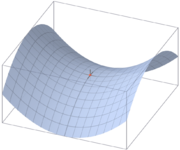

Definiteness

| Matrix A; definiteness; associated quadratic form QA(x,y); set of vectors (x,y) such that QA(x,y)=1 |

|

|

|

| positive definite | indefinite |

| 1/4 x2 + 1/4y2 | 1/4 x2 − 1/4 y2 |

| Ellipse Ellipse In geometry, an ellipse is a plane curve that results from the intersection of a cone by a plane in a way that produces a closed curve. Circles are special cases of ellipses, obtained when the cutting plane is orthogonal to the cone's axis... |

Hyperbola Hyperbola In mathematics a hyperbola is a curve, specifically a smooth curve that lies in a plane, which can be defined either by its geometric properties or by the kinds of equations for which it is the solution set. A hyperbola has two pieces, called connected components or branches, which are mirror... |

A symmetric n×n-matrix is called positive-definite

Positive-definite matrix

In linear algebra, a positive-definite matrix is a matrix that in many ways is analogous to a positive real number. The notion is closely related to a positive-definite symmetric bilinear form ....

(respectively negative-definite; indefinite), if for all nonzero vectors x ∈ Rn the associated quadratic form

Quadratic form

In mathematics, a quadratic form is a homogeneous polynomial of degree two in a number of variables. For example,4x^2 + 2xy - 3y^2\,\!is a quadratic form in the variables x and y....

given by

- Q(x) = xTAx

takes only positive values (respectively only negative values; both some negative and some positive values). If the quadratic form takes only non-negative (respectively only non-positive) values, the symmetric matrix is called positive-semidefinite (respectively negative-semidefinite); hence the matrix is indefinite precisely when it is neither positive-semidefinite nor negative-semidefinite.

A symmetric matrix is positive-definite if and only if all its eigenvalues are positive. The table at the right shows two possibilities for 2-by-2 matrices.

Allowing as input two different vectors instead yields the bilinear form associated to A:

- BA (x, y) = xTAy.

Computational aspects

In addition to theoretical knowledge of properties of matrices and their relation to other fields, it is important for practical purposes to perform matrix calculations effectively and precisely. The domain studying these matters is called numerical linear algebraNumerical linear algebra

Numerical linear algebra is the study of algorithms for performing linear algebra computations, most notably matrix operations, on computers. It is often a fundamental part of engineering and computational science problems, such as image and signal processing, Telecommunication, computational...

. As with other numerical situations, two main aspects are the complexity of algorithms and their numerical stability

Numerical stability

In the mathematical subfield of numerical analysis, numerical stability is a desirable property of numerical algorithms. The precise definition of stability depends on the context, but it is related to the accuracy of the algorithm....

. Many problems can be solved by both direct algorithms or iterative approaches. For example, finding eigenvectors can be done by finding a sequence of vectors xn converging

Limit of a sequence

The limit of a sequence is, intuitively, the unique number or point L such that the terms of the sequence become arbitrarily close to L for "large" values of n...

to an eigenvector when n tends to infinity

Infinity

Infinity is a concept in many fields, most predominantly mathematics and physics, that refers to a quantity without bound or end. People have developed various ideas throughout history about the nature of infinity...

.

Determining the complexity of an algorithm means finding upper bound

Upper bound

In mathematics, especially in order theory, an upper bound of a subset S of some partially ordered set is an element of P which is greater than or equal to every element of S. The term lower bound is defined dually as an element of P which is lesser than or equal to every element of S...

s or estimates of how many elementary operations such as additions and multiplications of scalars are necessary to perform some algorithm, e.g., multiplication of matrices. For example, calculating the matrix product of two n-by-n matrix using the definition given above needs n3 multiplications, since for any of the n2 entries of the product, n multiplications are necessary. The Strassen algorithm

Strassen algorithm

In the mathematical discipline of linear algebra, the Strassen algorithm, named after Volker Strassen, is an algorithm used for matrix multiplication...

outperforms this "naive" algorithm; it needs only n2.807 multiplications. A refined approach also incorporates specific features of the computing devices.

In many practical situations additional information about the matrices involved is known. An important case are sparse matrices

Sparse matrix

In the subfield of numerical analysis, a sparse matrix is a matrix populated primarily with zeros . The term itself was coined by Harry M. Markowitz....

, i.e., matrices most of whose entries are zero. There are specifically adapted algorithms for, say, solving linear systems Ax = b for sparse matrices A, such as the conjugate gradient method

Conjugate gradient method

In mathematics, the conjugate gradient method is an algorithm for the numerical solution of particular systems of linear equations, namely those whose matrix is symmetric and positive-definite. The conjugate gradient method is an iterative method, so it can be applied to sparse systems that are too...

.

An algorithm is, roughly speaking, numerically stable, if little deviations (such as rounding errors) do not lead to big deviations in the result. For example, calculating the inverse of a matrix via Laplace's formula (Adj (A) denotes the adjugate matrix

Adjugate matrix

In linear algebra, the adjugate or classical adjoint of a square matrix is a matrix that plays a role similar to the inverse of a matrix; it can however be defined for any square matrix without the need to perform any divisions....

of A)

- A−1 = Adj(A) / det(A)

may lead to significant rounding errors if the determinant of the matrix is very small. The norm of a matrix can be used to capture the conditioning

Condition number

In the field of numerical analysis, the condition number of a function with respect to an argument measures the asymptotically worst case of how much the function can change in proportion to small changes in the argument...

of linear algebraic problems, such as computing a matrix' inverse.

Although most computer languages are not designed with commands or libraries for matrices, as early as the 1970s, some engineering desktop computers such as the HP 9830

HP 9830

The HP 9800 was a family of what were initially called programmable calculators and later desktop computers made by Hewlett-Packard, replacing their first HP 9100 calculator...

had ROM cartridges to add BASIC commands for matrices. Some computer languages such as APL were designed to manipulate matrices, and various mathematical programs can be used to aid computing with matrices.

Matrix decomposition methods

There are several methods to render matrices into a more easily accessible form. They are generally referred to as matrix transformation or matrix decomposition techniques. The interest of all these decomposition techniques is that they preserve certain properties of the matrices in question, such as determinant, rank or inverse, so that these quantities can be calculated after applying the transformation, or that certain matrix operations are algorithmically easier to carry out for some types of matrices.The LU decomposition

LU decomposition

In linear algebra, LU decomposition is a matrix decomposition which writes a matrix as the product of a lower triangular matrix and an upper triangular matrix. The product sometimes includes a permutation matrix as well. This decomposition is used in numerical analysis to solve systems of linear...

factors matrices as a product of lower (L) and an upper triangular matrices

Triangular matrix

In the mathematical discipline of linear algebra, a triangular matrix is a special kind of square matrix where either all the entries below or all the entries above the main diagonal are zero...

(U). Once this decomposition is calculated, linear systems can be solved more efficiently, by a simple technique called forward and back substitution. Likewise, inverses of triangular matrices are algorithmically easier to calculate. The Gaussian elimination is a similar algorithm; it transforms any matrix to row echelon form

Row echelon form

In linear algebra a matrix is in row echelon form if* All nonzero rows are above any rows of all zeroes, and...

. Both methods proceed by multiplying the matrix by suitable elementary matrices, which correspond to permuting rows or columns

Permutation matrix

In mathematics, in matrix theory, a permutation matrix is a square binary matrix that has exactly one entry 1 in each row and each column and 0s elsewhere...

and adding multiples of one row to another row. Singular value decomposition

Singular value decomposition

In linear algebra, the singular value decomposition is a factorization of a real or complex matrix, with many useful applications in signal processing and statistics....

expresses any matrix A as a product UDV∗, where U and V are unitary matrices and D is a diagonal matrix.

The eigendecomposition or diagonalization expresses A as a product VDV−1, where D is a diagonal matrix and V is a suitable invertible matrix. If A can be written in this form, it is called diagonalizable

Diagonalizable matrix

In linear algebra, a square matrix A is called diagonalizable if it is similar to a diagonal matrix, i.e., if there exists an invertible matrix P such that P −1AP is a diagonal matrix...

. More generally, and applicable to all matrices, the Jordan decomposition transforms a matrix into Jordan normal form

Jordan normal form

In linear algebra, a Jordan normal form of a linear operator on a finite-dimensional vector space is an upper triangular matrix of a particular form called Jordan matrix, representing the operator on some basis...

, that is to say matrices whose only nonzero entries are the eigenvalues λ1 to λn of A, placed on the main diagonal and possibly entries equal to one directly above the main diagonal, as shown at the right. Given the eigendecomposition, the nth power of A (i.e., n-fold iterated matrix multiplication) can be calculated via

- An = (VDV−1)n = VDV−1VDV−1...VDV−1 = VDnV−1

and the power of a diagonal matrix can be calculated by taking the corresponding powers of the diagonal entries, which is much easier than doing the exponentiation for A instead. This can be used to compute the matrix exponential

Matrix exponential

In mathematics, the matrix exponential is a matrix function on square matrices analogous to the ordinary exponential function. Abstractly, the matrix exponential gives the connection between a matrix Lie algebra and the corresponding Lie group....

eA, a need frequently arising in solving linear differential equation

Linear differential equation

Linear differential equations are of the formwhere the differential operator L is a linear operator, y is the unknown function , and the right hand side ƒ is a given function of the same nature as y...

s, matrix logarithms and square roots of matrices

Square root of a matrix

In mathematics, the square root of a matrix extends the notion of square root from numbers to matrices. A matrix B is said to be a square root of A if the matrix product B · B is equal to A.-Properties:...

. To avoid numerically ill-conditioned

Condition number

In the field of numerical analysis, the condition number of a function with respect to an argument measures the asymptotically worst case of how much the function can change in proportion to small changes in the argument...

situations, further algorithms such as the Schur decomposition

Schur decomposition

In the mathematical discipline of linear algebra, the Schur decomposition or Schur triangulation, named after Issai Schur, is a matrix decomposition.- Statement :...

can be employed.

Abstract algebraic aspects and generalizations

Matrices can be generalized in different ways. Abstract algebra uses matrices with entries in more general fieldsField (mathematics)

In abstract algebra, a field is a commutative ring whose nonzero elements form a group under multiplication. As such it is an algebraic structure with notions of addition, subtraction, multiplication, and division, satisfying certain axioms...

or even rings

Ring (mathematics)

In mathematics, a ring is an algebraic structure consisting of a set together with two binary operations usually called addition and multiplication, where the set is an abelian group under addition and a semigroup under multiplication such that multiplication distributes over addition...

, while linear algebra codifies properties of matrices in the notion of linear maps. It is possible to consider matrices with infinitely many columns and rows. Another extension are tensors, which can be seen as higher-dimensional arrays of numbers, as opposed to vectors, which can often be realised as sequences of numbers, while matrices are rectangular or two-dimensional array of numbers. Matrices, subject to certain requirements tend to form groups

Group (mathematics)

In mathematics, a group is an algebraic structure consisting of a set together with an operation that combines any two of its elements to form a third element. To qualify as a group, the set and the operation must satisfy a few conditions called group axioms, namely closure, associativity, identity...

known as matrix groups.

Matrices with more general entries

This article focuses on matrices whose entries are real or complex numberComplex number

A complex number is a number consisting of a real part and an imaginary part. Complex numbers extend the idea of the one-dimensional number line to the two-dimensional complex plane by using the number line for the real part and adding a vertical axis to plot the imaginary part...

s. However, matrices can be considered with much more general types of entries than real or complex numbers. As a first step of generalization, any field

Field (mathematics)

In abstract algebra, a field is a commutative ring whose nonzero elements form a group under multiplication. As such it is an algebraic structure with notions of addition, subtraction, multiplication, and division, satisfying certain axioms...

, i.e., a set where addition

Addition

Addition is a mathematical operation that represents combining collections of objects together into a larger collection. It is signified by the plus sign . For example, in the picture on the right, there are 3 + 2 apples—meaning three apples and two other apples—which is the same as five apples....

, subtraction

Subtraction

In arithmetic, subtraction is one of the four basic binary operations; it is the inverse of addition, meaning that if we start with any number and add any number and then subtract the same number we added, we return to the number we started with...

, multiplication

Multiplication

Multiplication is the mathematical operation of scaling one number by another. It is one of the four basic operations in elementary arithmetic ....

and division

Division (mathematics)

right|thumb|200px|20 \div 4=5In mathematics, especially in elementary arithmetic, division is an arithmetic operation.Specifically, if c times b equals a, written:c \times b = a\,...

operations are defined and well-behaved, may be used instead of R or C, for example rational number

Rational number

In mathematics, a rational number is any number that can be expressed as the quotient or fraction a/b of two integers, with the denominator b not equal to zero. Since b may be equal to 1, every integer is a rational number...

s or finite field

Finite field

In abstract algebra, a finite field or Galois field is a field that contains a finite number of elements. Finite fields are important in number theory, algebraic geometry, Galois theory, cryptography, and coding theory...

s. For example, coding theory

Coding theory

Coding theory is the study of the properties of codes and their fitness for a specific application. Codes are used for data compression, cryptography, error-correction and more recently also for network coding...

makes use of matrices over finite fields. Wherever eigenvalues are considered, as these are roots of a polynomial they may exist only in a larger field than that of the coefficients of the matrix; for instance they may be complex in case of a matrix with real entries. The possibility to reinterpret the entries of a matrix as elements of a larger field (e.g., to view a real matrix as a complex matrix whose entries happen to be all real) then allows considering each square matrix to possess a full set of eigenvalues. Alternatively one can consider only matrices with entries in an algebraically closed field

Algebraically closed field

In mathematics, a field F is said to be algebraically closed if every polynomial with one variable of degree at least 1, with coefficients in F, has a root in F.-Examples:...

, such as C, from the outset.

More generally, abstract algebra makes great use of matrices with entries in a ring

Ring (mathematics)

In mathematics, a ring is an algebraic structure consisting of a set together with two binary operations usually called addition and multiplication, where the set is an abelian group under addition and a semigroup under multiplication such that multiplication distributes over addition...

R. Rings are a more general notion than fields in that no division operation exists. The very same addition and multiplication operations of matrices extend to this setting, too. The set M(n, R) of all square n-by-n matrices over R is a ring called matrix ring

Matrix ring

In abstract algebra, a matrix ring is any collection of matrices forming a ring under matrix addition and matrix multiplication. The set of n×n matrices with entries from another ring is a matrix ring, as well as some subsets of infinite matrices which form infinite matrix rings...

, isomorphic to the endomorphism ring

Endomorphism ring

In abstract algebra, one associates to certain objects a ring, the object's endomorphism ring, which encodes several internal properties of the object; this may be denoted End...

of the left R-module

Module (mathematics)

In abstract algebra, the concept of a module over a ring is a generalization of the notion of vector space, wherein the corresponding scalars are allowed to lie in an arbitrary ring...

Rn. If the ring R is commutative

Commutative ring

In ring theory, a branch of abstract algebra, a commutative ring is a ring in which the multiplication operation is commutative. The study of commutative rings is called commutative algebra....

, i.e., its multiplication is commutative, then M(n, R) is a unitary noncommutative (unless n = 1) associative algebra

Associative algebra

In mathematics, an associative algebra A is an associative ring that has a compatible structure of a vector space over a certain field K or, more generally, of a module over a commutative ring R...

over R. The determinant

Determinant

In linear algebra, the determinant is a value associated with a square matrix. It can be computed from the entries of the matrix by a specific arithmetic expression, while other ways to determine its value exist as well...

of square matrices over a commutative ring R can still be defined using the Leibniz formula; such a matrix is invertible if and only if its determinant is invertible in R, generalising the situation over a field F, where every nonzero element is invertible. Matrices over superrings are called supermatrices

Supermatrix

In mathematics and theoretical physics, a supermatrix is a Z2-graded analog of an ordinary matrix. Specifically, a supermatrix is a 2×2 block matrix with entries in a superalgebra...

.

Matrices do not always have all their entries in the same ring – or even in any ring at all. One special but common case is block matrices

Block matrix

In the mathematical discipline of matrix theory, a block matrix or a partitioned matrix is a matrix broken into sections called blocks. Looking at it another way, the matrix is written in terms of smaller matrices. We group the rows and columns into adjacent 'bunches'. A partition is the rectangle...

, which may be considered as matrices whose entries themselves are matrices. The entries need not be quadratic matrices, and thus need not be members of any ordinary ring

Ring (mathematics)

In mathematics, a ring is an algebraic structure consisting of a set together with two binary operations usually called addition and multiplication, where the set is an abelian group under addition and a semigroup under multiplication such that multiplication distributes over addition...

; but their sizes must fulfil certain compatibility conditions.

Relationship to linear maps

Linear maps Rn → Rm are equivalent to m-by-n matrices, as described above. More generally, any linear map between finite-dimensional vector spaceVector space

A vector space is a mathematical structure formed by a collection of vectors: objects that may be added together and multiplied by numbers, called scalars in this context. Scalars are often taken to be real numbers, but one may also consider vector spaces with scalar multiplication by complex...

s can be described by a matrix A = (aij), after choosing bases

Basis (linear algebra)

In linear algebra, a basis is a set of linearly independent vectors that, in a linear combination, can represent every vector in a given vector space or free module, or, more simply put, which define a "coordinate system"...

v1, ..., vn of V, and w1, ..., wm of W (so n is the dimension of V and m is the dimension of W), which is such that

In other words, column j of A expresses the image of vj in terms of the basis vectors wi of W; thus this relation uniquely determines the entries of the matrix A. Note that the matrix depends on the choice of the bases: different choices of bases give rise to different, but equivalent matrices. Many of the above concrete notions can be reinterpreted in this light, for example, the transpose matrix AT describes the transpose of the linear map given by A, with respect to the dual bases

Dual space

In mathematics, any vector space, V, has a corresponding dual vector space consisting of all linear functionals on V. Dual vector spaces defined on finite-dimensional vector spaces can be used for defining tensors which are studied in tensor algebra...

.

More generally, the set of m×n matrices can be used to represent the R-linear maps between the free modules Rm and Rn for an arbitrary ring R with unity. When n = m composition of these maps is possible, and this gives rise to the matrix ring

Matrix ring

In abstract algebra, a matrix ring is any collection of matrices forming a ring under matrix addition and matrix multiplication. The set of n×n matrices with entries from another ring is a matrix ring, as well as some subsets of infinite matrices which form infinite matrix rings...

of n×n matrices representing the endomorphism ring

Endomorphism ring

In abstract algebra, one associates to certain objects a ring, the object's endomorphism ring, which encodes several internal properties of the object; this may be denoted End...

of Rn.

Matrix groups

A groupGroup (mathematics)

In mathematics, a group is an algebraic structure consisting of a set together with an operation that combines any two of its elements to form a third element. To qualify as a group, the set and the operation must satisfy a few conditions called group axioms, namely closure, associativity, identity...

is a mathematical structure consisting of a set of objects together with a binary operation

Binary operation

In mathematics, a binary operation is a calculation involving two operands, in other words, an operation whose arity is two. Examples include the familiar arithmetic operations of addition, subtraction, multiplication and division....

, i.e., an operation combining any two objects to a third, subject to certain requirements. A group in which the objects are matrices and the group operation is matrix multiplication is called a matrix group.Additionally, the group is required to be closed in the general linear group. Since in a group every element has to be invertible, the most general matrix groups are the groups of all invertible matrices of a given size, called the general linear group

General linear group

In mathematics, the general linear group of degree n is the set of n×n invertible matrices, together with the operation of ordinary matrix multiplication. This forms a group, because the product of two invertible matrices is again invertible, and the inverse of an invertible matrix is invertible...

s.

Any property of matrices that is preserved under matrix products and inverses can be used to define further matrix groups. For example, matrices with a given size and with a determinant of 1 form a subgroup

Subgroup

In group theory, given a group G under a binary operation *, a subset H of G is called a subgroup of G if H also forms a group under the operation *. More precisely, H is a subgroup of G if the restriction of * to H x H is a group operation on H...

of (i.e., a smaller group contained in) their general linear group, called a special linear group

Special linear group

In mathematics, the special linear group of degree n over a field F is the set of n×n matrices with determinant 1, with the group operations of ordinary matrix multiplication and matrix inversion....

. Orthogonal matrices

Orthogonal matrix

In linear algebra, an orthogonal matrix , is a square matrix with real entries whose columns and rows are orthogonal unit vectors ....

, determined by the condition

- MTM = I,

form the orthogonal group

Orthogonal group

In mathematics, the orthogonal group of degree n over a field F is the group of n × n orthogonal matrices with entries from F, with the group operation of matrix multiplication...

. They are called orthogonal since the associated linear transformations of Rn preserve angles in the sense that the scalar product of two vectors is unchanged after applying M to them: · (Mw) = v · w.

Every finite group

Finite group

In mathematics and abstract algebra, a finite group is a group whose underlying set G has finitely many elements. During the twentieth century, mathematicians investigated certain aspects of the theory of finite groups in great depth, especially the local theory of finite groups, and the theory of...

is isomorphic to a matrix group, as one can see by considering the regular representation

Regular representation

In mathematics, and in particular the theory of group representations, the regular representation of a group G is the linear representation afforded by the group action of G on itself by translation....

of the symmetric group

Symmetric group

In mathematics, the symmetric group Sn on a finite set of n symbols is the group whose elements are all the permutations of the n symbols, and whose group operation is the composition of such permutations, which are treated as bijective functions from the set of symbols to itself...

. General groups can be studied using matrix groups, which are comparatively well-understood, by means of representation theory

Representation theory

Representation theory is a branch of mathematics that studies abstract algebraic structures by representing their elements as linear transformations of vector spaces, and studiesmodules over these abstract algebraic structures...

.

Infinite matrices

It is also possible to consider matrices with infinitely many rows and/or columns even if, being infinite objects, one cannot write down such matrices explicitly. All that matters is that for every element in the set indexing rows, and every element in the set indexing columns, there is a well-defined entry (these index sets need not even be subsets of the natural numbers). The basic operations of addition, subtraction, scalar multiplication and transposition can still be defined without problem; however matrix multiplication may involve infinite summations to define the resulting entries, and these are not defined in general.If R is any ring with unity, then the ring of endomorphisms of

as a right R module is isomorphic to the ring of column finite matrices

as a right R module is isomorphic to the ring of column finite matrices  whose entries are indexed by

whose entries are indexed by  , and whose columns each contain only finitely many nonzero entries. The endomorphisms of M considered as a left R module result in an analogous object, the row finite matrices

, and whose columns each contain only finitely many nonzero entries. The endomorphisms of M considered as a left R module result in an analogous object, the row finite matrices  whose rows each only have finitely many nonzero entries.

whose rows each only have finitely many nonzero entries.If infinite matrices are used to describe linear maps, then only those matrices can be used all of whose columns have but a finite number of nonzero entries, for the following reason. For a matrix A to describe a linear map f: V→W, bases for both spaces must have been chosen; recall that by definition this means that every vector in the space can be written uniquely as a (finite) linear combination

Linear combination

In mathematics, a linear combination is an expression constructed from a set of terms by multiplying each term by a constant and adding the results...

of basis vectors, so that written as a (column) vector v of coefficients, only finitely many entries vi are nonzero. Now the columns of A describe the images by f of individual basis vectors of V in the basis of W, which is only meaningful if these columns have only finitely many nonzero entries. There is no restriction on the rows of A however: in the product A·v there are only finitely many nonzero coefficients of v involved, so every one of its entries, even if it is given as an infinite sum of products, involves only finitely many nonzero terms and is therefore well defined. Moreover this amounts to forming a linear combination of the columns of A that effectively involves only finitely many of them, whence the result has only finitely many nonzero entries, because each of those columns do. One also sees that products of two matrices of the given type is well defined (provided as usual that the column-index and row-index sets match), is again of the same type, and corresponds to the composition of linear maps.

If R is a normed ring, then the condition of row or column finiteness can be relaxed. With the norm in place, absolutely convergent series can be used instead of finite sums. For example, the matrices whose column sums are absolutely convergent sequences form a ring. Analogously of course, the matrices whose row sums are absolutely convergent series also form a ring.

In that vein, infinite matrices can also be used to describe operators on Hilbert spaces, where convergence and continuity

Continuous function

In mathematics, a continuous function is a function for which, intuitively, "small" changes in the input result in "small" changes in the output. Otherwise, a function is said to be "discontinuous". A continuous function with a continuous inverse function is called "bicontinuous".Continuity of...

questions arise, which again results in certain constraints that have to be imposed. However, the explicit point of view of matrices tends to obfuscate the matter,"Not much of matrix theory carries over to infinite-dimensional spaces, and what does is not so useful, but it sometimes helps." and the abstract and more powerful tools of functional analysis

Functional analysis

Functional analysis is a branch of mathematical analysis, the core of which is formed by the study of vector spaces endowed with some kind of limit-related structure and the linear operators acting upon these spaces and respecting these structures in a suitable sense...

can be used instead.

Empty matrices

An empty matrix is a matrix in which the number of rows or columns (or both) is zero. Empty matrices help dealing with maps involving the zero vector space. For example, if A is a 3-by-0 matrix A and B is a 0-by-3 matrix, then AB is the 3-by-3 zero matrix corresponding to the null map from a 3-dimensional space V to itself, while BA is a 0-by-0 matrix. There is no common notation for empty matrices, but most computer algebra systemComputer algebra system

A computer algebra system is a software program that facilitates symbolic mathematics. The core functionality of a CAS is manipulation of mathematical expressions in symbolic form.-Symbolic manipulations:...

s allow creating and computing with them. The determinant of the 0-by-0 matrix is 1 as follows from regarding the empty product

Empty product

In mathematics, an empty product, or nullary product, is the result of multiplying no factors. It is equal to the multiplicative identity 1, given that it exists for the multiplication operation in question, just as the empty sum—the result of adding no numbers—is zero, or the additive...

occurring in the Leibniz formula for the determinant as 1. This value is also consistent with the fact that the identity map from any finite dimensional space to itself has determinant 1, a fact that is often used as a part of the characterization of determinants.

Applications

There are numerous applications of matrices, both in mathematics and other sciences. Some of them merely take advantage of the compact representation of a set of numbers in a matrix. For example, in game theoryGame theory

Game theory is a mathematical method for analyzing calculated circumstances, such as in games, where a person’s success is based upon the choices of others...

and economics

Economics

Economics is the social science that analyzes the production, distribution, and consumption of goods and services. The term economics comes from the Ancient Greek from + , hence "rules of the house"...

, the payoff matrix encodes the payoff for two players, depending on which out of a given (finite) set of alternatives the players choose. Text mining

Text mining

Text mining, sometimes alternately referred to as text data mining, roughly equivalent to text analytics, refers to the process of deriving high-quality information from text. High-quality information is typically derived through the devising of patterns and trends through means such as...

and automated thesaurus

Thesaurus

A thesaurus is a reference work that lists words grouped together according to similarity of meaning , in contrast to a dictionary, which contains definitions and pronunciations...

compilation makes use of document-term matrices

Document-term matrix

A document-term matrix or term-document matrix is a mathematical matrix that describes the frequency of terms that occur in a collection of documents. In a document-term matrix, rows correspond to documents in the collection and columns correspond to terms. There are various schemes for determining...

such as tf-idf to track frequencies of certain words in several documents.

Complex numbers can be represented by particular real 2-by-2 matrices via

under which addition and multiplication of complex numbers and matrices correspond to each other. For example, 2-by-2 rotation matrices represent the multiplication with some complex number of absolute value

Absolute value

In mathematics, the absolute value |a| of a real number a is the numerical value of a without regard to its sign. So, for example, the absolute value of 3 is 3, and the absolute value of -3 is also 3...

1, as above. A similar interpretation is possible for quaternion

Quaternion

In mathematics, the quaternions are a number system that extends the complex numbers. They were first described by Irish mathematician Sir William Rowan Hamilton in 1843 and applied to mechanics in three-dimensional space...

s.

Early encryption

Encryption

In cryptography, encryption is the process of transforming information using an algorithm to make it unreadable to anyone except those possessing special knowledge, usually referred to as a key. The result of the process is encrypted information...

techniques such as the Hill cipher

Hill cipher

In classical cryptography, the Hill cipher is a polygraphic substitution cipher based on linear algebra. Invented by Lester S. Hill in 1929, it was the first polygraphic cipher in which it was practical to operate on more than three symbols at once. The following discussion assumes an elementary...

also used matrices. However, due to the linear nature of matrices, these codes are comparatively easy to break. Computer graphics

Computer graphics

Computer graphics are graphics created using computers and, more generally, the representation and manipulation of image data by a computer with help from specialized software and hardware....

uses matrices both to represent objects and to calculate transformations of objects using affine rotation matrices to accomplish tasks such as projecting a three-dimensional object onto a two-dimensional screen, corresponding to a theoretical camera observation. Matrices over a polynomial ring

Polynomial ring

In mathematics, especially in the field of abstract algebra, a polynomial ring is a ring formed from the set of polynomials in one or more variables with coefficients in another ring. Polynomial rings have influenced much of mathematics, from the Hilbert basis theorem, to the construction of...

are important in the study of control theory

Control theory

Control theory is an interdisciplinary branch of engineering and mathematics that deals with the behavior of dynamical systems. The desired output of a system is called the reference...

.

Chemistry

Chemistry

Chemistry is the science of matter, especially its chemical reactions, but also its composition, structure and properties. Chemistry is concerned with atoms and their interactions with other atoms, and particularly with the properties of chemical bonds....

makes use of matrices in various ways, particularly since the use of quantum theory

Quantum mechanics

Quantum mechanics, also known as quantum physics or quantum theory, is a branch of physics providing a mathematical description of much of the dual particle-like and wave-like behavior and interactions of energy and matter. It departs from classical mechanics primarily at the atomic and subatomic...

to discuss molecular bonding

Chemical bond

A chemical bond is an attraction between atoms that allows the formation of chemical substances that contain two or more atoms. The bond is caused by the electromagnetic force attraction between opposite charges, either between electrons and nuclei, or as the result of a dipole attraction...

and spectroscopy

Spectroscopy

Spectroscopy is the study of the interaction between matter and radiated energy. Historically, spectroscopy originated through the study of visible light dispersed according to its wavelength, e.g., by a prism. Later the concept was expanded greatly to comprise any interaction with radiative...

. Examples are the overlap matrix

Overlap matrix

The overlap matrix is a square matrix, used in quantum chemistry to describe the inter-relationship of a set of basis vectors of a quantum system. In particular, if the vectors are orthogonal to one another, the overlap matrix will be diagonal. In addition, if the basis vectors form an...

and the Fock matrix

Fock matrix

In the Hartree-Fock method of quantum mechanics, the Fock matrix is a matrix approximating the single-electron energy operator of a given quantum system in a given set of basis vectors....

used in solving the Roothaan equations

Roothaan equations

The Roothaan equations are a representation of the Hartree-Fock equation in a non orthonormal basis set which can be of Gaussian-type or Slater-type. It applies to closed-shell molecules or atoms where all molecular orbitals or atomic orbitals, respectively, are doubly occupied. This is generally...

to obtain the molecular orbital

Molecular orbital

In chemistry, a molecular orbital is a mathematical function describing the wave-like behavior of an electron in a molecule. This function can be used to calculate chemical and physical properties such as the probability of finding an electron in any specific region. The term "orbital" was first...

s of the Hartree–Fock method.

Graph theory

The adjacency matrixAdjacency matrix

In mathematics and computer science, an adjacency matrix is a means of representing which vertices of a graph are adjacent to which other vertices...

of a finite graph is a basic notion of graph theory

Graph theory

In mathematics and computer science, graph theory is the study of graphs, mathematical structures used to model pairwise relations between objects from a certain collection. A "graph" in this context refers to a collection of vertices or 'nodes' and a collection of edges that connect pairs of...

. It saves which vertices of the graph are connected by an edge. Matrices containing just two different values (0 and 1 meaning for example "yes" and "no") are called logical matrices. The distance (or cost) matrix

Distance matrix

In mathematics, computer science and graph theory, a distance matrix is a matrix containing the distances, taken pairwise, of a set of points...

contains information about distances of the edges. These concepts can be applied to website

Website

A website, also written as Web site, web site, or simply site, is a collection of related web pages containing images, videos or other digital assets. A website is hosted on at least one web server, accessible via a network such as the Internet or a private local area network through an Internet...

s connected hyperlink

Hyperlink

In computing, a hyperlink is a reference to data that the reader can directly follow, or that is followed automatically. A hyperlink points to a whole document or to a specific element within a document. Hypertext is text with hyperlinks...

s or cities connected by roads etc., in which case (unless the road network is extremely dense) the matrices tend to be sparse

Sparse matrix

In the subfield of numerical analysis, a sparse matrix is a matrix populated primarily with zeros . The term itself was coined by Harry M. Markowitz....

, i.e., contain few nonzero entries. Therefore, specifically tailored matrix algorithms can be used in network theory

Network theory

Network theory is an area of computer science and network science and part of graph theory. It has application in many disciplines including statistical physics, particle physics, computer science, biology, economics, operations research, and sociology...

.

Analysis and geometry

The Hessian matrixHessian matrix

In mathematics, the Hessian matrix is the square matrix of second-order partial derivatives of a function; that is, it describes the local curvature of a function of many variables. The Hessian matrix was developed in the 19th century by the German mathematician Ludwig Otto Hesse and later named...

of a differentiable function

Differentiable function

In calculus , a differentiable function is a function whose derivative exists at each point in its domain. The graph of a differentiable function must have a non-vertical tangent line at each point in its domain...

ƒ: Rn → R consists of the second derivative

Second derivative

In calculus, the second derivative of a function ƒ is the derivative of the derivative of ƒ. Roughly speaking, the second derivative measures how the rate of change of a quantity is itself changing; for example, the second derivative of the position of a vehicle with respect to time is...

s of ƒ with respect to the several coordinate directions, i.e.

Critical point (mathematics)

In calculus, a critical point of a function of a real variable is any value in the domain where either the function is not differentiable or its derivative is 0. The value of the function at a critical point is a critical value of the function...

x = (x1, ..., xn), i.e., a point where the first partial derivatives

of ƒ vanish, the function has a local minimum if the Hessian matrix is positive definite. Quadratic programming

of ƒ vanish, the function has a local minimum if the Hessian matrix is positive definite. Quadratic programmingQuadratic programming

Quadratic programming is a special type of mathematical optimization problem. It is the problem of optimizing a quadratic function of several variables subject to linear constraints on these variables....

can be used to find global minima or maxima of quadratic functions closely related to the ones attached to matrices (see above).

Another matrix frequently used in geometrical situations is the Jacobi matrix of a differentiable map f: Rn → Rm. If f1, ..., fm denote the components of f, then the Jacobi matrix is defined as

If n > m, and if the rank of the Jacobi matrix attains its maximal value m, f is locally invertible at that point, by the implicit function theorem

Implicit function theorem

In multivariable calculus, the implicit function theorem is a tool which allows relations to be converted to functions. It does this by representing the relation as the graph of a function. There may not be a single function whose graph is the entire relation, but there may be such a function on...

.

Partial differential equation

Partial differential equation

In mathematics, partial differential equations are a type of differential equation, i.e., a relation involving an unknown function of several independent variables and their partial derivatives with respect to those variables...