File system fragmentation


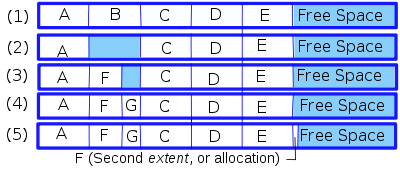

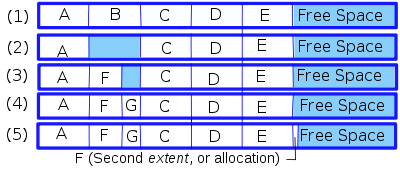


In computing, file system fragmentation, sometimes called file system aging, is the inability of a file system to lay out related data sequentially (contiguously), an inherent phenomenon in storage-backed file systems that allow in-place modification of their contents. It is a special case of data fragmentation. File system fragmentation increases disk head movement, or seeks, which are known to hinder throughput. The correction to existing fragmentation is to reorganize files and free space back into contiguous areas, a process called defragmentation.

Cause

When a file system is first initialized on a partition (the partition is formatted for the file system), the partition contains only a few small internal structures and is otherwise one contiguous block of empty space. This means that the allocator algorithm is completely free to place newly created files anywhere on the partition. For some time after creation, files on the file system can be laid out near-optimally. When the operating system and applications are installed or other archives are unpacked, laying out separate files sequentially also means that related files are likely to be positioned close to each other.

However, as existing files are deleted or truncated, new regions of free space are created. When existing files are appended to, it is often impossible to resume the write exactly where the file used to end, as another file may already be allocated there — thus, a new fragment has to be allocated. As time goes on, and the same factors are continuously present, free space as well as frequently appended files tend to fragment more. Shorter regions of free space also mean that the allocator is no longer able to allocate new files contiguously, and has to break them into fragments. This is especially true when the file system is more full — longer contiguous regions of free space are less likely to occur.

Note that the following is a simplification of an otherwise complicated subject. The method described here has been the general practice for allocating files on disk and other random-access storage for over 30 years. Some operating systems do not simply allocate files one after the other, and some use various methods to try to prevent fragmentation. In general, however, fragmentation will sooner or later occur on any system where files are routinely deleted or expanded, for the reasons explained below. Consider the following scenario, as shown by the image on the right:

A new disk has had 5 files saved on it, named A, B, C, D and E, and each file is using 10 blocks of space (here the block size is unimportant). As the free space is contiguous, the files are located one after the other (Example (1)).

If file B is deleted, a second region of 10 blocks of free space is created, and the disk becomes fragmented. The file system could defragment the disk immediately after a deletion, which would incur a severe performance penalty at unpredictable times, but in general the empty space is simply left there, marked in a table as available for later use, then used again as needed (Example (2).)

Now if a new file F requires 7 blocks of space, it can be placed into the first 7 blocks of the space formerly holding the file B, and the 3 blocks following it will remain available (Example (3).) If another new file G is added, and needs only three blocks, it could then occupy the space after F and before C (Example (4).)

If subsequently F needs to be expanded, since the space immediately following it is occupied, there are three options: (1) add a new block somewhere else and indicate that F has a second extent, (2) move files in the way of the expansion elsewhere, to allow F to remain contiguous; or (3) move file F so it can be one contiguous file of the new, larger size. The second option is probably impractical for performance reasons, as is the third when the file is very large. Indeed the third option is impossible when there is no single contiguous free space large enough to hold the new file. Thus the usual practice is simply to create an extent somewhere else and chain the new extent onto the old one (Example (5).)

Material added to the end of file F would be part of the same extent. But if there is so much material that no room is available after the last extent, then another extent would have to be created, and so on. Eventually the file system has free segments in many places and some files may be spread over many extents. Access time for those files (or for all files) may become excessively long.
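The scenario above can be replayed with a small simulation. This is only a sketch under stated assumptions: the `Disk` class, the 60-block disk size, the front-to-back allocation policy and the 5-block growth of F are illustrative choices, not details given in the text.

```python
from typing import List, Optional, Tuple

FREE = None  # marker for an unallocated block


class Disk:
    """Toy block device that fills free space front to back (assumed policy)."""

    def __init__(self, size: int) -> None:
        self.blocks: List[Optional[str]] = [FREE] * size

    def free_runs(self) -> List[Tuple[int, int]]:
        """Return (start, length) for every run of free blocks, in disk order."""
        runs, start = [], None
        for i, owner in enumerate(self.blocks + ["end"]):  # sentinel closes a trailing run
            if owner is FREE and start is None:
                start = i
            elif owner is not FREE and start is not None:
                runs.append((start, i - start))
                start = None
        return runs

    def write(self, name: str, count: int) -> None:
        """Give `count` more blocks to `name`; creating and appending are the same here."""
        if count > self.blocks.count(FREE):
            raise OSError("disk full")
        for start, length in self.free_runs():
            take = min(count, length)
            self.blocks[start:start + take] = [name] * take
            count -= take
            if count == 0:
                return

    def delete(self, name: str) -> None:
        """Mark every block of `name` as free; nothing is moved."""
        self.blocks = [FREE if b == name else b for b in self.blocks]

    def extents(self, name: str) -> List[Tuple[int, int]]:
        """Return the (start, length) extents occupied by `name`."""
        out, start = [], None
        for i, owner in enumerate(self.blocks + [FREE]):  # sentinel closes a trailing extent
            if owner == name and start is None:
                start = i
            elif owner != name and start is not None:
                out.append((start, i - start))
                start = None
        return out


disk = Disk(60)                # assumed size: room for A-E plus 10 spare blocks
for name in "ABCDE":
    disk.write(name, 10)       # Example (1): five contiguous files
disk.delete("B")               # Example (2): a 10-block hole appears
disk.write("F", 7)             # Example (3): F takes the first 7 blocks of the hole
disk.write("G", 3)             # Example (4): G fills the remaining 3 blocks
disk.write("F", 5)             # Example (5): F grows and needs a second extent
print(disk.extents("F"))       # [(10, 7), (50, 5)]
```

File F ends up in two extents separated by G, C, D and E, which is the situation described in Example (5).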

To summarize, factors that typically cause or facilitate fragmentation include:

- low free space.

- frequent deletion, truncation or extension of files.

- overuse of sparse files.

Performance implications

File system fragmentation is projected to become more problematic with newer hardware due to the increasing disparity between sequential access speed and rotational latency (and to a lesser extent seek time) of consumer-grade hard disks, on which file systems are usually placed. Thus, fragmentation is an important problem in recent file system research and design. The containment of fragmentation depends not only on the on-disk format of the file system, but also heavily on its implementation.
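A back-of-the-envelope model shows why this disparity matters. The sketch below assumes that each fragment costs one seek plus one average rotational delay before data can be transferred; the function name and the default figures (100 MiB/s, 9 ms, 4.2 ms) are round illustrative numbers, not measurements from the text.

```python
def estimated_read_ms(size_mib: float, fragments: int,
                      throughput_mib_s: float = 100.0,
                      seek_ms: float = 9.0,
                      rotational_ms: float = 4.2) -> float:
    """Rough time in milliseconds to read a file stored in `fragments` pieces."""
    if fragments < 1:
        raise ValueError("a non-empty file has at least one fragment")
    transfer_ms = size_mib / throughput_mib_s * 1000.0       # sequential part
    positioning_ms = fragments * (seek_ms + rotational_ms)   # one repositioning per fragment
    return transfer_ms + positioning_ms


contiguous = estimated_read_ms(10, fragments=1)    # about 113 ms
fragmented = estimated_read_ms(10, fragments=50)   # about 760 ms
print(round(contiguous), round(fragmented))
```

Under these assumptions, raising sequential throughput shrinks only the transfer term, so the positioning cost of a fragmented file makes up a growing share of the total.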

In simple file system benchmarks, the fragmentation factor is often omitted, as realistic aging and fragmentation are difficult to model. Rather, for simplicity of comparison, file system benchmarks are often run on empty file systems, and unsurprisingly, the results may vary heavily from real-life access patterns.

File system fragmentation has less impact on the performance of solid-state drives, as there is no mechanical seek time involved as there is with rotating media. Additional non-sequential I/O operations still affect system performance, however, and many file system architectures consume additional internal resources when fragmentation is present.

Types of fragmentation

File system fragmentation may occur on several levels:

- Fragmentation within individual files and their metadata.

- Free space fragmentation, making it increasingly difficult to lay out new files contiguously.

- The decrease of locality of reference between separate, but related files.
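The three levels can be made concrete on a toy block map. In the sketch below the map is a string with one character per block; the `.` marker for free space, the helper names and the span-based scattering measure are all assumptions chosen for illustration, and the last is only a crude proxy.

```python
from itertools import groupby
from typing import Iterable, List, Tuple

FREE = "."  # assumed marker for an unallocated block


def runs(block_map: str) -> List[Tuple[str, int, int]]:
    """Collapse a block map into (owner, start, length) runs."""
    out, pos = [], 0
    for owner, group in groupby(block_map):
        length = len(list(group))
        out.append((owner, pos, length))
        pos += length
    return out


def fragment_count(block_map: str, owner: str) -> int:
    """Number of separate runs held by `owner` (pass FREE for free space)."""
    return sum(1 for o, _, _ in runs(block_map) if o == owner)


def scatter_span(block_map: str, related: Iterable[str]) -> int:
    """Blocks spanned from the first to the last block of a group of related files."""
    wanted = set(related)
    positions = [i for i, o in enumerate(block_map) if o in wanted]
    return positions[-1] - positions[0] + 1 if positions else 0


layout = "AAB.A..BCC."
print(fragment_count(layout, "A"))    # 2: file A is split into two pieces
print(fragment_count(layout, FREE))   # 3: free space is split into three regions
print(scatter_span(layout, "AB"))     # 8: files A and B own 5 blocks spread over 8
```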

File fragmentation

Individual file fragmentation occurs when a single file has been broken into multiple pieces (called extents on extent-based file systems). While disk file systems attempt to keep individual files contiguous, this is often not possible without significant performance penalties. File system check and defragmentation tools typically only account for file fragmentation in their "fragmentation percentage" statistic.

Free space fragmentation

Free (unallocated) space fragmentation occurs when there are several unused areas of the file system where new files or metadata can be written to. Unwanted free space fragmentation is generally caused by deletion or truncation of files, but file systems may also intentionally insert fragments ("bubbles") of free space in order to facilitate extending nearby files (see preemptive techniques below).

File scattering

File scattering, also called related-file fragmentation or application-level (file) fragmentation, refers to the lack of locality of reference (within the storing medium) between related files (see file sequence for more detail). Unlike the previous two types of fragmentation, file scattering is a much vaguer concept, as it depends heavily on the access pattern of specific applications. This also makes objectively measuring or estimating it very difficult. However, arguably, it is the most critical type of fragmentation, as studies have found that the most frequently accessed files tend to be small compared to available disk throughput per second.

To avoid related file fragmentation and improve locality of reference (in this case called file contiguity), assumptions about the operation of applications have to be made. A very frequent assumption is that it is worthwhile to keep smaller files within a single directory together, and lay them out in the natural file system order. While this is often a reasonable assumption, it does not always hold. For example, an application might read several different files, perhaps in different directories, in exactly the same order they were written. Thus, a file system that simply orders all writes successively might work faster for the given application.

Techniques for mitigating fragmentation

Several techniques have been developed to fight fragmentation. They can usually be classified into two categories: preemptive and retroactive. Due to the difficulty of predicting access patterns, these techniques are most often heuristic in nature and may degrade performance under unexpected workloads.

Preventing fragmentation

Preemptive techniques attempt to keep fragmentation at a minimum at the time data is being written on the disk. The simplest is appending data to an existing fragment in place where possible, instead of allocating new blocks to a new fragment.

Many of today's file systems attempt to preallocate longer chunks, or chunks from different free space fragments, called extents, to files that are actively appended to. This largely avoids file fragmentation when several files are concurrently being appended to, thus avoiding their becoming excessively intertwined.
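The effect of extent preallocation on concurrently appended files can be seen in a short simulation. It is a sketch only: the round-robin append pattern, the function names and the chunk sizes are assumptions, and real allocators are considerably more elaborate.

```python
from typing import List


def append_round_robin(num_files: int, blocks_each: int, chunk: int) -> List[List[int]]:
    """Files take turns appending one block, reserving `chunk` blocks at a time."""
    next_free = 0
    layout: List[List[int]] = [[] for _ in range(num_files)]
    reserved: List[List[int]] = [[] for _ in range(num_files)]  # set aside, not yet written
    for _ in range(blocks_each):
        for f in range(num_files):
            if not reserved[f]:
                # Reservation exhausted: claim the next `chunk` blocks for this file.
                reserved[f] = list(range(next_free, next_free + chunk))
                next_free += chunk
            layout[f].append(reserved[f].pop(0))
    return layout


def count_fragments(blocks: List[int]) -> int:
    """Number of contiguous runs in a file's ordered list of block numbers."""
    if not blocks:
        return 0
    return 1 + sum(1 for a, b in zip(blocks, blocks[1:]) if b != a + 1)


for chunk in (1, 4, 8):
    first_file = append_round_robin(num_files=2, blocks_each=8, chunk=chunk)[0]
    print(chunk, count_fragments(first_file))   # 1 -> 8, 4 -> 2, 8 -> 1
```

With no preallocation (a chunk of one block) the two files interleave block by block; reserving larger chunks reduces the number of fragments in proportion.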

If the final size of a file subject to modification is known, storage for the entire file may be preallocated. For example, the Microsoft Windows swap file (page file) is resized dynamically under normal operation, and often becomes highly fragmented. This can be prevented by specifying a page file with the same minimum and maximum sizes, effectively preallocating the entire file.

BitTorrent and other peer-to-peer filesharing applications limit fragmentation by preallocating the full space needed for a file when initiating downloads.
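An application that knows the final size in advance can request the space up front. The sketch below uses Python's `os.posix_fallocate`, which is available on Unix-like systems; the helper name `preallocate` and the fallback branch are assumptions for illustration. Whether the reserved range ends up contiguous is still decided by the file system's allocator.

```python
import os


def preallocate(path: str, size: int) -> None:
    """Reserve `size` bytes for `path` before any data is written."""
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o644)
    try:
        if hasattr(os, "posix_fallocate"):
            # Ask the file system to allocate storage for the whole range now.
            os.posix_fallocate(fd, 0, size)
        else:
            # Fallback: sets the length only; the file may remain sparse.
            os.ftruncate(fd, size)
    finally:
        os.close(fd)
```

A downloader could call `preallocate("movie.part", total_size)` once and then write pieces at their final offsets as they arrive.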

A relatively recent technique is delayed allocation in XFS, HFS+ and ZFS; the same technique is also called allocate-on-flush in reiser4 and ext4. When the file system is being written to, file system blocks are reserved, but the locations of specific files are not laid down yet. Later, when the file system is forced to flush changes as a result of memory pressure or a transaction commit, the allocator will have much better knowledge of the files' characteristics. Most file systems with this approach try to flush files in a single directory contiguously. Assuming that multiple reads from a single directory are common, locality of reference is improved. Reiser4 also orders the layout of files according to the directory hash table, so that when files are being accessed in the natural file system order (as dictated by readdir), they are always read sequentially.
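The idea of deferring placement until flush time can be sketched as follows. The `DelayedAllocator` class is hypothetical and models none of the named file systems; sorted path order stands in for "files in a single directory laid out together".

```python
from typing import Dict, List, Tuple


class DelayedAllocator:
    """Toy allocator that chooses block locations only when flushed."""

    def __init__(self) -> None:
        self.pending: Dict[str, int] = {}                    # path -> blocks buffered in memory
        self.extents: Dict[str, List[Tuple[int, int]]] = {}  # path -> (start, length) on disk
        self.next_free = 0

    def write(self, path: str, blocks: int) -> None:
        """Buffer a write: count the blocks, but pick no disk location yet."""
        self.pending[path] = self.pending.get(path, 0) + blocks

    def flush(self) -> None:
        """Place each buffered file as one contiguous extent, in sorted path order."""
        for path in sorted(self.pending):
            count = self.pending[path]
            self.extents.setdefault(path, []).append((self.next_free, count))
            self.next_free += count
        self.pending.clear()


fs = DelayedAllocator()
for _ in range(2):              # two files written in interleaved bursts
    fs.write("dir/a", 2)
    fs.write("dir/b", 2)
fs.flush()
print(fs.extents)               # {'dir/a': [(0, 4)], 'dir/b': [(4, 4)]}
```

Because the total size of each file is known by the time of the flush, the interleaved writes still produce one extent per file.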

Eliminating fragmentation

Retroactive techniques attempt to reduce fragmentation, or the negative effects of fragmentation, after it has occurred. Many file systems provide defragmentation tools, which attempt to reorder fragments of files, and sometimes also decrease their scattering (i.e. improve their contiguity, or locality of reference) by keeping either smaller files in directories, or directory trees, or even file sequences close to each other on the disk.

The HFS Plus file system transparently defragments files that are less than 20 MiB in size and are broken into 8 or more fragments, when the file is being opened.
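The rule just described can be written as a simple predicate. This sketch encodes only the two conditions stated above; the function name is hypothetical, and an actual implementation may check further conditions that are not covered here.

```python
MIB = 1024 * 1024


def should_defragment_on_open(size_bytes: int, fragment_count: int) -> bool:
    """True for files under 20 MiB that are split into 8 or more fragments."""
    return size_bytes < 20 * MIB and fragment_count >= 8


print(should_defragment_on_open(5 * MIB, 8))    # True
print(should_defragment_on_open(20 * MIB, 12))  # False: not under 20 MiB
```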

The now obsolete Commodore Amiga Smart File System (SFS) defragmented itself while the filesystem was in use. The defragmentation process is almost completely stateless (apart from the location it is working on), so that it can be stopped and started instantly. During defragmentation data integrity is ensured for both metadata and normal data.

See also

- Fragmentation

- Defragmentation

- File system

- List of defragmentation software

- Locality of reference