Data integration

Data integration involves combining data residing in different sources and providing users with a unified view of these data. This process becomes significant in a variety of situations, which include both commercial (when two similar companies need to merge their databases) and scientific (combining research results from different bioinformatics repositories, for example) domains. Data integration appears with increasing frequency as the volume of existing data and the need to share it explode. It has become the focus of extensive theoretical work, and numerous open problems remain unsolved. In management circles, people frequently refer to data integration as "Enterprise Information Integration" (EII).

History

Issues with combining heterogeneous data sources under a single query interface have existed for some time. The rapid adoption of databases after the 1960s naturally led to the need to share or to merge existing repositories. This merging can take place at several levels in the database architecture.

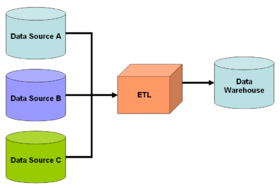

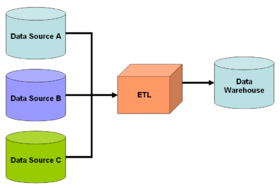

One popular solution is based on data warehousing (see figure 1). The warehouse system extracts, transforms, and loads data from heterogeneous sources into a single common queryable schema so that data from all sources becomes mutually compatible. This approach offers a tightly coupled architecture: because the data is already physically reconciled in a single repository at query time, it usually takes little time to resolve queries. However, problems arise with the "freshness" of the data, meaning that information in the warehouse is not always up to date. When an original data source is updated, the warehouse still retains outdated data until the ETL process is re-executed for synchronization. Difficulties also arise in constructing data warehouses when one has only a query interface to summary data sources and no access to the full data. This problem frequently emerges when integrating several commercial query services such as travel or classified advertisement web applications.
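The freshness problem can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the two in-memory "sources", their field names, and the warehouse schema `(city, temp_c)` are invented, and a real warehouse would of course use a database rather than a Python list.

```python
# Hypothetical source records; field names and values are invented for illustration.
source_a = [{"city": "Springfield", "temp_f": 68.0}]
source_b = [{"town": "Shelbyville", "temp_c": 25.0}]

def extract():
    """Read a snapshot (a copy) of every source."""
    return [dict(r) for r in source_a], [dict(r) for r in source_b]

def transform(rows_a, rows_b):
    """Reconcile both sources into the assumed warehouse schema (city, temp_c)."""
    unified = []
    for r in rows_a:
        celsius = round((r["temp_f"] - 32) * 5 / 9, 1)
        unified.append({"city": r["city"], "temp_c": celsius})
    for r in rows_b:
        unified.append({"city": r["town"], "temp_c": r["temp_c"]})
    return unified

def load(warehouse, rows):
    """Replace the warehouse contents with the freshly transformed rows."""
    warehouse.clear()
    warehouse.extend(rows)

def run_etl(warehouse):
    load(warehouse, transform(*extract()))

warehouse = []
run_etl(warehouse)
before = {r["city"]: r["temp_c"] for r in warehouse}

# A source changes after the load: the warehouse does not notice.
source_b[0]["temp_c"] = 30.0
stale = {r["city"]: r["temp_c"] for r in warehouse}

# Only re-running the ETL process synchronizes the warehouse again.
run_etl(warehouse)
fresh = {r["city"]: r["temp_c"] for r in warehouse}
```

Queries against `warehouse` are cheap because the data is already reconciled, but between the source update and the second `run_etl` call they return the outdated value.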

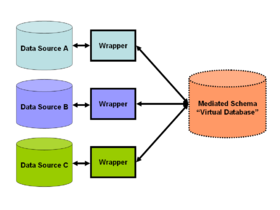

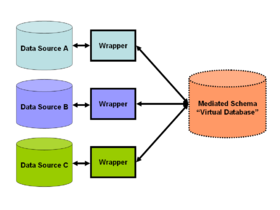

the trend in data integration has favored loosening the coupling between data and providing a unified query-interface to access real time data over a mediated schema (see figure 2), which makes information can be retrieved directly from original databases. This approach may need to specify mappings between the mediated schema and the schema of original sources, and transform a query into specialized queries to match the schema of the original databases. Therefore, this middleware

architecture is also termed as "view-based query-answering" because each data source is represented as a view

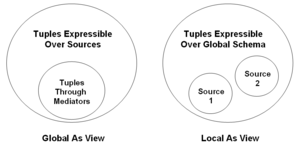

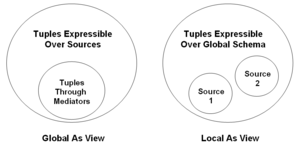

over the (nonexistent) mediated schema. Formally, computer scientists term such an approach "Local As View" (LAV) — where "Local" refers to the local sources/databases. An alternate model of integration has the mediated schema functioning as a view over the sources. This approach, called "Global As View" (GAV) — where "Global" refers to the global (mediated) schema — has attractions owing to the simplicity of answering queries by means of the mediated schema. However, it is necessary to reconstitute the view for the mediated schema whenever a new source gets integrated and/or an already integrated source modifies its schema.

Some of the work in data integration research concerns the semantic integration problem. This problem addresses not the structuring of the architecture of the integration, but how to resolve semantic conflicts between heterogeneous data sources. For example, if two companies merge their databases, certain concepts and definitions in their respective schemas, such as "earnings", may well have different meanings. In one database it may mean profits in dollars (a floating-point number), while in the other it might represent the number of sales (an integer). A common strategy for resolving such problems involves the use of ontologies, which explicitly define schema terms and thus help to resolve semantic conflicts. This approach represents ontology-based data integration.
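The "earnings" conflict can be sketched in a few lines. This is only a toy stand-in for an ontology: the concept names `ProfitUSD` and `UnitsSold`, the source names, and the annotation tables are all hypothetical, and a real ontology would also capture relationships between concepts.

```python
# Hypothetical shared vocabulary: concept name -> (description, Python type).
ONTOLOGY = {
    "ProfitUSD": ("profit in US dollars", float),
    "UnitsSold": ("number of sales", int),
}

# Each source declares which concept its local field denotes (assumed annotations).
SOURCE_ANNOTATIONS = {
    "company_a": {"earnings": "ProfitUSD"},
    "company_b": {"earnings": "UnitsSold"},
}

def to_concepts(source, record):
    """Rename annotated local fields to ontology concepts and coerce their types."""
    out = {}
    for field, value in record.items():
        concept = SOURCE_ANNOTATIONS[source].get(field)
        if concept is None:
            out[field] = value  # fields without an annotation pass through unchanged
        else:
            _, py_type = ONTOLOGY[concept]
            out[concept] = py_type(value)
    return out

a = to_concepts("company_a", {"year": 2010, "earnings": "1250000.50"})
b = to_concepts("company_b", {"year": 2010, "earnings": "4200"})
```

After the translation the two "earnings" fields no longer collide: `a` carries a `ProfitUSD` value and `b` a `UnitsSold` value, so downstream code cannot silently add dollars to sales counts.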

Example

Consider a web application where a user can query a variety of information about cities (such as crime statistics, weather, hotels, and demographics). Traditionally, the information must be stored in a single database with a single schema. But any single enterprise would find information of this breadth difficult and expensive to collect. Even if the resources exist to gather the data, it would likely duplicate data in existing crime databases, weather websites, and census data.

A data-integration solution may address this problem by considering these external resources as materialized views over a virtual mediated schema, resulting in "virtual data integration". This means application developers construct a virtual schema, the mediated schema, to best model the kinds of answers their users want. Next, they design "wrappers" or adapters for each data source, such as the crime database and weather website. These adapters simply transform the local query results (those returned by the respective websites or databases) into an easily processed form for the data integration solution (see figure 2). When an application user queries the mediated schema, the data-integration solution transforms this query into appropriate queries over the respective data sources. Finally, the virtual database combines the results of these queries into the answer to the user's query.

This solution offers the convenience of adding new sources by simply constructing an adapter or an application software blade for them. It contrasts with ETL systems or with a single database solution, which require manual integration of an entire new dataset into the system. Virtual ETL solutions leverage the virtual mediated schema to implement data harmonization, whereby the data is copied from the designated "master" source to the defined targets, field by field. Advanced data virtualization is also built on the concept of object-oriented modeling in order to construct a virtual mediated schema or virtual metadata repository, using a hub-and-spoke architecture.
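The wrapper idea in the city example can be sketched as follows. The data, the wrapper functions, and the `query_city` entry point are all hypothetical; real wrappers would talk to a database or a website rather than to in-memory structures.

```python
# Hypothetical stand-ins for two external sources.
CRIME_DB = [("Springfield", 120), ("Shelbyville", 45)]
WEATHER_SITE = {"springfield": "sunny", "shelbyville": "rain"}

def crime_wrapper(city):
    """Adapter: expose crime rows in the mediated form {'city', 'crimes'}."""
    return [{"city": c, "crimes": n} for c, n in CRIME_DB if c == city]

def weather_wrapper(city):
    """Adapter: expose the weather lookup in the mediated form {'city', 'weather'}."""
    forecast = WEATHER_SITE.get(city.lower())
    return [] if forecast is None else [{"city": city, "weather": forecast}]

# Registering a new source means adding one more entry here.
WRAPPERS = {"crimes": crime_wrapper, "weather": weather_wrapper}

def query_city(city, attributes):
    """Answer a query over the mediated schema by delegating to the wrappers."""
    answer = {"city": city}
    for attr in attributes:
        for row in WRAPPERS[attr](city):
            answer[attr] = row[attr]
    return answer

result = query_city("Springfield", ["crimes", "weather"])
missing = query_city("Nowhere", ["weather"])
```

No data is copied ahead of time: each call to `query_city` reaches into the sources when the query is posed, which is the main contrast with the warehouse approach.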

Theory of data integration

The theory of data integration forms a subset of database theory and formalizes the underlying concepts of the problem in first-order logic. Applying the theories gives indications as to the feasibility and difficulty of data integration. While its definitions may appear abstract, they have sufficient generality to accommodate all manner of integration systems.

Definitions

Data integration systems are formally defined as a triple $\langle G, S, M \rangle$ where $G$ is the global (or mediated) schema, $S$ is the heterogeneous set of source schemas, and $M$ is the mapping that maps queries between the source and the global schemas. Both $G$ and $S$ are expressed in languages over alphabets composed of symbols for each of their respective relations. The mapping $M$ consists of assertions between queries over $G$ and queries over $S$. When users pose queries over the data integration system, they pose queries over $G$, and the mapping then asserts connections between the elements in the global schema and the source schemas.

A database over a schema is defined as a set of sets, one for each relation (in a relational database). The database corresponding to the source schema $S$ would comprise the set of sets of tuples for each of the heterogeneous data sources and is called the source database. Note that this single source database may actually represent a collection of disconnected databases. The database corresponding to the virtual mediated schema $G$ is called the global database. The global database must satisfy the mapping $M$ with respect to the source database. The legality of this mapping depends on the nature of the correspondence between $G$ and $S$. Two popular ways to model this correspondence exist: Global as View (GAV) and Local as View (LAV).
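As a rough illustration of these definitions, the sketch below represents the global schema, the source schemas, and the mapping as plain Python data, and a database as one set of tuples per relation. The class names, the `conforms` helper, and the example relations are hypothetical; the mapping is left as an opaque list because its form depends on whether GAV or LAV is chosen.

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class Relation:
    name: str
    attributes: tuple  # attribute names, in order

@dataclass
class IntegrationSystem:
    G: dict                                 # global schema: relation name -> Relation
    S: dict                                 # source schemas: relation name -> Relation
    M: list = field(default_factory=list)   # mapping assertions, kept opaque here

def conforms(database, schema):
    """A database is one set of tuples per relation, each tuple of the right arity."""
    if set(database) != set(schema):
        return False
    return all(
        len(t) == len(schema[name].attributes)
        for name, tuples in database.items()
        for t in tuples
    )

system = IntegrationSystem(
    G={"weather": Relation("weather", ("city", "forecast"))},
    S={"site_report": Relation("site_report", ("town", "sky", "temp_c"))},
)
source_db = {"site_report": {("Springfield", "sunny", 20.0)}}
bad_db = {"site_report": {("Springfield", "sunny")}}  # wrong arity
```

Here `source_db` is a database over the source schemas, while `bad_db` is rejected because its tuple does not have three components.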

GAV systems model the global database as a set of views over $S$. In this case $M$ associates to each element of $G$ a query over $S$. Query processing becomes a straightforward operation due to the well-defined associations between $G$ and $S$. The burden of complexity falls on implementing mediator code instructing the data integration system exactly how to retrieve elements from the source databases. If any new sources join the system, considerable effort may be necessary to update the mediator; thus the GAV approach appears preferable when the sources seem unlikely to change.

In a GAV approach to the example data integration system above, the system designer would first develop mediators for each of the city information sources and then design the global schema around these mediators. For example, suppose one of the sources serves a weather website. The designer would likely then add a corresponding element for weather to the global schema. Then the bulk of effort concentrates on writing the proper mediator code that will transform predicates on weather into a query over the weather website. This effort can become complex if some other source also relates to weather, because the designer may need to write code to properly combine the results from the two sources.
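A minimal sketch of the GAV idea, under invented assumptions: two hypothetical sources (`site_report` and `station_log`) both know about weather, and the designer writes the view that combines them. The function and relation names are illustrative only.

```python
# Hypothetical source database: two sources that both hold weather tuples.
SOURCES = {
    "site_report": {("Springfield", "sunny"), ("Shelbyville", "rain")},
    "station_log": {("Springfield", "sunny"), ("Capital City", "fog")},
}

def weather_view(sources):
    """Mediator code: the designer decides how the sources combine (a plain union)."""
    return sources["site_report"] | sources["station_log"]

# GAV mapping: every global relation is defined as a view over the sources.
GAV_MAPPING = {"weather": weather_view}

def answer(global_relation, predicate):
    """Unfold the global relation into its view, then filter the result."""
    tuples = GAV_MAPPING[global_relation](SOURCES)
    return sorted(t for t in tuples if predicate(t))

everything = answer("weather", lambda t: True)
sunny = answer("weather", lambda t: t[1] == "sunny")
```

Answering a query is a direct lookup and evaluation, but adding a third weather source would mean editing `weather_view`, which is the maintenance cost described above.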

On the other hand, in LAV, the source database is modeled as a set of views over $G$. In this case $M$ associates to each element of $S$ a query over $G$. Here the exact associations between $G$ and $S$ are no longer well-defined. As is illustrated in the next section, the burden of determining how to retrieve elements from the sources is placed on the query processor. The benefit of LAV modeling is that new sources can be added with far less work than in a GAV system; thus the LAV approach should be favored in cases where the mediated schema is more stable and unlikely to change.

In an LAV approach to the example data integration system above, the system designer designs the global schema first and then simply inputs the schemas of the respective city information sources. Suppose again that one of the sources serves a weather website. The designer would add corresponding elements for weather to the global schema only if none existed already. Then programmers write an adapter or wrapper for the website and add a schema description of the website's results to the source schemas. The complexity of adding the new source moves from the designer to the query processor.
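The LAV side can be sketched in the same toy style. The assumptions are loud ones: the global relation `weather(city, forecast, region)`, the source names, and the very restricted description language (a source is a selection of the global relation that fixes some attribute values) are all invented for illustration and are far simpler than real LAV source descriptions.

```python
# LAV mapping: each source is described as a view over the global relation.
SOURCE_DESCRIPTIONS = {
    "north_site": {"relation": "weather", "fixed": {"region": "north"}},
    "south_site": {"relation": "weather", "fixed": {"region": "south"}},
}
SOURCE_DATA = {
    "north_site": [{"city": "Springfield", "forecast": "snow", "region": "north"}],
    "south_site": [{"city": "Shelbyville", "forecast": "sunny", "region": "south"}],
}

def relevant_sources(relation, conditions):
    """The query processor, not the designer, works out which sources can help."""
    chosen = []
    for name, desc in SOURCE_DESCRIPTIONS.items():
        if desc["relation"] != relation:
            continue
        clash = any(
            key in conditions and conditions[key] != value
            for key, value in desc["fixed"].items()
        )
        if not clash:
            chosen.append(name)
    return chosen

def answer(relation, conditions):
    """Collect matching rows from every source that may contribute."""
    rows = []
    for name in relevant_sources(relation, conditions):
        rows += [r for r in SOURCE_DATA[name]
                 if all(r[k] == v for k, v in conditions.items())]
    return rows

before = relevant_sources("weather", {"region": "east"})

# Adding a source only requires a new description and its data.
SOURCE_DESCRIPTIONS["east_site"] = {"relation": "weather", "fixed": {"region": "east"}}
SOURCE_DATA["east_site"] = [{"city": "Capital City", "forecast": "fog", "region": "east"}]

after = relevant_sources("weather", {"region": "east"})
sunny = answer("weather", {"forecast": "sunny"})
```

Nothing that existed before had to be edited when `east_site` joined; the extra work happens inside `relevant_sources` at query time.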

Query processing

One can loosely think of a conjunctive query as a logical function applied to the relations of a database, such as "$f(A, B)$ where $A < B$". If a tuple or set of tuples is substituted into the rule and satisfies it (makes it true), then that tuple is considered part of the set of answers to the query. While formal languages like Datalog express these queries concisely and without ambiguity, common SQL queries count as conjunctive queries as well.
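To make the notion concrete, here is a small sketch of a conjunctive-query evaluator. The representation (a head as a list of variables, a body as a list of `(relation, variables)` atoms) and the example relations are assumptions chosen for brevity; the evaluator handles only variables, with no constants or comparisons.

```python
def evaluate(head_vars, body, database):
    """Evaluate a conjunctive query: every atom in the body must hold at once."""
    bindings = [{}]
    for relation, variables in body:
        extended = []
        for binding in bindings:
            for tup in database[relation]:
                candidate = dict(binding)
                consistent = True
                for var, value in zip(variables, tup):
                    # setdefault binds a new variable or returns the existing binding.
                    if candidate.setdefault(var, value) != value:
                        consistent = False
                        break
                if consistent:
                    extended.append(candidate)
        bindings = extended
    return {tuple(b[v] for v in head_vars) for b in bindings}

database = {
    "lives_in": {("ann", "Springfield"), ("bob", "Shelbyville")},
    "weather": {("Springfield", "sunny"), ("Shelbyville", "rain")},
}

# Datalog-style: q(P, F) :- lives_in(P, C), weather(C, F).
# Roughly the SQL join:
#   SELECT l.person, w.forecast FROM lives_in l JOIN weather w ON l.city = w.city
answers = evaluate(["P", "F"], [("lives_in", ("P", "C")), ("weather", ("C", "F"))], database)
```

The shared variable `C` plays the role of the join condition: a tuple contributes to the answer only if it binds `C` consistently across both atoms.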

In terms of data integration, "query containment" represents an important property of conjunctive queries. A query $A$ contains another query $B$ (denoted $A \supset B$) if the results of applying $B$ are a subset of the results of applying $A$ for any database. The two queries are said to be equivalent if the resulting sets are equal for any database. This is important because in both GAV and LAV systems, a user poses conjunctive queries over a virtual schema represented by a set of views, or "materialized" conjunctive queries. Integration seeks to rewrite the queries represented by the views to make their results equivalent to or maximally contained in the user's query. This corresponds to the problem of answering queries using views (AQUV).
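Containment between conjunctive queries can be tested mechanically. The sketch below uses the standard canonical-database technique, which is not described in the text above: the variables of the contained query are "frozen" into constants, and the containing query is evaluated over the resulting tiny database. It assumes queries with variables only (no constants or comparisons) and heads of equal length; the query representation and the `edge` relation are hypothetical.

```python
def evaluate(head_vars, body, database):
    """Answers of a conjunctive query given as (relation, variables) atoms."""
    bindings = [{}]
    for relation, variables in body:
        extended = []
        for binding in bindings:
            for tup in database.get(relation, ()):
                candidate = dict(binding)
                if all(candidate.setdefault(v, x) == x for v, x in zip(variables, tup)):
                    extended.append(candidate)
        bindings = extended
    return {tuple(b[v] for v in head_vars) for b in bindings}

def contains(query_a, query_b):
    """True if every answer of query_b is also an answer of query_a on any database."""
    head_a, body_a = query_a
    head_b, body_b = query_b
    # Freeze query_b: each of its variables becomes a distinct constant.
    canonical_db = {}
    for relation, variables in body_b:
        canonical_db.setdefault(relation, set()).add(tuple(variables))
    return tuple(head_b) in evaluate(head_a, body_a, canonical_db)

# a(X) :- edge(X, Y)                 nodes with an outgoing edge
# b(X) :- edge(X, Y), edge(Y, Z)     nodes that start a path of length two
query_a = (["X"], [("edge", ("X", "Y"))])
query_b = (["X"], [("edge", ("X", "Y")), ("edge", ("Y", "Z"))])

a_contains_b = contains(query_a, query_b)
b_contains_a = contains(query_b, query_a)
```

Every node that starts a path of length two also has an outgoing edge, so `query_a` contains `query_b`, while the converse fails on a database with a single edge.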

In GAV systems, a system designer writes mediator code to define the query-rewriting. Each element in the user's query corresponds to a substitution rule just as each element in the global schema corresponds to a query over the source. Query processing simply expands the subgoals of the user's query according to the rule specified in the mediator and thus the resulting query is likely to be equivalent. While the designer does the majority of the work beforehand, some GAV systems such as Tsimmis involve simplifying the mediator description process.
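The expansion step can be sketched symbolically. The mediator rules, relation names, and the fresh-variable naming scheme below are hypothetical, and each global relation is assumed to have exactly one rule.

```python
import itertools

# Hypothetical mediator rules: global relation -> (head variables, body of source atoms).
RULES = {
    "weather": (("City", "Forecast"), [("site_report", ("City", "Forecast", "Temp"))]),
    "crime": (("City", "Count"), [("police_db", ("City", "Count"))]),
}

def unfold(query_body):
    """Replace each subgoal over the global schema by the body of its mediator rule."""
    counter = itertools.count()
    rewritten = []
    for relation, args in query_body:
        head_vars, rule_body = RULES[relation]
        n = next(counter)
        rename = dict(zip(head_vars, args))
        for src_relation, src_vars in rule_body:
            # Variables that occur only in the rule body get a fresh, unique name.
            new_vars = tuple(rename.get(v, f"{v}_{n}") for v in src_vars)
            rewritten.append((src_relation, new_vars))
    return rewritten

# User query over the global schema: q(C, F, N) :- weather(C, F), crime(C, N).
user_query = [("weather", ("C", "F")), ("crime", ("C", "N"))]
rewritten = unfold(user_query)
```

The rewritten body mentions only source relations, so it can be executed directly against the sources; the shared variable `C` is preserved, which keeps the join between the two subgoals intact.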

In LAV systems, queries undergo a more radical process of rewriting because no mediator exists to align the user's query with a simple expansion strategy. The integration system must execute a search over the space of possible queries in order to find the best rewrite. The resulting rewrite may not be an equivalent query but only a maximally contained one, and the resulting tuples may be incomplete. The MiniCon algorithm is the leading query rewriting algorithm for LAV data integration systems.

In general, the complexity of query rewriting is NP-complete. If the space of rewrites is relatively small, this does not pose a problem, even for integration systems with hundreds of sources.

Large-scale questions in science, such as those concerning invasive species spread and resource depletion, increasingly require the collection of disparate data sets for meta-analysis. This type of data integration is especially challenging for ecological and environmental data because metadata standards are not agreed upon and many different data types are produced in these fields. National Science Foundation initiatives such as Datanet are intended to make data integration easier for scientists by providing cyberinfrastructure and setting standards. The two funded Datanet initiatives are DataONE and the Data Conservancy.

Theory of data integration

The theory of data integration forms a subset of database theory and formalizes the underlying concepts of the problem in first-order logicFirst-order logic

First-order logic is a formal logical system used in mathematics, philosophy, linguistics, and computer science. It goes by many names, including: first-order predicate calculus, the lower predicate calculus, quantification theory, and predicate logic...

. Applying the theories gives indications as to the feasibility and difficulty of data integration. While its definitions may appear abstract, they have sufficient generality to accommodate all manner of integration systems.

Definitions

Data integration systems are formally defined as a tripleTriple

Triple, a doublet of "treble" or "threefold" , is used in several contexts:* Triple metre, a musical metre characterized by a primary division of three beats to the bar...

where

where  is the global (or mediated) schema,

is the global (or mediated) schema,  is the heterogeneous set of source schemas, and

is the heterogeneous set of source schemas, and  is the mapping that maps queries between the source and the global schemas. Both

is the mapping that maps queries between the source and the global schemas. Both  and

and  are expressed in languages

are expressed in languagesFormal language

A formal language is a set of words—that is, finite strings of letters, symbols, or tokens that are defined in the language. The set from which these letters are taken is the alphabet over which the language is defined. A formal language is often defined by means of a formal grammar...

over alphabets composed of symbols for each of their respective relations

Relational database

A relational database is a database that conforms to relational model theory. The software used in a relational database is called a relational database management system . Colloquial use of the term "relational database" may refer to the RDBMS software, or the relational database itself...

. The mapping

Functional predicate

In formal logic and related branches of mathematics, a functional predicate, or function symbol, is a logical symbol that may be applied to an object term to produce another object term....

consists of assertions between queries over

consists of assertions between queries over  and queries over

and queries over  . When users pose queries over the data integration system, they pose queries over

. When users pose queries over the data integration system, they pose queries over  and the mapping then asserts connections between the elements in the global schema and the source schemas.

and the mapping then asserts connections between the elements in the global schema and the source schemas.A database over a schema is defined as a set of sets, one for each relation (in a relational database). The database corresponding to the source schema

would comprise the set of sets of tuples for each of the heterogeneous data sources and is called the source database. Note that this single source database may actually represent a collection of disconnected databases. The database corresponding to the virtual mediated schema

would comprise the set of sets of tuples for each of the heterogeneous data sources and is called the source database. Note that this single source database may actually represent a collection of disconnected databases. The database corresponding to the virtual mediated schema  is called the global database. The global database must satisfy the mapping

is called the global database. The global database must satisfy the mapping  with respect to the source database. The legality of this mapping depends on the nature of the correspondence between

with respect to the source database. The legality of this mapping depends on the nature of the correspondence between  and

and  . Two popular ways to model this correspondence exist: Global as View or GAV and Local as View or LAV.

. Two popular ways to model this correspondence exist: Global as View or GAV and Local as View or LAV.

GAV systems model the global database as a set of views over S. In this case M associates to each element of G a query over S. Query processing becomes a straightforward operation due to the well-defined associations between G and S. The burden of complexity falls on implementing mediator code that instructs the data integration system exactly how to retrieve elements from the source databases. If any new sources join the system, considerable effort may be necessary to update the mediator, so the GAV approach appears preferable when the sources seem unlikely to change.

In a GAV approach to the example data integration system above, the system designer would first develop mediators for each of the city information sources and then design the global schema around these mediators. For example, suppose one of the sources serves a weather website. The designer would likely then add a corresponding element for weather to the global schema. The bulk of the effort then concentrates on writing the mediator code that transforms predicates on weather into a query over the weather website. This effort can become complex if some other source also relates to weather, because the designer may need to write code to properly combine the results from the two sources.
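The GAV idea can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the source names (`weather_site`, `airport_feed`), their tuples, and the choice to combine two weather sources by a duplicate-free union. It shows only that each element of the global schema is defined by mediator code written over the sources.

```python
# Hypothetical source database S: each source exposes its own relation
# of (city, temp_c) tuples. Names and data are invented for illustration.
SOURCES = {
    "weather_site": [("Paris", 18), ("Oslo", 7)],
    "airport_feed": [("Oslo", 7), ("Cairo", 31)],
}

def weather_view(sources):
    """Global relation weather(city, temp_c), defined as a query over S."""
    # Mediator logic: combine both weather sources and drop duplicates.
    combined = set(sources["weather_site"]) | set(sources["airport_feed"])
    return sorted(combined)

# The GAV mapping M: one query over the sources per global-schema element.
GAV_MAPPING = {"weather": weather_view}

def global_relation(name, sources=SOURCES):
    """Materialize one element of the global schema from the sources."""
    return GAV_MAPPING[name](sources)
```

Adding a third weather source in this sketch means editing `weather_view` itself, which mirrors the maintenance cost attributed to GAV above.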

On the other hand, in LAV, the source database is modeled as a set of views over G. In this case M associates to each element of S a query over G. Here the exact associations between G and S are no longer well-defined. As is illustrated in the next section, the burden of determining how to retrieve elements from the sources is placed on the query processor. The benefit of LAV modeling is that new sources can be added with far less work than in a GAV system, so the LAV approach should be favored in cases where the mediated schema is stable and unlikely to change.

In an LAV approach to the example data integration system above, the system designer designs the global schema first and then simply inputs the schemas of the respective city information sources. Consider again the case where one of the sources serves a weather website. The designer would add corresponding elements for weather to the global schema only if none existed already. Then programmers write an adapter or wrapper for the website and add a schema description of the website's results to the source schemas. The complexity of adding the new source moves from the designer to the query processor.
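By contrast, an LAV mapping can be sketched as a list of source descriptions, each one a query over the global schema. The schema, the `SourceView` class, and the `add_source` helper below are hypothetical names chosen for this illustration, not part of any particular system.

```python
from dataclasses import dataclass

# Hypothetical global (mediated) schema G: relation name -> attribute names.
GLOBAL_SCHEMA = {
    "weather": ("city", "temp_c"),
    "hotel": ("city", "hotel_name"),
}

@dataclass(frozen=True)
class SourceView:
    """One LAV assertion: a source relation described as a query over G."""
    source: str   # name of the source relation
    head: tuple   # variables the source exposes
    body: tuple   # atoms (relation, args) over the global schema

def add_source(mapping, view, schema=GLOBAL_SCHEMA):
    """Return a new mapping M that also describes `view`; G is not modified."""
    for relation, args in view.body:
        if relation not in schema:
            raise ValueError(f"{relation!r} is not in the global schema")
        if len(args) != len(schema[relation]):
            raise ValueError(f"wrong number of arguments for {relation!r}")
    return mapping + [view]

# Describing the weather website only requires one new assertion.
weather_site = SourceView(
    source="weather_site",
    head=("C", "T"),
    body=(("weather", ("C", "T")),),
)
mapping = add_source([], weather_site)
```

Registering a source here never touches `GLOBAL_SCHEMA` or the descriptions of other sources; working out how to answer a query from these descriptions is left to the query processor.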

Query processing

The theory of query processing in data integration systems is commonly expressed using conjunctive queries. One can loosely think of a conjunctive query as a logical function applied to the relations of a database such as "f(A,B) where A < B". If a tuple or set of tuples is substituted into the rule and satisfies it (makes it true), then we consider that tuple as part of the set of answers in the query. While formal languages like Datalog express these queries concisely and without ambiguity, common SQL queries count as conjunctive queries as well.
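As a small illustration, the following Python sketch evaluates two conjunctive queries over relations stored as sets of tuples. The rule "f(A, B) where A < B" and the two-subgoal query are assumed examples, and the relation contents in the usage note are invented.

```python
def answers(f):
    """Answers to the rule 'f(A, B) where A < B' over relation f."""
    # A tuple is an answer exactly when substituting it makes the rule true.
    return {(a, b) for (a, b) in f if a < b}

def join_query(r, s):
    """Answers to q(X, Z) :- r(X, Y), s(Y, Z), a query with two subgoals."""
    # The shared variable Y forces the two subgoals to agree on that value.
    return {(x, z) for (x, y) in r for (y2, z) in s if y == y2}
```

For instance, `answers({(1, 2), (3, 1), (2, 2)})` keeps only `(1, 2)`, the single tuple whose first component is smaller than its second.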

In terms of data integration, "query containment" represents an important property of conjunctive queries. A query A contains another query B (denoted A ⊃ B) if the results of applying B are a subset of the results of applying A for any database. The two queries are said to be equivalent if the resulting sets are equal for any database. This is important because in both GAV and LAV systems, a user poses conjunctive queries over a virtual schema represented by a set of views, or "materialized" conjunctive queries. Integration seeks to rewrite the queries represented by the views so that their results are equivalent to, or maximally contained in, the user's query. This corresponds to the problem of answering queries using views (AQUV).
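For conjunctive queries without comparison predicates, containment can be decided by searching for a containment mapping (a homomorphism) from the containing query into the contained one. The sketch below is a minimal version under several assumptions: a query is a pair of head terms and a list of body atoms, variables are strings beginning with an uppercase letter, and every other term is a constant. The example queries are hypothetical.

```python
def is_var(term):
    """Assumed convention: variables are strings starting with an uppercase letter."""
    return isinstance(term, str) and term[:1].isupper()

def extend(mapping, src, dst):
    """Extend a variable mapping so that the terms src map onto dst, or return None."""
    if len(src) != len(dst):
        return None
    m = dict(mapping)
    for s, d in zip(src, dst):
        if is_var(s):
            if m.setdefault(s, d) != d:   # variable already bound to something else
                return None
        elif s != d:                      # constants must map to themselves
            return None
    return m

def contains(q_big, q_small):
    """True if q_big contains q_small, i.e. q_small's answers are always a subset."""
    head_big, body_big = q_big
    head_small, body_small = q_small
    start = extend({}, head_big, head_small)
    if start is None:
        return False

    def search(i, m):
        # Map the i-th atom of q_big onto some atom of q_small, then recurse.
        if i == len(body_big):
            return True
        rel, args = body_big[i]
        for rel_s, args_s in body_small:
            if rel_s == rel:
                m2 = extend(m, args, args_s)
                if m2 is not None and search(i + 1, m2):
                    return True
        return False

    return search(0, start)

# q(X) :- r(X, Y)          and          q(X) :- r(X, Y), s(Y)
QUERY_A = (("X",), [("r", ("X", "Y"))])
QUERY_B = (("X",), [("r", ("X", "Y")), ("s", ("Y",))])
```

Here `contains(QUERY_A, QUERY_B)` holds because the second query only adds a restriction, while `contains(QUERY_B, QUERY_A)` does not. Two queries are equivalent when the check succeeds in both directions.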

In GAV systems, a system designer writes mediator code to define the query-rewriting. Each element in the user's query corresponds to a substitution rule just as each element in the global schema corresponds to a query over the source. Query processing simply expands the subgoals of the user's query according to the rule specified in the mediator and thus the resulting query is likely to be equivalent. While the designer does the majority of the work beforehand, some GAV systems such as Tsimmis involve simplifying the mediator description process.
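The expansion step can be sketched as a simple unfolding of subgoals. The mediator rules, relation names, and the fresh-variable naming scheme below are assumptions for illustration; the sketch also assumes that rule bodies contain only variables and that generated names such as `H_0` do not clash with variables in the user's query.

```python
from itertools import count

# Hypothetical mediator rules: global relation -> (head variables, body over sources).
RULES = {
    "weather": (("C", "T"), [("weather_site", ("C", "T", "H"))]),
    "in_country": (("C", "N"), [("geo_db", ("C", "N"))]),
}

def unfold(query_body, rules=RULES):
    """Replace each global subgoal by the source-level body of its mediator rule."""
    fresh = count()
    expanded = []
    for rel, args in query_body:
        head_vars, body = rules[rel]
        subst = dict(zip(head_vars, args))   # bind rule head to the subgoal's terms
        n = next(fresh)
        for src_rel, src_args in body:
            # Variables local to the rule get a per-subgoal suffix to stay distinct.
            new_args = tuple(subst.get(a, f"{a}_{n}") for a in src_args)
            expanded.append((src_rel, new_args))
    return expanded
```

Unfolding the query body `[("weather", ("X", "T")), ("in_country", ("X", "norway"))]` yields `[("weather_site", ("X", "T", "H_0")), ("geo_db", ("X", "norway"))]`, a query stated entirely over the sources.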

In LAV systems, queries undergo a more radical process of rewriting because no mediator exists to align the user's query with a simple expansion strategy. The integration system must execute a search over the space of possible queries in order to find the best rewrite. The resulting rewrite may not be an equivalent query but only a maximally contained one, and the resulting tuples may be incomplete. The MiniCon algorithm is the leading query rewriting algorithm for LAV data integration systems.
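The search space can be made concrete with a sketch of candidate generation only, loosely in the spirit of the bucket algorithm and not an implementation of MiniCon. The view names and descriptions are hypothetical, variable bindings are ignored, and every candidate produced would still have to be expanded and checked for containment in the user's query before it could be used.

```python
from itertools import product

# Hypothetical LAV source descriptions: view name -> body over the global schema.
VIEWS = {
    "v_weather_site": [("weather", ("C", "T"))],
    "v_travel_guide": [("weather", ("C", "T")), ("hotel", ("C", "H"))],
    "v_hotel_list": [("hotel", ("C", "H"))],
}

def buckets(query_body, views=VIEWS):
    """For each query subgoal, list the views whose description mentions its relation."""
    return [
        sorted(
            name
            for name, body in views.items()
            if any(rel == q_rel for rel, _ in body)
        )
        for q_rel, _ in query_body
    ]

def candidate_rewrites(query_body, views=VIEWS):
    """Every way of picking one view per subgoal; each pick is only a candidate."""
    return list(product(*buckets(query_body, views)))
```

For a query with a `weather` subgoal and a `hotel` subgoal this produces four candidates, since each bucket holds two views. The count is the product of the bucket sizes, which is why the search can grow quickly as subgoals and sources are added.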

In general, the complexity of query rewriting is NP-complete. If the space of rewrites is relatively small this does not pose a problem, even for integration systems with hundreds of sources.

Data Integration in the Life Sciences

Large-scale questions in science, such as global warming, invasive species spread, and resource depletion, increasingly require the collection of disparate data sets for meta-analysis. This type of data integration is especially challenging for ecological and environmental data because metadata standards are not agreed upon and there are many different data types produced in these fields. National Science Foundation initiatives such as Datanet are intended to make data integration easier for scientists by providing cyberinfrastructure and setting standards. The two funded Datanet initiatives are DataONE and the Data Conservancy.

See also

- Business semantics management

- Core data integration

- Customer data integration

- Data curation

- Database model

- Data fusion

- Data mapping

- Dataspaces

- Data virtualization

- Data Warehousing

- Enterprise Architecture framework

- Edge data integration

- Enterprise application integration

- Enterprise Information Integration (EII)

- Enterprise integration

- Extract, transform, load

- Geodi: Geoscientific Data Integration

- Information integration

- Information Server

- Integration Competency Center

- Integration Consortium

- Jitterbit: an open-source software

- JXTA

- Master data management

- Object-relational mapping

- Ontology based data integration

- Open Text

- Schema Matching

- Semantic Integration

- SQL

- Three schema approach

- UDEF

- Web service